Adobe is Betting Big on Generative AI With a Raft of New Features

As a host of startups have ushered in a new era of artificial intelligence-powered image creation over the past year, speculation has mounted about how and when Adobe, one of the world's largest high-end creative software providers, would integrate this tech into its suite of programs.

The company answered that question Tuesday with a group of new features called Project Firefly that will weave machine learning capabilities like text-to-image generation, visual stylization and large language models across Adobe’s various design and marketing offerings. The first two of those models—image creation and generative design for text—will be available this week, with more slated to be unveiled in the future.

While Adobe sees generative AI as key to its platform’s future, the software giant has had to tread carefully into this space. It caters to a large client base of creators and artists who are concerned about the use of their work in training these models and the automation of their jobs, and the company wants to avoid the legal blowback that image generation startups have faced over copyright issues in the past several months.

"Generative AI and these large models are going to transform the world as we know it across the board, and the only way to succeed is to embrace this technology and make it useful to our customers," said Alexandru Costin, vice president, generative AI and Sensei at Adobe. "We don't think our creators will be replaced by AI, but we do think that creatives using AI are going to be more competitive than creatives not using AI."

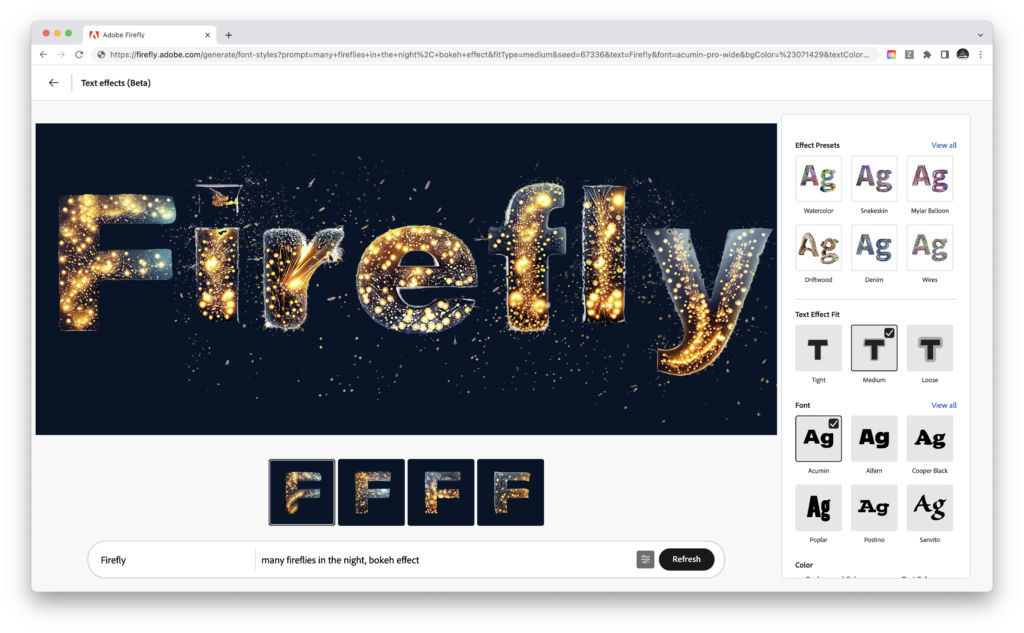

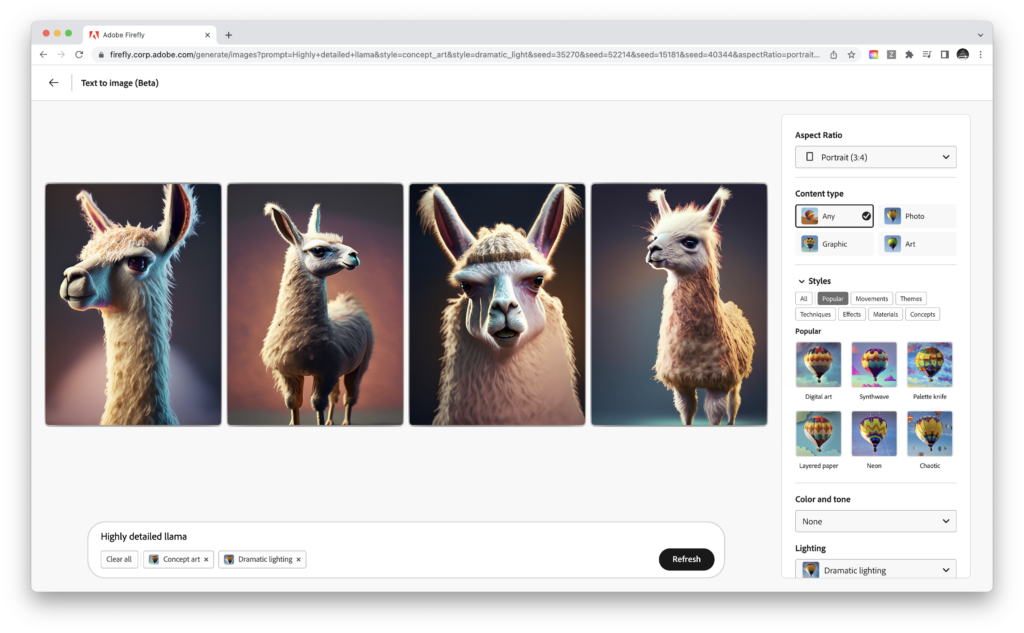

The tools Adobe is rolling out this week will let people create images from a detailed text prompt, a process similar to the one used by competing products like Midjourney and Stable Diffusion, and then generate filter-like styles and apply them to those images. Another feature, called text effects, will automatically generate designs, styles and textures within the letters of a given font.

In addition to integrating these tools into its marketing software, Adobe will roll out a feature that generates custom brand copy for advertising, drawing on models from Microsoft-backed OpenAI and other large language models.

“We want to position it as a copilot and make sure that it’s always there inside the core workflows, helping you as a marketer be more productive and create content personalized for your segments in a really easy way,” Costin said.

Costin said Adobe also has a slew of features in the pipeline as the company ramps up its investment in generative AI. Those include a feature that uses generation to extend or fill in parts of an existing image, as well as tools for 3D modeling and video effects.

A long time in the making

Some of the models that constitute Project Firefly have been in the works for up to three years, according to Costin, a period of time in which generative AI has undergone a transformation from garbled abstractions and meandering strings of text to passably realistic images and copy. But investment in the project picked up around a year ago when the release of research group OpenAI’s Dall-E 2 supercharged the field of AI image generation.

To avoid lawsuits like the one Getty Images filed against image generation startup Stability AI last month, Adobe has trained its image model only on Adobe Stock images and public domain media. The company also gives creators the option to exclude their work from training databases and plans to eventually draw up a compensation model for artists whose work is used.

When research began, it was unclear whether that training set of around 330 million images would be enough to produce a tool on par with models trained on billions of assets from across the web, Costin said.

“This is a question that we had to prove by doing, and our research organization took this challenge to heart,” Costin said. “We actually feel that we have achieved pretty good output quality, even if it’s not the whole internet.”

Although the model may be smaller, its training approach could ease the concerns of brands and agencies that, for legal reasons, have hesitated to use image generation for anything beyond internal ideation and communication.

The release comes as other big tech companies are scrambling to integrate the latest advances in AI into their products. Last week, Microsoft announced an initiative called Copilot that will embed AI into its enterprise tools like Word, PowerPoint, Outlook and Teams. Google is similarly bringing its own competing AI tools to Gmail, Docs and Slides.

https://www.adweek.com/creativity/adobe-is-betting-big-on-generative-ai-with-a-raft-of-new-features/