Better than holograms: 3D-animated starships can be viewed from any angle

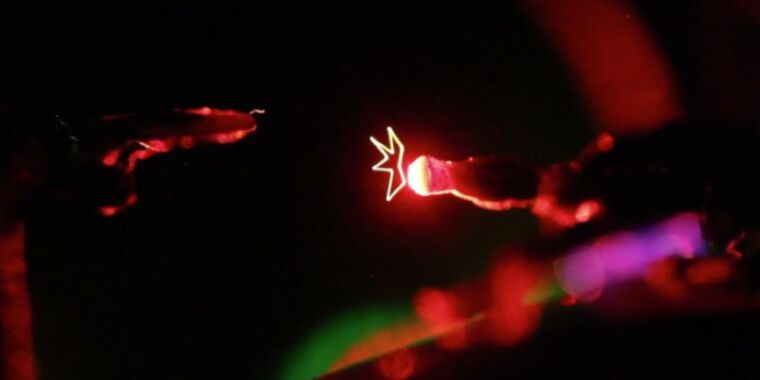

Scientists at Brigham Young University (BYU) have created tiny 3D animations out of light. The animations pay homage to Star Trek and Star Wars with tiny versions of the USS Enterprise and a Klingon battle cruiser launching photon torpedoes, as well as miniature green and red lightsabers with actual luminous beams. The animations are part of the scientists’ ongoing “Princess Leia project,” so dubbed because it was partly inspired by the iconic moment in Star Wars Episode IV: A New Hope when R2-D2 projects a recorded 3D image of Leia delivering a message to Obi-Wan Kenobi. The researchers described the latest advances in their screenless volumetric display technologies in a recent paper published in the journal Scientific Reports.

“What you’re seeing in the scenes we create is real; there is nothing computer generated about them,” said co-author Dan Smalley, a professor of electrical engineering at BYU. “This is not like the movies, where the lightsabers or the photon torpedoes never really existed in physical space. These are real, and if you look at them from any angle, you will see them existing in that space.”

The technology making this science fiction a potential reality is known as an optical trap display (OTD). These are not holograms; they’re volumetric images that seem to float in the air and can be viewed from any angle. A holographic display scatters light across a 2D surface, and microscopic interference patterns make the light look as if it is coming from objects in front of, or behind, the display surface. So with holograms, one must be looking at that surface to see the 3D image. In contrast, a volumetric display consists of scattering surfaces distributed throughout the same 3D space occupied by the resulting 3D image. When you look at the image, you are also viewing the scattered light.

Photophoresis

Smalley likens the effect to Tony Stark’s interactive 3D displays in Iron Man or Avatar’s image-projecting table. The BYU volumetric display platform uses lasers to trap a single particle of a plant fiber called cellulose and heat it evenly. The trick uses a phenomenon called photophoresis, in which spherical lenses create aberrations in laser light, heating microscopic particles and trapping them inside the beam. Researchers use computer-controlled mirrors to push or pull the particle wherever they wish in the display space to create the desired image, all while illuminating it with a second set of lasers projecting visible red, green, and blue light.

The technology also exploits persistence of vision, a perceptual phenomenon that arises because the brain has a natural propensity to smooth over interruptions of stimuli. The brain retains an impression of light hitting the retina for roughly 1/10th to 1/15th of a second—just long enough so that the world doesn’t go black every time we blink. It can’t distinguish shifts in light that occur faster than that. This is the same principle behind classic animation or the flip books many of us made as children. Movies, like flip books, appear to show continuous motion, but in reality, images flash on the screen at a sufficiently fast rate that we perceive a flicker-free picture.

- A tiny starship Enterprise fires at a tiny Klingon battle cruiser: real animated images created in thin air.
- (Left) Recreating Princess Leia’s projected message to Obi-Wan Kenobi. (Right) Tiny figures dueling with lightsabers.
- A butterfly of light.
- A 3D Pokémon.
- Simulated virtual image of a crescent moon.
- Conceptual diagram of the optical trap display and simulated virtual images. Credit: Wesley Rogers & Daniel Smalley, 2021

In the case of Smalley et al.’s optical trap displays, persistence of vision means that a particle’s trajectory appears as a solid line, in an effect akin to waving a sparkler around in the dark. It’s almost like 3D printing with light. “The particle moves through every point in the image several times a second, creating an image by persistence of vision,” the authors wrote. “The higher the resolution and the refresh rate of the system, the more convincing this effect can be made, where the user will not be able to perceive updates to the imagery displayed to them, and at sufficient resolution will have difficulty distinguishing display image points from real-world image points.”
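The persistence-of-vision condition can be put into rough numbers. The sketch below is illustrative only and is not taken from the paper: it assumes the particle retraces one closed path at constant speed, and it uses the roughly 1/10th-of-a-second retention figure quoted above as the threshold. The function names, path lengths, and speeds are hypothetical.

```python
def refresh_rate_hz(path_length_mm: float, particle_speed_mm_per_s: float) -> float:
    """Return how many times per second the particle retraces the whole path.

    Assumes constant speed along a single closed path (a simplification).
    """
    if path_length_mm <= 0 or particle_speed_mm_per_s <= 0:
        raise ValueError("path length and speed must be positive")
    return particle_speed_mm_per_s / path_length_mm


def appears_continuous(
    path_length_mm: float,
    particle_speed_mm_per_s: float,
    persistence_s: float = 0.1,  # assumed retention time, from the ~1/10 s figure
) -> bool:
    """True if every point on the path is revisited within the retention time."""
    rate = refresh_rate_hz(path_length_mm, particle_speed_mm_per_s)
    return rate >= 1.0 / persistence_s


if __name__ == "__main__":
    # Hypothetical numbers: a 100 mm drawing traced at 2,000 mm/s.
    print(refresh_rate_hz(100.0, 2000.0))
    print(appears_continuous(100.0, 2000.0))
    # Doubling the detail (path length) at the same speed halves the refresh rate.
    print(appears_continuous(400.0, 2000.0))
```

Under these assumptions, a longer or more detailed path needs a proportionally faster particle to stay above the threshold, which is one way to read the authors’ remark about resolution and refresh rate.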

Back in 2018, the team used its system to create several tiny, screenless, free-floating images: a butterfly, a prism, a Pokémon, and a stretchy version of the BYU logo, for example. The researchers even produced an image of a team member dressed in a lab coat, crouched in the famous Princess Leia position. This latest work builds on those accomplishments to create simple animations in thin air. In addition to creating the spaceship and lightsaber battles, the BYU scientists also made virtual stick figures and animated them. The researchers’ students could even interact with the stick figures by placing fingers in the center of the display, creating the illusion that the figures were walking and jumping off the fingers.

“Most 3D displays require you to look at a screen, but our technology allows us to create images floating in space, and they’re physical, not some mirage,” said Smalley. “This technology can make it possible to create vibrant animated content that orbits around or crawls on or explodes out of everyday physical objects.”

The parallax view

The research has also addressed a key shortcoming of optical trap displays: the inability to show virtual images. While it’s theoretically possible to make volumetric images bigger than the display itself, creating an optically correct volumetric image of the moon, for example, would require an OTD scaled up to astronomical proportions. The authors drew an analogy to movie sets or theatrical stages, “where props and players must occupy a fixed space even when trying to capture a scene meant to occur outdoors or in outer space.” Theaters traditionally have overcome this limitation by using flat backdrops with pictorial 3D perspective and occlusion cues, among other tricks. Theaters can also employ projection backdrops, in which motion can be used to simulate parallax.

The BYU team drew inspiration from those theatrical tricks and decided to employ a time-varying perspective projection backdrop with their OTD system. This allowed the team to take advantage of perceptual tricks like motion parallax to make the display look bigger than its actual physical size. As proof of principle, the researchers simulated the image of a crescent moon appearing to move along the horizon behind a physical, 3D-printed miniature house.
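The geometry behind a perspective projection backdrop can be sketched in a few lines. This is a generic pinhole-style projection, not the BYU team’s published method: it assumes a flat backdrop at a fixed depth, a tracked eye position, and a virtual point somewhere behind the backdrop. The coordinate convention and the function name are hypothetical.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]


def project_to_backdrop(eye: Point3, virtual_point: Point3, backdrop_z: float) -> Tuple[float, float]:
    """Find where to draw on the plane z = backdrop_z so that, seen from `eye`,
    the drawn spot lines up with `virtual_point`.

    This intersects the eye-to-virtual-point ray with the backdrop plane.
    """
    ex, ey, ez = eye
    vx, vy, vz = virtual_point
    if vz == ez:
        raise ValueError("virtual point must not lie at the same depth as the eye")
    # Fraction of the way along the ray at which it crosses the backdrop plane.
    t = (backdrop_z - ez) / (vz - ez)
    return (ex + t * (vx - ex), ey + t * (vy - ey))


if __name__ == "__main__":
    moon = (0.0, 0.0, 100.0)  # hypothetical virtual point far behind the display
    backdrop_z = 10.0         # hypothetical backdrop depth

    # As the tracked eye moves sideways, the drawn spot must move too.
    for eye_x in (0.0, 5.0, 10.0):
        print(eye_x, project_to_backdrop((eye_x, 0.0, 0.0), moon, backdrop_z))
```

In this sketch, the drawn spot for a distant point follows the viewer almost one-for-one, while a point close to the backdrop would barely shift. That difference is the motion parallax cue, and it also shows why the approach depends on knowing where the viewer’s eye is.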

The next step is to figure out how best to scale the display volume up from the current 1 cm³ to more than 100 cm³ and to incorporate visual cues beyond parallax, such as occlusion. The experiment was limited by the need to track the viewer’s eye position and the fact that it was a monocular rather than binocular experiment (normal human vision is binocular). Making the OTD system binocular would require better control of directional scatter.

Despite these limitations, the BYU team believes its method of simulating virtual images with optical trap displays, combined with perspective projection surfaces, is still preferable to combining OTDs with holographic systems. “Unlike OTDs, holograms are extremely computationally intensive and their computational complexity scales rapidly with display size,” the researchers wrote. “Neither is true for OTD displays.”

The researchers point out that in order to create a backdrop of stars, a holographic display system would need terabytes of data per second to properly render star-like points, regardless of the number of stars in the backdrop. In contrast, OTDs would only require bandwidth proportional to the number of visible stars.

Scientific Reports, 2021. DOI: 10.1038/s41598-021-86495-6.


https://arstechnica.com/?p=1763689