CrowdStrike fixes start at “reboot up to 15 times” and get more complex from there

Airlines, payment processors, 911 call centers, TV networks, and other businesses have been scrambling this morning after a buggy update to CrowdStrike’s Falcon security software caused Windows-based systems to crash with a dreaded blue screen of death (BSOD) error message.

We’re updating our story about the outage with new details as we have them. Microsoft and CrowdStrike both say that “the affected update has been pulled,” so what’s most important for IT admins in the short term is getting their systems back up and running again. According to guidance from Microsoft, fixes range from annoying but easy to incredibly time-consuming and complex, depending on the number of systems you have to fix and the way your systems are configured.

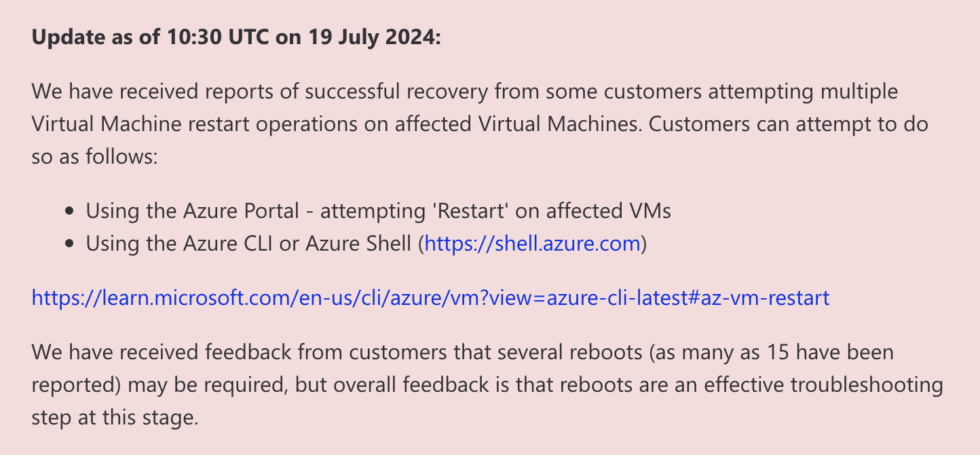

Microsoft’s Azure status page outlines several fixes. The first and easiest is simply to reboot affected machines repeatedly, which gives them multiple chances to grab CrowdStrike’s non-broken update before the bad driver can cause the BSOD. Microsoft says that some of its customers have had to reboot their systems as many as 15 times to pull down the update.

If rebooting doesn’t work

If rebooting multiple times isn’t fixing your problem, Microsoft recommends restoring your systems using a backup from before 4:09 UTC on July 19 (just after midnight on Friday, Eastern time), when CrowdStrike began pushing out the buggy update. CrowdStrike says a reverted version of the file was deployed at 5:27 UTC.
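For admins sorting through a pile of snapshots, that cutoff check can be sketched in a few lines of Python. This is only an illustration, not anything Microsoft or CrowdStrike has published: the function name `latest_safe_backup` is hypothetical, the cutoff is assumed to be 4:09 UTC on Friday, July 19, 2024, and Eastern time is modeled as a fixed UTC-4 offset.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional

# Assumption: the buggy update began rolling out at 04:09 UTC on Friday, July 19, 2024.
CUTOFF_UTC = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)

# Assumption: Eastern Daylight Time as a fixed UTC-4 offset (valid in July).
EDT = timezone(timedelta(hours=-4), "EDT")


def latest_safe_backup(backup_times: Iterable[datetime]) -> Optional[datetime]:
    """Return the newest backup taken strictly before the cutoff, or None.

    Every timestamp must be timezone-aware so that backups recorded in
    different zones compare correctly against the UTC cutoff.
    """
    safe = []
    for taken_at in backup_times:
        if taken_at.tzinfo is None:
            raise ValueError("backup timestamps must be timezone-aware")
        if taken_at < CUTOFF_UTC:
            safe.append(taken_at)
    return max(safe, default=None)
```

Converting the cutoff with `CUTOFF_UTC.astimezone(EDT)` gives 00:09 on July 19, which matches the “just after midnight on Friday” description. Comparing strictly (`<`) leaves out any backup stamped exactly at the cutoff, which is the cautious choice.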

If these simpler fixes don’t work, you may need to boot your machines into Safe Mode so you can manually delete the file that’s causing the BSOD errors. For virtual machines, Microsoft recommends attaching the virtual disk to a known-working repair VM so the file can be deleted, then reattaching the virtual disk to its original VM.

The file in question is a CrowdStrike driver located at Windows/System32/Drivers/CrowdStrike/C-00000291*.sys. Once it’s gone, the machine should boot normally and grab a non-broken version of the driver.
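For the repair-VM scenario, where the affected disk is attached to a working machine, the cleanup could be scripted along these lines. This is a minimal sketch rather than an official remediation tool: the function names and the `dry_run` flag are hypothetical, and the script assumes the affected Windows directory is already mounted and readable (that is, any BitLocker volume has already been unlocked).

```python
from pathlib import Path

# File pattern from the published guidance; everything else here is illustrative.
BAD_FILE_PATTERN = "C-00000291*.sys"


def find_matching_files(windows_root: Path) -> list[Path]:
    """List files matching the bad pattern under the given Windows directory."""
    crowdstrike_dir = windows_root / "System32" / "Drivers" / "CrowdStrike"
    if not crowdstrike_dir.is_dir():
        return []  # Nothing to do: CrowdStrike directory not present on this disk.
    return sorted(crowdstrike_dir.glob(BAD_FILE_PATTERN))


def delete_matching_files(windows_root: Path, dry_run: bool = True) -> list[Path]:
    """Return the matching files, deleting them only when dry_run is False."""
    matches = find_matching_files(windows_root)
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches
```

Defaulting to a dry run means a first pass only reports what would be removed. An admin might call `delete_matching_files(Path("E:/Windows"))` to review the list (the `E:` drive letter is just an example of where a repair VM might mount the disk), then repeat the call with `dry_run=False`.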

Deleting that file on each and every one of your affected systems individually is time-consuming enough, but it’s even more time-consuming for customers using Microsoft’s BitLocker drive encryption to protect data at rest. Before you can delete the file on those systems, you’ll need the recovery key that unlocks those encrypted disks and makes them readable (normally, this process is invisible, because the system can just read the key stored in a physical or virtual TPM).

This can cause problems for admins who aren’t using key management to store their recovery keys, since (by design!) you can’t access a drive without its recovery key. Cryptography and infrastructure engineer Tony Arcieri, writing on Mastodon, compared being locked out without that key to a “self-inflicted ransomware attack,” where an attacker encrypts the disks on your systems and withholds the key until they get paid.

And even if you do have a recovery key, your key management server might also be affected by the CrowdStrike bug.

We’ll continue to track recommendations from Microsoft and CrowdStrike about fixes as each company’s respective status pages are updated.

“We understand the gravity of the situation and are deeply sorry for the inconvenience and disruption,” wrote CrowdStrike CEO George Kurtz on X, formerly Twitter. “We are working with all impacted customers to ensure that systems are back up and they can deliver the services their customers are counting on.”

https://arstechnica.com/?p=2038144