Facebook to Pack More Details Into Descriptions of Images for Blind, Visually Impaired People

Facebook revamped its automatic alt text (AAT) experience, which provides detailed descriptions of images for people who are blind or visually impaired.

The social network introduced AAT in April 2016, using artificial intelligence and object recognition to automatically generate descriptions of photos on demand.

Instagram added AAT in November 2018.

AAT is available to people using screen readers, an assistive technology that converts text and other on-screen elements to speech.

Facebook wrote in a Newsroom post Tuesday, “When Facebook users scroll through their News Feed, they find all kinds of content—articles, friends’ comments, event invitations and, of course, photos. Most people are able to instantly see what’s in these images, whether it’s their new grandchild, a boat on a river or a grainy picture of a band onstage. But many users who are blind or visually impaired can also experience that imagery, provided it’s tagged properly with alternative text. A screen reader can describe the contents of these images using a synthetic voice and enable people who are BVI to understand images in their Facebook feed.”

The company’s AAT technology can now recognize more than 1,200 objects and concepts, over 10 times as many as when the tool was introduced in 2016, meaning that more photos will now have descriptions.

Those descriptions are also more detailed, as the updated technology can identify activities, animals, landmarks and other details, with Facebook offering as an example: “May be a selfie of two people, outdoors, the Leaning Tower of Pisa.”

The social network also made it possible to include information about the positional location and relative size of elements in a photo, providing these examples:

Instead of providing a photo description of “may be an image of five people,” it can now specify that there are two people at the center of the photo and three others scattered toward the fringes, implying that the two in the center are the focus.

Instead of describing a landscape as “may be a house and a mountain,” Facebook can now highlight that the mountain is the primary object, based on how large it appears in comparison with the house at its base.

The social network said AAT is available for photos in groups, News Feed and profiles, as well as when images are open in the detail view, where the image appears full-screen and the background is black.

Alt text descriptions are phrased simply, enabling them to be translated into 45 different languages.

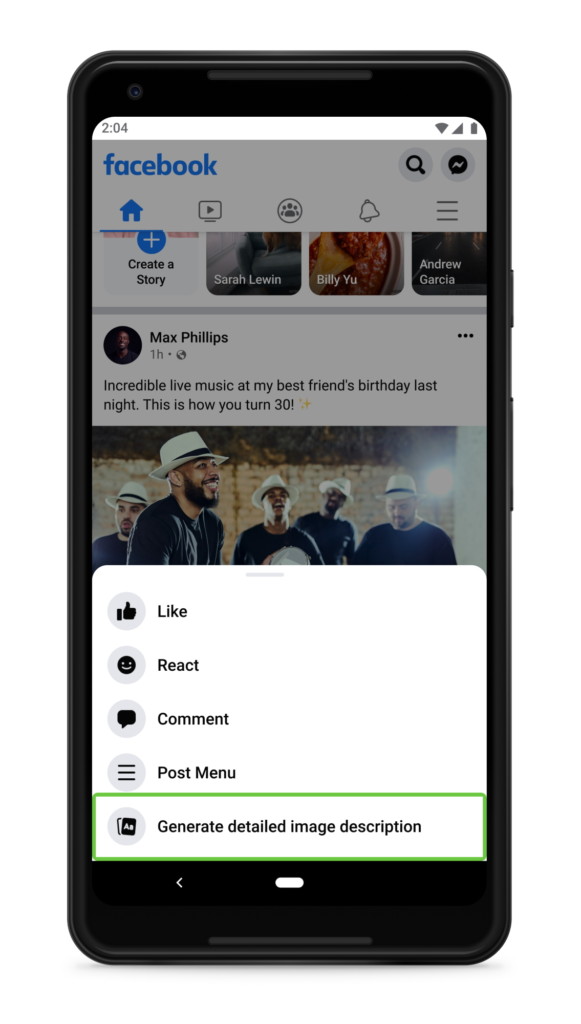

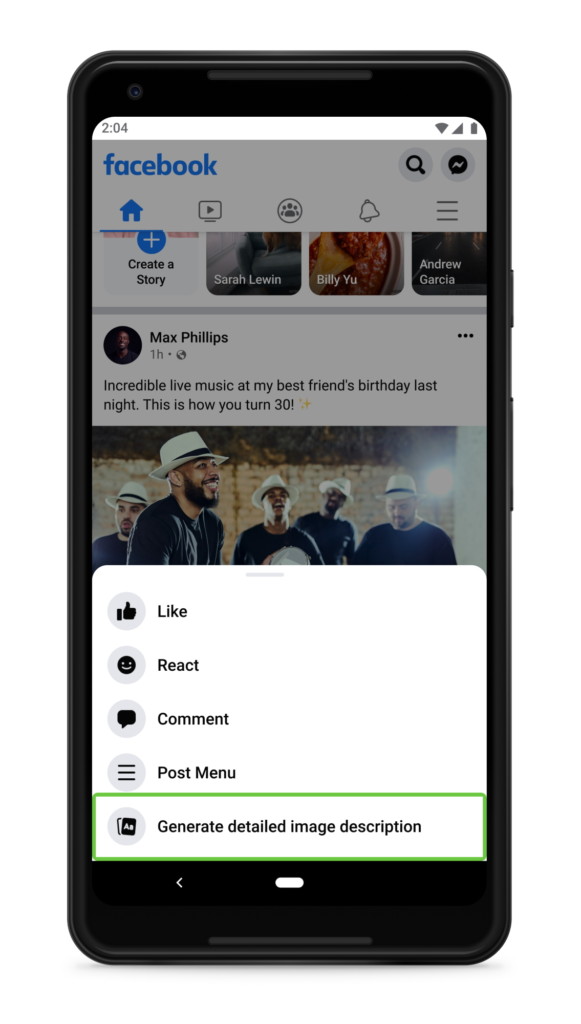

Facebook said it asked users who depend on screen readers for feedback on the types of information they wanted to hear and when they wanted to hear it, finding that they wanted more information when images were from friends and family and less when they were not.

The social network explained what happens when users opt for more detailed descriptions: “A panel is presented that provides a more comprehensive description of a photo’s contents, including a count of the elements in the photo, some of which may not have been mentioned in the default description. Detailed descriptions also include simple positional information—top/middle/bottom or left/center/right—and a comparison of the relative prominence of objects, described as ‘primary,’ ‘secondary’ or ‘minor.’ These words were specifically chosen to minimize ambiguity. Feedback on this feature during development showed that using a word like ‘big’ to describe an object could be confusing because it’s unclear whether the reference is to its actual size or its size relative to other objects in an image. Even a chihuahua looks large if it’s photographed up close.”
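For readers curious how position and prominence labels of this kind could be derived, the following is a minimal Python sketch, not Facebook's implementation. It assumes each detected object arrives as a bounding box expressed in fractions of the image size; the `Box` type, the thirds-based grid and the 25% and 5% area thresholds are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detection result; coordinates are fractions of image size (0 to 1)."""
    label: str
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def position(box: Box) -> tuple[str, str]:
    """Map the box center to a (vertical, horizontal) label on an assumed 3x3 grid."""
    cx = box.x + box.w / 2
    cy = box.y + box.h / 2
    horiz = "left" if cx < 1 / 3 else "right" if cx > 2 / 3 else "center"
    vert = "top" if cy < 1 / 3 else "bottom" if cy > 2 / 3 else "middle"
    return vert, horiz


def prominence(box: Box) -> str:
    """Rank an object by the share of the image it covers (thresholds are assumptions)."""
    area = box.w * box.h
    if area >= 0.25:
        return "primary"
    if area >= 0.05:
        return "secondary"
    return "minor"


def describe(boxes: list[Box]) -> str:
    """Build a detailed description, listing the largest objects first."""
    parts = []
    for box in sorted(boxes, key=lambda b: b.w * b.h, reverse=True):
        vert, horiz = position(box)
        parts.append(f"{box.label} ({prominence(box)}, {vert} {horiz})")
    return f"May be an image of {len(boxes)} elements: " + "; ".join(parts)


if __name__ == "__main__":
    # The landscape example from the article: a large mountain with a small house at its base.
    scene = [
        Box("house", 0.45, 0.80, 0.10, 0.10),
        Box("mountain", 0.10, 0.05, 0.80, 0.70),
    ]
    print(describe(scene))
```

Ranking by share of the frame rather than by real-world size mirrors the point in Facebook's explanation: a label such as "primary" says only how much of the picture an object occupies, which is why a chihuahua photographed up close would rank above a distant mountain.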

https://www.adweek.com/media/facebook-to-pack-more-details-into-descriptions-of-images-for-blind-visually-impaired-people/