Facebook’s new AI teaches itself to see with less human help

Most artificial intelligence is still built on a foundation of human toil. Peer inside an AI algorithm and you’ll find something constructed using data that was curated and labeled by an army of human workers.

Now, Facebook has shown how some AI algorithms can learn to do useful work with far less human help. The company built an algorithm that learned to recognize objects in images using only a small number of labeled examples.

The Facebook algorithm, called Seer (for SElf-supERvised), fed on more than a billion images scraped from Instagram, deciding for itself which objects look alike. Images with whiskers, fur, and pointy ears, for example, were collected into one pile. Then the algorithm was given a small number of labeled images, including some labeled “cats.” It was then able to recognize objects as accurately as an algorithm trained using thousands of labeled examples of each object.
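The “piles” description resembles a classic cluster-then-label recipe. The sketch below illustrates only that intuition and is not Seer's actual method, which trains a large neural network. It assumes each image has already been turned into a 2-D feature vector (the hypothetical `cats` and `dogs` points), groups the unlabeled vectors with k-means, and then names each cluster from a single labeled example. All function names and data are hypothetical.

```python
import math

def nearest(p, centers):
    """Index of the center closest to point p (Euclidean distance)."""
    return min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))

def kmeans(points, k, iters=20):
    """Group unlabeled feature vectors into k clusters ("piles") without any labels."""
    # Deterministic farthest-point initialization: start from the first point,
    # then repeatedly add the point farthest from every center chosen so far.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    for _ in range(iters):
        groups = [[] for _ in centers]
        for p in points:
            groups[nearest(p, centers)].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [
            tuple(sum(xs) / len(g) for xs in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return centers

def name_clusters(centers, labeled):
    """Name each cluster by majority vote over the few labeled examples in it."""
    votes = {}
    for point, label in labeled:
        votes.setdefault(nearest(point, centers), []).append(label)
    return {i: max(set(ls), key=ls.count) for i, ls in votes.items()}

def classify(point, centers, names):
    """Label a new point with the name of its cluster, if that cluster was named."""
    return names.get(nearest(point, centers), "unknown")

# Hypothetical 2-D "features" standing in for what a neural network would extract.
cats = [(0.0, 0.0), (0.5, 0.2), (0.2, 0.6), (0.7, 0.7), (0.1, 0.3)]
dogs = [(5.0, 5.0), (5.4, 4.8), (4.7, 5.3), (5.2, 5.6), (4.9, 4.6)]
centers = kmeans(cats + dogs, k=2)

# Only two labeled examples are needed to name both piles.
names = name_clusters(centers, [((0.3, 0.1), "cat"), ((5.1, 5.1), "dog")])
print(classify((0.4, 0.4), centers, names))  # cat
print(classify((4.8, 5.2), centers, names))  # dog
```

Once the piles exist, one label per pile is enough to name everything in it. That is the intuition behind needing so few labeled images, though in a real system the hard part is learning features good enough for the piles to be meaningful.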

“The results are impressive,” says Olga Russakovsky, an assistant professor at Princeton University who specializes in AI and computer vision. “Getting self-supervised learning to work is very challenging, and breakthroughs in this space have important downstream consequences for improved visual recognition.”

Russakovsky says it is notable that the Instagram images were not hand-picked to make independent learning easier.

The Facebook research is a landmark for an AI approach known as “self-supervised learning,” says Facebook’s chief scientist, Yann LeCun.

LeCun helped pioneer the machine learning approach known as deep learning, which involves feeding data to large artificial neural networks. Roughly a decade ago, deep learning emerged as a better way to program machines to do all sorts of useful things, such as image classification and speech recognition.

But LeCun says the conventional approach, which requires “training” an algorithm by feeding it lots of labeled data, simply won’t scale. “I’ve been advocating for this whole idea of self-supervised learning for quite a while,” he says. “Long term, progress in AI will come from programs that just watch videos all day and learn like a baby.”

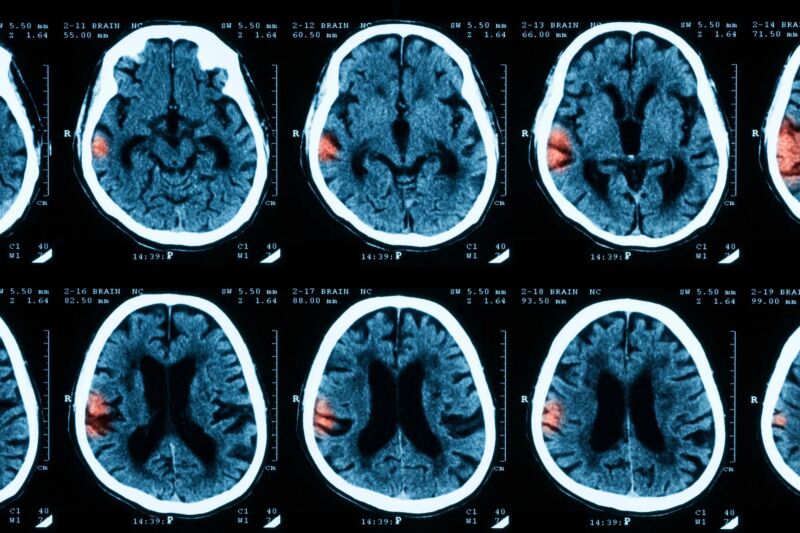

LeCun says self-supervised learning could have many useful applications, for instance, learning to read medical images without needing to label so many scans and X-rays. He says a similar approach is already being used to auto-generate hashtags for Instagram images. And he says the Seer technology could be used at Facebook to match ads to posts or to help filter out undesirable content.

The Facebook research builds upon steady progress in tweaking deep learning algorithms to make them more efficient and effective. Self-supervised learning has previously been used to translate text from one language to another, but it has been more difficult to apply to images than to words. LeCun says the research team developed a new way for algorithms to learn to recognize images even when one part of the image has been altered.
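The article does not spell out the training objective. As far as we know, the published Seer work builds on SwAV, a clustering method that shows the network two differently altered copies (“views”) of each image, assigns each view to learned cluster prototypes, and trains the network to predict one view's assignment from the other. The toy sketch below computes only that swapped-prediction loss, assuming hand-written 2-D feature vectors in place of a neural network. The function names, data, and the `eps` and `temperature` values are illustrative assumptions, and no training step is shown.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def scores(features, prototypes):
    """Similarity (dot product) of every feature vector with every prototype."""
    return [[sum(a * b for a, b in zip(f, c)) for c in prototypes] for f in features]

def sinkhorn(score_rows, eps=0.05, iters=3):
    """Turn scores into soft cluster assignments ("codes") that spread the
    batch evenly across prototypes, so one cluster cannot absorb everything."""
    B, K = len(score_rows), len(score_rows[0])
    q = [[math.exp(s / eps) for s in row] for row in score_rows]
    total = sum(map(sum, q))
    q = [[x / total for x in row] for row in q]
    for _ in range(iters):
        # Give each prototype (column) the same total mass, 1/K ...
        col = [sum(q[b][k] for b in range(B)) for k in range(K)]
        q = [[q[b][k] / col[k] / K for k in range(K)] for b in range(B)]
        # ... then give each image (row) the same total mass, 1/B.
        q = [[x / sum(row) / B for x in row] for row in q]
    # Rescale so each image's code is a probability distribution.
    return [[x * B for x in row] for row in q]

def softmax(row, temperature=0.1):
    """Predicted cluster probabilities from one image's scores."""
    m = max(row)
    exps = [math.exp((s - m) / temperature) for s in row]
    z = sum(exps)
    return [e / z for e in exps]

def swapped_loss(view_a, view_b, prototypes):
    """Cross-entropy of predicting view B's code from view A's scores, and vice versa."""
    protos = [normalize(c) for c in prototypes]
    sa = scores([normalize(v) for v in view_a], protos)
    sb = scores([normalize(v) for v in view_b], protos)
    qa, qb = sinkhorn(sa), sinkhorn(sb)
    total = 0.0
    for i in range(len(view_a)):
        pa, pb = softmax(sa[i]), softmax(sb[i])
        total -= sum(q * math.log(p) for q, p in zip(qb[i], pa))
        total -= sum(q * math.log(p) for q, p in zip(qa[i], pb))
    return total / (2 * len(view_a))

# Hypothetical 2-D features for four images, plus two prototypes ("piles").
prototypes = [[1.0, 0.0], [0.0, 1.0]]
originals = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]]
altered = [[1.0, 0.2], [0.8, 0.1], [0.2, 0.9], [0.1, 1.0]]   # same images, slightly changed
mismatched = [altered[2], altered[3], altered[0], altered[1]]  # paired with the wrong images
print(swapped_loss(originals, altered, prototypes) < swapped_loss(originals, mismatched, prototypes))  # True
```

The loss is low when an altered copy lands in the same pile as its original and high when it does not. Minimizing it pushes a network toward features that ignore the alterations, without needing any human labels.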

Facebook will release some of the technology behind Seer but not the algorithm itself because it was trained using Instagram users’ data.

Aude Oliva, who leads MIT’s Computational Perception and Cognition lab, says the approach “will allow us to take on more ambitious visual recognition tasks.” But Oliva says the sheer size and complexity of cutting-edge AI algorithms like Seer also pose problems. Such algorithms can have billions or trillions of neural connections, or parameters, many more than a conventional image-recognition algorithm with comparable performance. They require enormous amounts of computational power, straining the available supply of chips.

Alexei Efros, a professor at UC Berkeley, says the Facebook paper is a good demonstration of an approach that he believes will be important to advancing AI—having machines learn for themselves by using “gargantuan amounts of data.” And as with most progress in AI today, he says, it builds upon a series of other advances that emerged from the same team at Facebook as well as other research groups in academia and industry.

This story originally appeared on wired.com.

https://arstechnica.com/?p=1747769