How Experts Are Attempting to Combat Artificial Intelligence’s Racist Tendencies

Artificial intelligence might be the cutting edge of futuristic tech, but by its nature, it’s also rooted in the past and present.

The technology learns from human behavior, which, as hundreds of years of history show, often veers into racism, misogyny and many other forms of inequality. So, as conversations about confronting systemic discrimination have grown louder in recent months across institutions and industries, they have also become central debates in the world of AI.

The consequences of this discussion aren’t just academic. They’ll play out in the algorithms that increasingly help decide the course of people’s lives, whether through job application filters, loan approval software or facial recognition.

The key question is whether an AI that’s trained on real human data can be taught not to reproduce racism.

Many in the industry say it can, but not when it’s made behind closed doors by engineers who are overwhelmingly male and disproportionately white. They say the industry needs to change from the ground up.

“We all have a shared responsibility within the field to ensure that present and historical biases are not exacerbated by new innovations and that there is sincere and substantive inclusion of the concerns and needs of impacted communities,” said Google DeepMind research scientist Shakir Mohamed, who recently published a widely read paper on decolonizing AI. “This means that the ideas we discuss in the paper will take action from more than just an individual company, industry or government.”

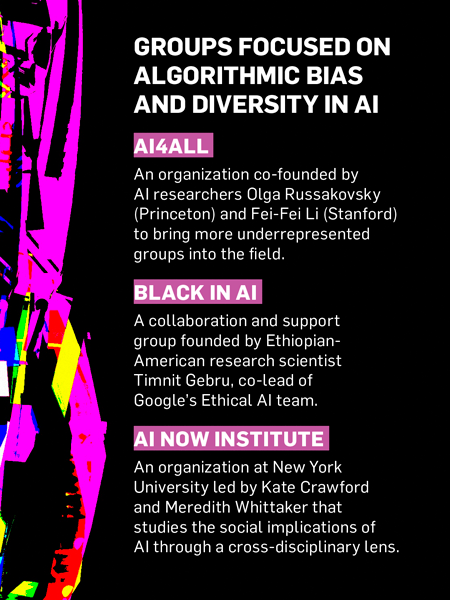

A particularly heated debate flared recently between some of the most prominent names in the field over an image editing tool that whitewashed the faces of people of color. On one side, Facebook’s chief AI scientist Yann LeCun argued that the issue was primarily rooted in biases within the underlying data set. On the other, Timnit Gebru, a co-lead of Google’s Ethical AI team and co-founder of the group Black in AI, pushed back, arguing that the problem wasn’t so simple.

“You can’t just reduce harms caused by ML [machine learning] to data set bias,” Gebru told LeCun on Twitter. “For once, listen to us people from marginalized communities and what we tell you.”

Indeed, a singular focus on data sets ignores much of the often subtle decision-making that occurs throughout the development process, according to Tulsee Doshi, product lead on Google’s ML Fairness and Responsible AI team.

“I think, most critically and what often gets overlooked, is that this isn’t just about coming up with new product ideas or having diverse points of feedback on a product,” Doshi said. “Every step in the product development process is a decision. What data did you look at? Which users did we talk to? … It’s often the little decisions in that process that add up to missed experiences and opportunities for underrepresented users.”

A growing movement of activists, technologists and academics says a holistic approach to algorithmic bias must involve democratizing the development of AI systems so that the process accounts for input from the people who will ultimately be affected by them.

“Even the best-intentioned AI developers who have not personally been impacted by these inequities may not notice them in the systems they’re designing,” said Tess Posner, CEO of AI diversity group AI4All. “Leaving potentially life-altering technology development up to individuals who have not been properly trained to consider ethics or social impacts is dangerous.”

For recruiting software company Leoforce, which bills its AI-powered platform Arya as a way for employers to avoid implicit biases in hiring choices, counteracting data tinged by entrenched societal attitudes is a constant battle.

“There’s some amount of historic biases that existed in society that will still creep in,” said Leoforce founder and CEO Madhu Modugu. “You have a particular type of job that people of a certain gender or people of a certain ethnicity have worked historically. So, the AI itself is more biased. … And then there is the human bias that happens after that.”

Modugu said the company’s antibias measures include a selection protocol that proactively counters certain biases, training for hiring-manager clients on the various ways biases manifest, and a variety of algorithms designed to capture nuances in how employees perform after they are hired and in unconventional career paths, which can lessen the power of gatekeeping institutions.

https://www.adweek.com/digital/how-experts-are-attempting-to-combat-artificial-intelligences-racist-tendencies/