IonQ’s new ions have lower error, great performance on a new benchmark

Back in 2020, we talked to the CEO of a quantum computing startup called IonQ that uses trapped ions for its qubits. At the time, the company had just introduced a quantum processor that could host 32 qubits with impressive fidelity, meaning they were much less likely to produce an error when being manipulated or read out. Better yet, the CEO suggested there was an obvious path to doubling the qubit count every eight months for the next few years.

By that metric, we should be seeing a high-fidelity, 128-qubit machine from the company about now. Instead, it chose to make a significant change to the underlying technology and rethought how it viewed scaling its processors. To find out what motivated the changes, we talked with the company’s CTO, Duke University’s Jungsang Kim, about the decision and where IonQ’s hardware stands in the larger landscape.

If you’re interested in IonQ’s hardware in particular, the next two sections are for you; if you care about the state of the quantum computing landscape more generally, you can skip past them.

A different element

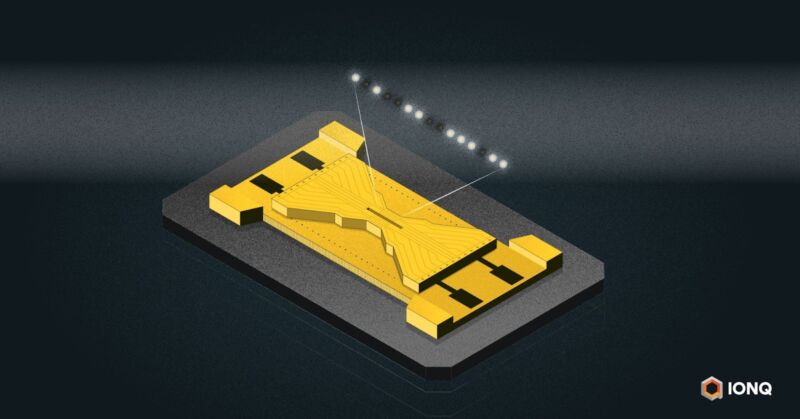

IonQ, as its name implies, uses a technology called trapped ions for its qubits. These ions are held in a location called a trap by carefully tuned electromagnetic fields and can be addressed by a set of lasers. These lasers can set the state of the qubit, perform manipulations on it, and then read out the end state.

The advantage of this system is that any two ions can be entangled with each other. This is in contrast to transmons, qubits built from superconducting wiring, in which the connections among the qubits are static, fixed by the wiring put in place during manufacturing. (Transmons are used by companies like Google, IBM, and Rigetti.) This has important implications that we’ll come back to.

In earlier versions of its hardware, IonQ was using ytterbium ions, but these came with one big drawback. “One of the technical challenges with working with ytterbium is a lot of the laser technology requires ultraviolet light,” Kim told Ars. “When you try to scale and try to run fast and use a lot of power, this UV adds a lot of technical complications to what we can do.”

So the company decided to change elements, switching to barium ions instead. With that change, all of the lasers needed to get quantum computing to work were in visible wavelengths, which means lower power and more widely available off-the-shelf hardware. Kim said that, aside from the lasers and some optical hardware needed to get the light in the right places, nothing else had to be changed to get barium-based qubits to work.

High-quality SPAM

The company’s announcement today focuses on a specific aspect of the performance of these barium-based qubits: state preparation and measurement, or SPAM. SPAM errors are problems that occur when the system is initialized or when its final state is read out; they do not include errors caused by operations performed on the qubits (Kim said the company would have more to say on the latter soon). In short, if you have a problem with SPAM, you won’t be able to rely on any solutions delivered by the computing hardware.

With the barium-based qubits, SPAM errors have dropped by an order of magnitude; problems only crop up in 0.04 percent of the tests.

That’s an extremely low error rate compared to most qubit technologies. And Kim pointed out that it’s quite a significant advance over the company’s previous SPAM error rate of 0.5 percent. At the earlier error rate, “by the time you get to 100 qubits, just the readout is going to give you 60 percent error,” he said, “so it just undermines your performance as you go to bigger and larger circuits.” The improved qubits would drop the readout error rate on 100-qubit machines to only four percent.
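To see how a small per-qubit SPAM error compounds as the qubit count grows, here is a rough back-of-the-envelope sketch. It assumes that SPAM errors on different qubits are independent and identical, which is a simplification of ours and not something IonQ has stated; the function name `readout_error` is also just illustrative. Under that assumption, the 0.5 percent rate leaves only about a 61 percent chance of a clean readout on 100 qubits, while the 0.04 percent rate gives roughly a four percent chance of at least one error.

```python
def readout_error(per_qubit_error: float, n_qubits: int) -> float:
    """Chance that at least one of n_qubits suffers a SPAM error.

    Assumption (ours, for illustration): errors on different qubits
    are independent and share the same per-qubit rate.
    """
    clean_readout = (1.0 - per_qubit_error) ** n_qubits
    return 1.0 - clean_readout


if __name__ == "__main__":
    rates = [
        ("ytterbium, 0.5 percent", 0.005),
        ("barium, 0.04 percent", 0.0004),
    ]
    for label, rate in rates:
        err = readout_error(rate, n_qubits=100)
        print(f"{label}: {err:.1%} chance of at least one SPAM error, "
              f"{1 - err:.1%} chance of a clean readout")
```

Changing `n_qubits` shows why the lower rate matters more as machines grow: the chance of a clean readout shrinks exponentially with the number of qubits.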

It’s that sort of difference that has the company rethinking its approach to performance and scaling of its systems.

https://arstechnica.com/?p=1837989