Is my co-worker AI? Bizarre product reviews leave Gannett staff wondering

A smattering of articles recently discovered on Reviewed, Gannett’s product reviews site, is prompting an increasingly common debate: was this made with artificial intelligence tools or by a human?

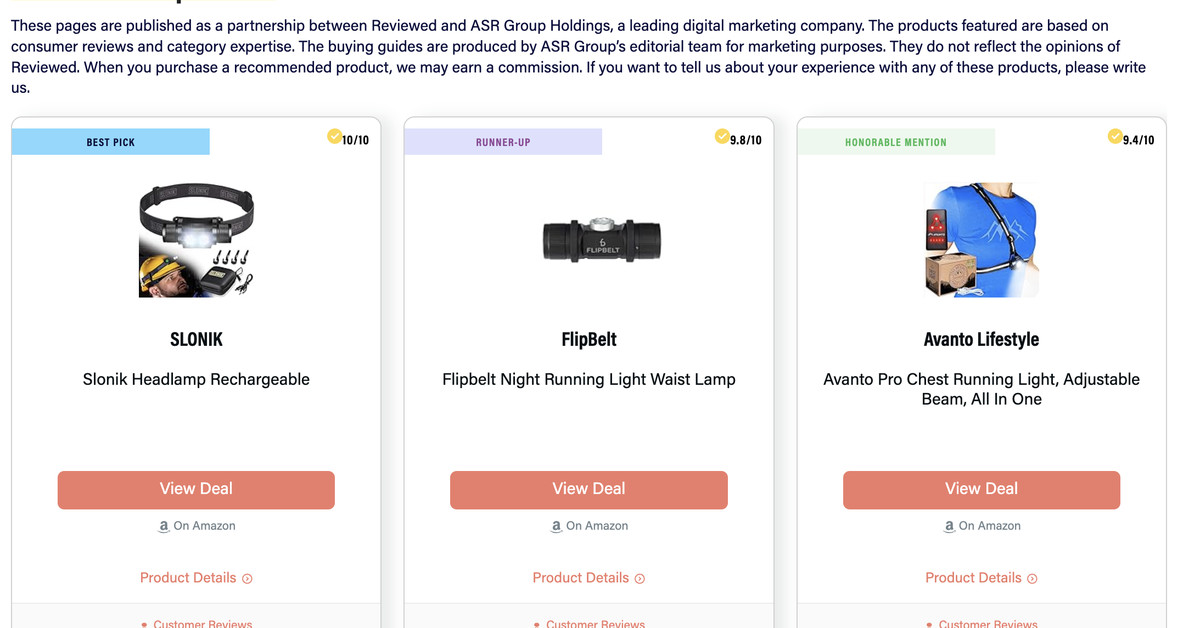

The writing is stilted, repetitive, and at times nonsensical. “Before buying a product, you need to first consider the fit, light settings, and additional features that each option offers,” reads an article titled “Best waist lamp of 2023.” “Before you purchase Swedish Dishcloths, there are a few questions you may want to ask yourself,” says another. On each page, there is a section called “Product Pros/Cons” that, instead of actually offering benefits and drawbacks, just has one list with a handful of features. The pages are loaded with low-resolution images, infographics, and dozens of links to Amazon product listings. (At the time of this writing, the articles appear to have been deleted.)

It’s the type of content readers have come to associate with AI, and this wasn’t Gannett’s first brush with controversy over it. In August, the company ran a botched “experiment” with using AI to generate sports articles, producing reams of stories repeating awkward phrases like “close encounters of the athletic kind.” Gannett paused the use of the tool and said it would reevaluate tools and processes. But on Tuesday, the NewsGuild of New York, the union representing Reviewed workers, shared screenshots of the shopping articles that staff had stumbled upon, calling them the latest attempt by Gannett to use AI tools to produce content.

But Gannett insists the new “reviews” weren’t created with AI. Instead, the content was created by “third-party freelancers hired by a marketing agency partner,” said Lark-Marie Anton, Gannett’s chief communications officer.

“The pages were deployed without the accurate affiliate disclaimers and did not meet our editorial standards. Updates have been published [on Tuesday],” Anton told The Verge in an email. In other words, the articles are an affiliate marketing play produced by another company’s workers.

A new disclaimer on the articles reads, “These pages are published as a partnership between Reviewed and ASR Group Holdings, a leading digital marketing company. The products featured are based on consumer reviews and category expertise. The buying guides are produced by ASR Group’s editorial team for marketing purposes.”

Still, there’s something strange about the reviews. According to old job listings, ASR Group also uses the name AdVon Commerce — a company that specializes in “ML / AI solutions for E Commerce,” per its LinkedIn page. An AdVon Commerce employee listed on the Reviewed website says on LinkedIn that they “mastered the art of prompting and editing AI generative text” and that they “organize and instruct a team of 15 copywriters during the time of transition to ChatGPT and AI generative text.”

What’s more, the writers credited on Reviewed are hard to track down — some of them don’t appear to have other published work or LinkedIn pages. In posts on X, Reviewed staff wondered, “Are these people even real?”

When asked about the marketing company and its use of AI tools, Anton said Gannett confirmed the content was not created using AI. AdVon Commerce didn’t respond to a request for comment.

“It really dilutes what we do”

The dustup with the maybe-AI-maybe-not-AI stories comes just a few weeks after unionized staff at Reviewed walked off the job to secure dates for bargaining sessions with Gannett. In an emailed statement, the Reviewed union said it would raise the issue during its first round of bargaining in the coming days.

“It’s an attempt to undermine and replace members of the union whether they’re using AI, subcontractors of a marketing firm, or some combination of both. In the immediate term, we demand that management unpublish all of these articles and issue a formal apology,” the statement reads.

“These posts undermine our credibility, they undermine our integrity as reporters,” Michael Desjardin, a senior staff writer at Reviewed, told The Verge. Desjardin says he believes the publishing of the reviews is retaliation for the earlier strike.

According to Desjardin, Gannett leadership didn’t notify staff that the articles were being published, and staff only found out when they came across the posts on Friday. Staffers noticed typos in headlines; odd, machine-like phrasing; and other “tell tale signs” that wouldn’t meet journalists’ editorial standards.

“Myself and the rest of the folks in the unit feel like — if this is indeed what’s going on — it really dilutes what we do,” Desjardin told The Verge of Gannett’s alleged use of AI tools. “It’s just a matter of this is existing on the same platform as where we publish.”

The fuzziness between what’s AI-generated and what’s created by humans has been a recurring theme of 2023, especially at media companies. A similar dynamic emerged at CNET earlier this year, kicked off by AI-generated stories being published right next to journalists’ own work. Staff had few details about how the AI-generated articles were produced or fact-checked. More than half of the articles contained errors, and CNET did not clearly disclose that AI was used until after media reports.

“This stuff to me looks like it’s designed to camouflage itself, to just blend in with what we do every day,” Desjardin says of the Reviewed content.

https://www.theverge.com/2023/10/26/23931530/gannett-ai-product-reviews-site-reviewed-union-newsguild