Meta Reveals Latest Updates to Teen Safety Measures on Facebook, Instagram

Meta vice president and global head of safety Antigone Davis detailed several updates Monday geared toward keeping teens safe on Facebook and Instagram.

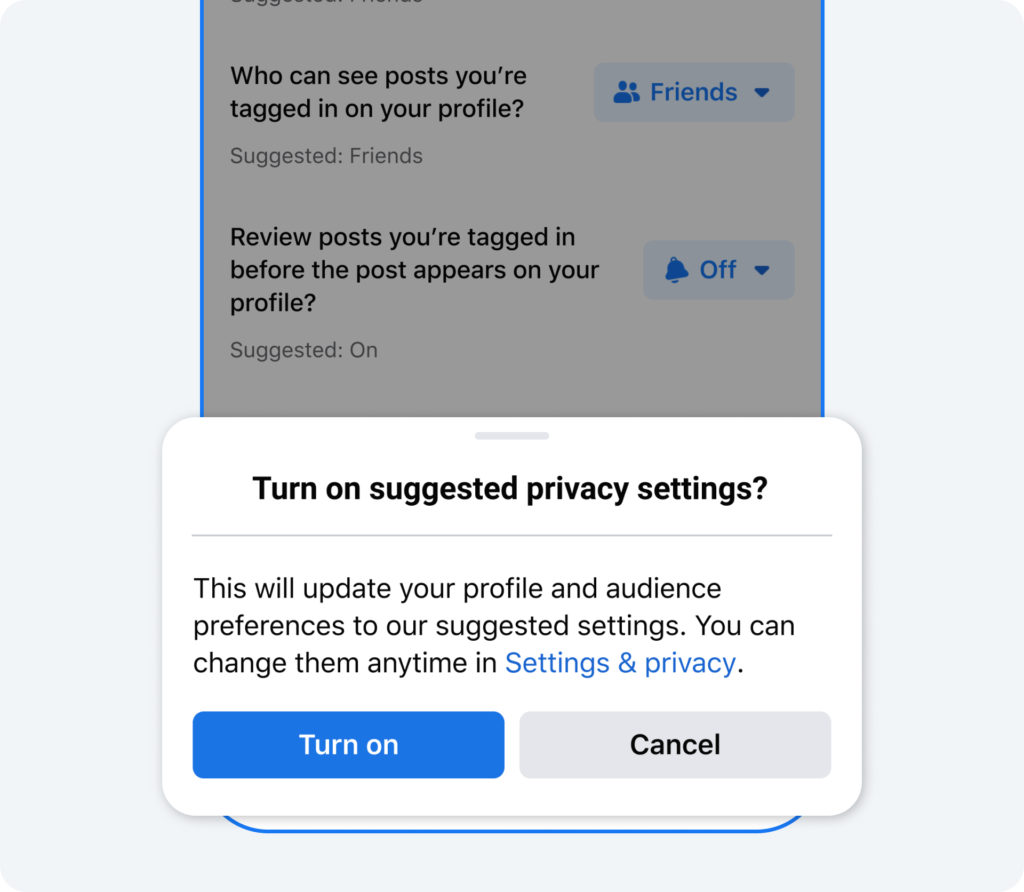

Mirroring a step the company took on Instagram in July 2021, anyone under the age of 16—under 18 in certain countries—who joins Facebook will be defaulted into more private settings, and users in that age group who are already on the platform will be encouraged to make their settings more private for categories such as:

- Who can see their friends list.

- Who can see the people, pages and lists they follow.

- Who can see posts they are tagged in on their profile.

- Reviewing posts they are tagged in before those posts appear on their profile.

- Who can comment on their public posts.

Meta is working with the National Center for Missing and Exploited Children on a global platform for teens who are worried about intimate images they created being shared to public platforms without their consent.

Davis explained in a blog post Monday, “We’ve been working closely with NCMEC, experts, academics, parents and victim advocates globally to help develop the platform and ensure that it responds to the needs of teens so that they can regain control of their content in these horrific situations. We’ll have more to share on this new resource in the coming weeks.”

The company is also collaborating with Thorn and its NoFiltr brand on educational materials that help reduce the shame and stigma surrounding intimate images and empower teens to seek help and take back control if they’ve shared those images or are experiencing sextortion.

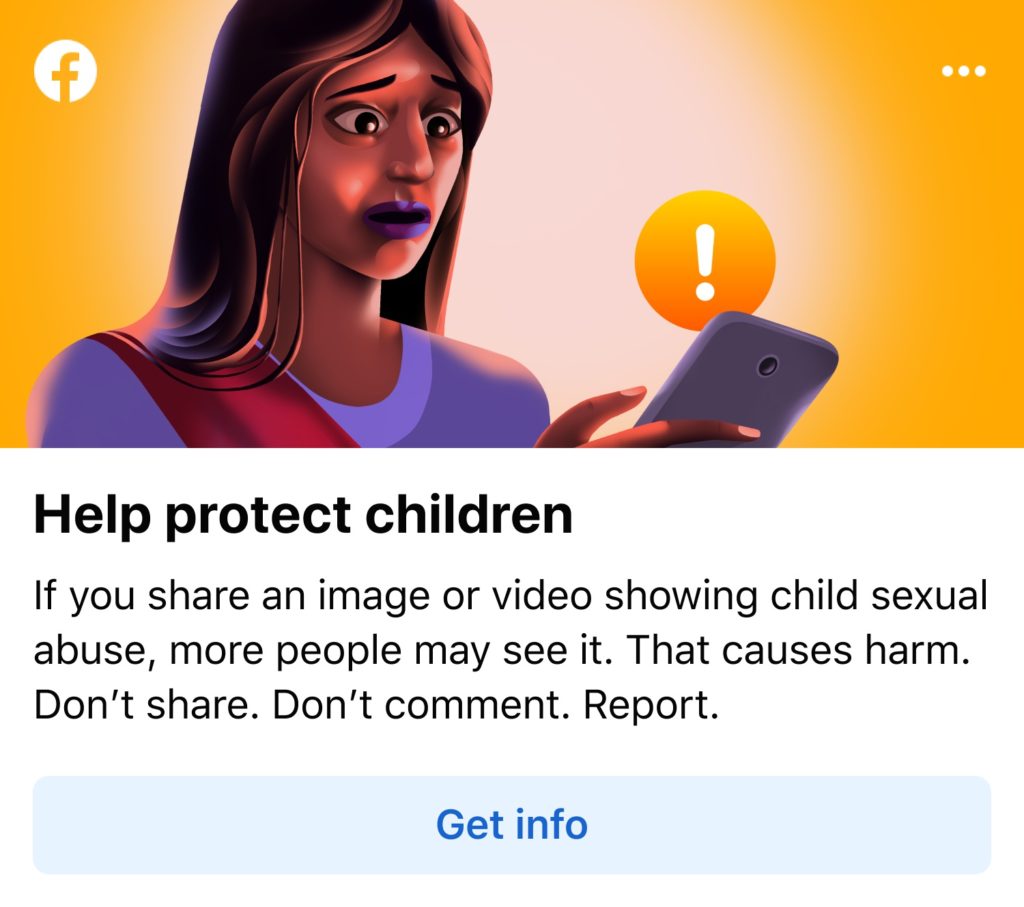

Davis wrote, “We found that more than 75% of people that we reported to NCMEC for sharing child exploitative content shared the content out of outrage, poor humor or disgust, and with no apparent intention of harm. Sharing this content violates our policies, regardless of intent,” adding that a new public-service announcement campaign is aimed at urging people to stop and think before resharing images of this sort and to report them to Meta instead.

She also pointed people seeking information or support to the Stop Sextortion Hub on the Facebook Safety Center and to the platform’s guide for parents on how to talk to teens about intimate images.

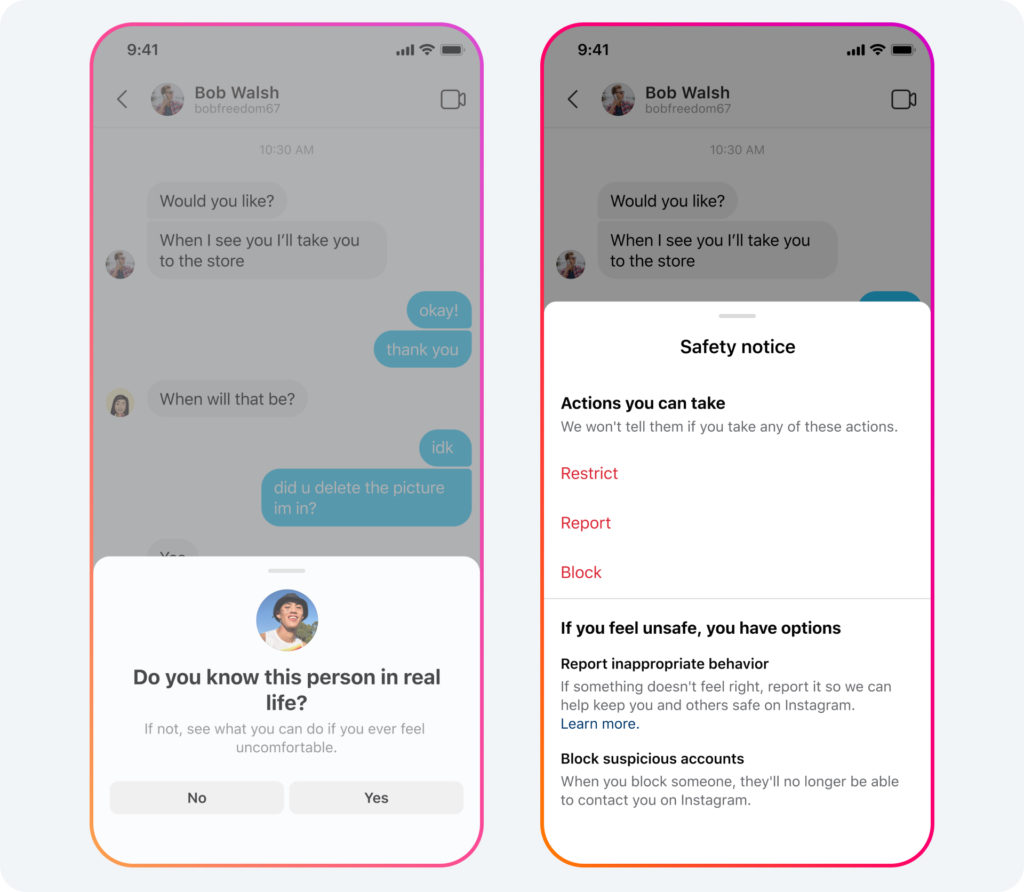

Meta began testing new ways to protect teens from messaging suspicious adults they aren’t connected to, such as an adult who may have recently been blocked or reported by a young person. The company said those accounts won’t be shown in People You May Know recommendations, and it is experimenting with removing the message button on teens’ Instagram accounts altogether when those accounts are viewed by suspicious adults.

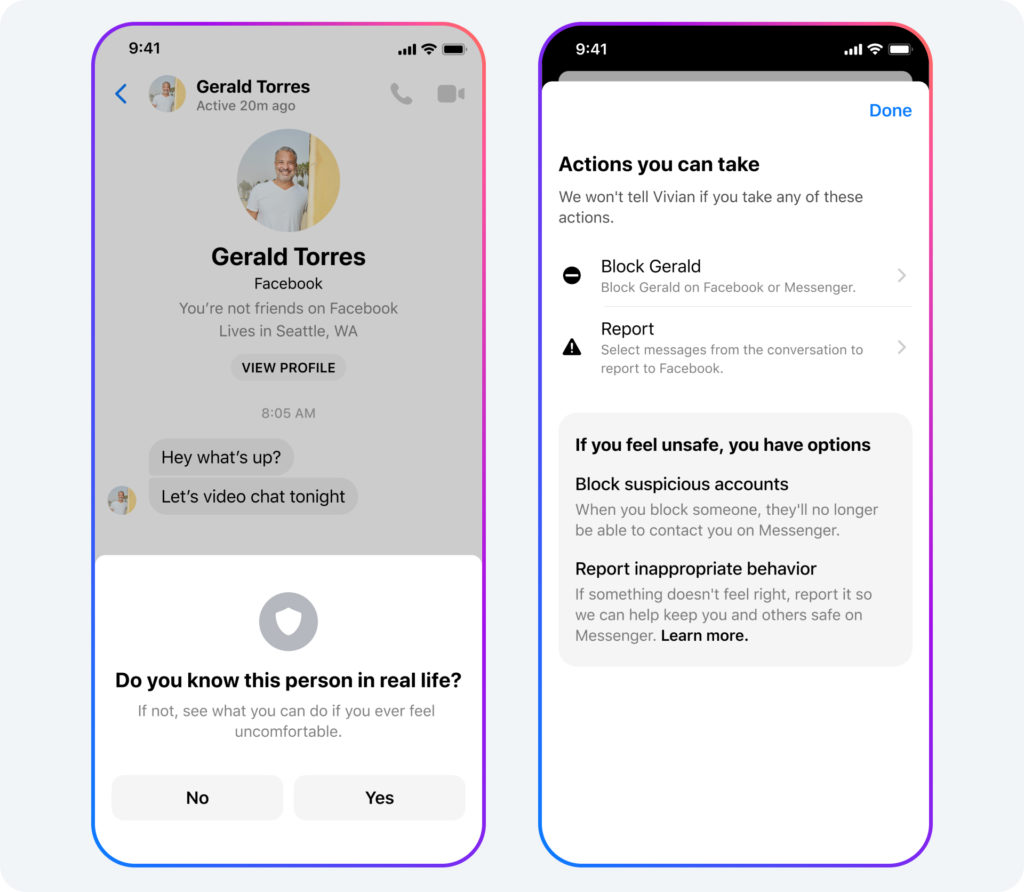

Finally, Meta is introducing new notifications that encourage teens on its platforms to use the safety tools that are available to them, such as prompting teens to report accounts after they block them and sending them safety notices with information on how to navigate inappropriate messages from adults.

https://www.adweek.com/media/meta-reveals-latest-updates-to-teen-safety-measures-on-facebook-instagram/