Home Assistant has a new foundation and a goal to become a consumer brand

Home Assistant, until recently, has been a wide-ranging and hard-to-define project.

The open smart home platform is an open source OS you can run anywhere that aims to connect all your devices together. But it’s also bespoke Raspberry Pi hardware, in Yellow and Green. It’s entirely free, but it also receives funding through a private cloud services company, Nabu Casa. It contains the tiny-board project ESPHome and other interconnected bits. It has wide-ranging voice assistant ambitions, but it doesn’t want to be Alexa or Google Assistant. Home Assistant is a lot.

After an announcement this weekend, however, Home Assistant’s shape is a bit easier to draw out. All of the project’s ambitions now fall under the Open Home Foundation, a non-profit organization that contains Home Assistant and more than 240 related bits. Its mission statement is refreshing, and refreshingly honest about the state of modern open source projects.

“We’ve done this to create a bulwark against surveillance capitalism, the risk of buyout, and open-source projects becoming abandonware,” the Open Home Foundation states in a press release. “To an extent, this protection extends even against our future selves—so that smart home users can continue to benefit for years, if not decades. No matter what comes.” Along with keeping Home Assistant funded and secure from buy-outs or mission creep, the foundation intends to help fund and collaborate with external projects crucial to Home Assistant, like Z-Wave JS and Zigbee2MQTT.

Home Assistant’s ambitions don’t stop with money and board seats, though. The foundation aims to “be an active political advocate” in the smart home field, toward three primary principles:

- Data privacy, which means devices with local-only options, and cloud services with explicit permissions

- Choice in using devices with one another through open standards and local APIs

- Sustainability by repurposing old devices and appliances beyond company-defined lifetimes

Notably, individuals cannot contribute modest-size donations to the Open Home Foundation. Instead, the foundation asks supporters to purchase a Nabu Casa subscription or contribute code or other help to its open source projects.

From a few lines of Python to a foundation

Around 2013, Home Assistant founder Paulus Schoutsen wanted better control of his Philips Hue smart lights and wrote a Python script to get it. Thousands of volunteer contributions later, Home Assistant was becoming a real thing. Schoutsen and other volunteers inevitably started to feel overwhelmed by the “free time” coding and urgent bug fixes. So Schoutsen, Ben Bangert, and Pascal Vizeli founded Nabu Casa, a for-profit firm intended to stabilize funding and paid work on Home Assistant.

Through that stability, Home Assistant could direct full-time work to various projects, take ownership of things like ESPHome, and officially contribute to open standards like Zigbee, Z-Wave, and Matter. But Home Assistant was “floating in a kind of undefined space between a for-profit entity and an open-source repository on GitHub,” according to the foundation. The Open Home Foundation creates the formal home for everything that needs it and makes Nabu Casa a “special, rules-bound inaugural partner” to better delineate the business and non-profit sides.

Home Assistant as a Home Depot box?

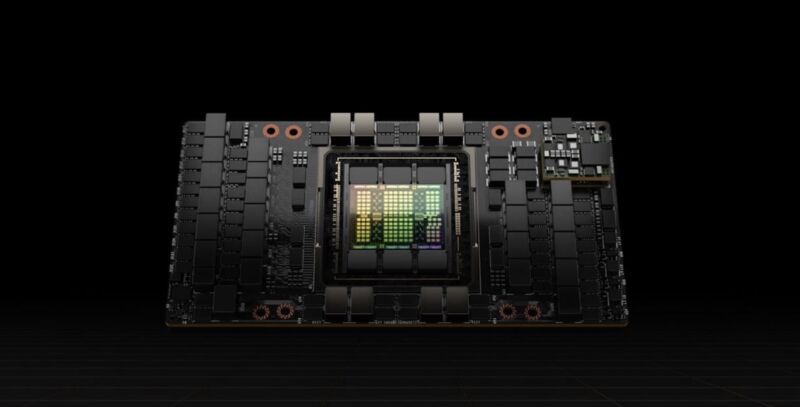

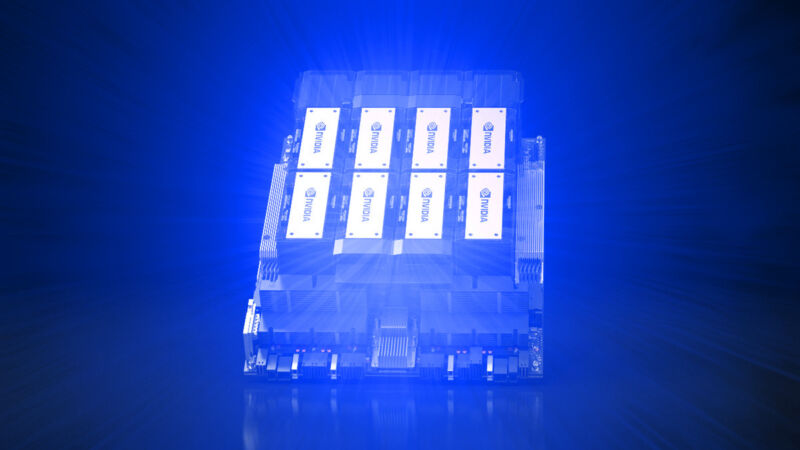

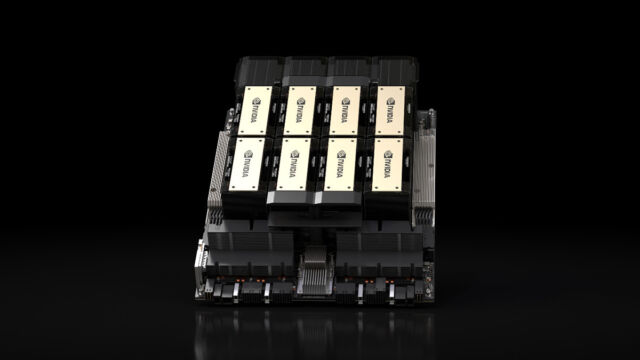

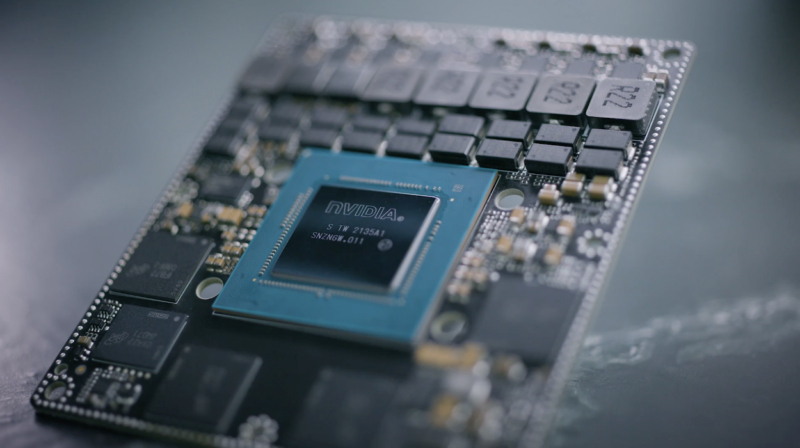

In an interview with The Verge’s Jennifer Pattison Tuohy, and in a State of the Open Home stream over the weekend, Schoutsen also suggested that the foundation gives Home Assistant a more stable footing from which to compete against the bigger names in smart homes, like Amazon, Google, Apple, and Samsung. The Home Assistant Green starter hardware will sell on Amazon this year, along with HA-badged extension dongles. A dedicated voice control hardware device that enables a local voice assistant is coming before year’s end. Home Assistant is partnering with Nvidia and its Jetson edge AI platform to help make local assistants better, faster, and more easily integrated into a locally controlled smart home.

That also means Home Assistant is growing as a brand, not just a product. Home Assistant’s “Works With” program is picking up new partners and has broad ambitions. “We want to be a consumer brand,” Schoutsen told Tuohy. “You should be able to walk into a Home Depot and be like, ‘I care about my privacy; this is the smart home hub I need.’”

Where does this leave existing Home Assistant enthusiasts, who are probably familiar with the feeling of a tech brand pivoting away from them? It’s hard to imagine Home Assistant dropping its advanced automation tools and YAML-editing offerings entirely, though Schoutsen suggested he could imagine a split between regular and “advanced” users down the line. Either way, Home Assistant’s open nature, and now its foundation, should ensure that people will always be able to remix, reconfigure, or re-release the version of smart home choice they prefer.

https://arstechnica.com/?p=2019041