“We are giddy”—interviewing Apple about its Mac silicon revolution

Some time ago, in an Apple campus building, a group of engineers got together. Isolated from others in the company, they took the guts of old MacBook Air laptops and connected them to their own prototype boards with the goal of building the very first machines that would run macOS on Apple’s own, custom-designed, ARM-based silicon.

To hear Apple’s Craig Federighi tell the story, it sounds a bit like a callback to Steve Wozniak in a Silicon Valley garage so many years ago. And this week, Apple finally took the big step that those engineers were preparing for: the company released the first Macs running on Apple Silicon, beginning a transition of the Mac product line away from Intel’s CPUs, which have been industry-standard for desktop and laptop computers for decades.

In a conversation shortly after the M1 announcement with Apple SVP of Software Engineering Craig Federighi, SVP of Worldwide Marketing Greg Joswiak, and SVP of Hardware Technologies Johny Srouji, we learned that—unsurprisingly—Apple has been planning this change for many, many years.

Ars spoke at length with these execs about the architecture of the first Apple Silicon chip for Macs (the Apple M1). While we had to get in a few inquiries about the edge cases of software support, there was really one big question on our minds: What are the reasons behind Apple’s radical change?

Why? And why now?

We started with that big idea: “Why? And why now?” We got a very Apple response from Federighi:

The Mac is the soul of Apple. I mean, the Mac is what brought many of us into computing. And the Mac is what brought many of us to Apple. And the Mac remains the tool that we all use to do our jobs, to do everything we do here at Apple. And so to have the opportunity… to apply everything we’ve learned to the systems that are at the core of how we live our lives is obviously a long-term ambition and a kind of dream come true.

“We want to create the best products we can,” Srouji added. “We really needed our own custom silicon to deliver truly the best Macs we can deliver.”

Apple began using x86 Intel CPUs in 2006 after it seemed clear that PowerPC (the previous architecture for Mac processors) was reaching the end of the road. For the first several years, those Intel chips were a massive boon for the Mac: they enabled interoperability with Windows and other platforms, making the Mac a much more flexible computer. They allowed Apple to focus more on increasingly popular laptops in addition to desktops. They also made the Mac more popular overall, in parallel with the runaway success of the iPod, and soon after, the iPhone.

And for a long time, Intel’s performance was top-notch. But in recent years, Intel’s CPU roadmap has been less reliable, both in terms of performance gains and consistency. Mac users took notice. But all three of the men we spoke with insisted that wasn’t the driving force behind the change.

“This is about what we could do, right?” said Joswiak. “Not about what anybody else could or couldn’t do.”

“Every company has an agenda,” he continued. “The software company wishes the hardware companies would do this. The hardware companies wish the OS company would do this, but they have competing agendas. And that’s not the case here. We had one agenda.”

When the decision was ultimately made, the circle of people who knew about it was initially quite small. “But those people who knew were walking around smiling from the moment we said we were heading down this path,” Federighi remembered.

Srouji described Apple as being in a special position to make the move successfully: “As you know, we don’t design chips as merchants, as vendors, or generic solutions—which gives the ability to really tightly integrate with the software and the system and the product—exactly what we need.”

Designing the M1

What Apple needed was a chip that took the lessons learned from years of refining mobile systems-on-a-chip for iPhones, iPads, and other products, then added all sorts of additional functionality to address the expanded needs of a laptop or desktop computer.

“During the pre-silicon, when we even designed the architecture or defined the features,” Srouji recalled, “Craig and I sit in the same room and we say, ‘OK, here’s what we want to design. Here are the things that matter.’”

When Apple first announced its plans to launch the first Apple Silicon Mac this year, onlookers speculated that the iPad Pro’s A12X or A12Z chips were a blueprint and that the new Mac chip would be something like an A14X—a beefed-up variant of the chips that shipped in the iPhone 12 this year.

Not exactly so, said Federighi:

The M1 is essentially a superset, if you want to think of it relative to A14. Because as we set out to build a Mac chip, there were many differences from what we otherwise would have had in a corresponding, say, A14X or something.

We had done lots of analysis of Mac application workloads, the kinds of graphic/GPU capabilities that were required to run a typical Mac workload, the kinds of texture formats that were required, support for different kinds of GPU compute and things that were available on the Mac… just even the number of cores, the ability to drive Mac-sized displays, support for virtualization and Thunderbolt.

There are many, many capabilities we engineered into M1 that were requirements for the Mac, but those are all superset capabilities relative to what an app that was compiled for the iPhone would expect.

Srouji expanded on the point:

The foundation of many of the IPs that we have built and that became foundations for M1 to go build on top of it… started over a decade ago. As you may know, we started with our own CPU, then graphics and ISP and Neural Engine.

So we’ve been building these great technologies over a decade, and then several years back, we said, “Now it’s time to use what we call the scalable architecture.” Because we had the foundation of these great IPs, and the architecture is scalable with UMA.

Then we said, “Now it’s time to go build a custom chip for the Mac,” which is M1. It’s not like some iPhone chip that is on steroids. It’s a whole different custom chip, but we do use the foundation of many of these great IPs.

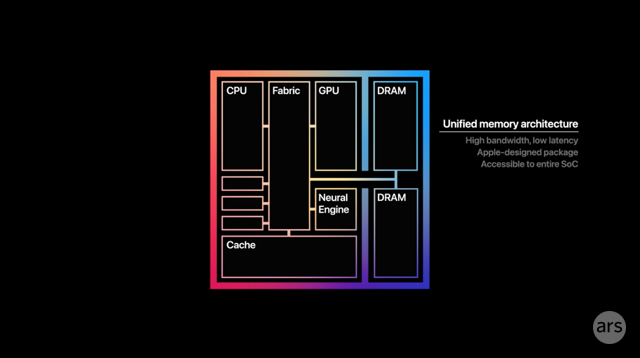

Unified memory architecture

UMA stands for “unified memory architecture.” When potential users look at M1 benchmarks and wonder how it’s possible that a mobile-derived, relatively low-power chip is capable of that kind of performance, Apple points to UMA as a key ingredient for that success.

Federighi claimed that “modern computational or graphics rendering pipelines” have evolved, and they’ve become a “hybrid” of GPU compute, GPU rendering, image signal processing, and more.

UMA essentially means that all the components share one pool of very fast memory positioned very close to all of them: a central processor (CPU), a graphics processor (GPU), a neural processor (NPU), an image signal processor (ISP), and so on. This runs counter to a common desktop paradigm of, say, dedicating one pool of memory to the CPU and another to the GPU on the other side of the board.

When users run demanding, multifaceted applications, the traditional pipelines may end up losing a lot of time and efficiency moving or copying data around so it can be accessed by all those different processors. Federighi suggested Apple’s success with the M1 is partially due to rejecting this inefficient paradigm at both the hardware and software level:

We not only got the great advantage of just the raw performance of our GPU, but just as important was the fact that with the unified memory architecture, we weren’t moving data constantly back and forth and changing formats that slowed it down. And we got a huge increase in performance.

And so I think workloads in the past where it’s like, come up with the triangles you want to draw, ship them off to the discrete GPU and let it do its thing and never look back—that’s not what a modern computer rendering pipeline looks like today. These things are moving back and forth between many different execution units to accomplish these effects.
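To make the copying argument concrete, here is a rough back-of-the-envelope sketch in Python. It is a toy model, not a description of how the M1 or any real driver works: the function name `bytes_copied`, the two-pool "cpu"/"gpu" setup, and the 8 MiB frame size are all assumptions made for illustration. It simply counts how many bytes have to cross between memory pools when a frame bounces between processors.

```python
def bytes_copied(stages, frame_bytes, unified):
    """Count bytes moved between memory pools while one frame flows through a pipeline.

    stages      -- processor that runs each stage, in order, e.g. ["cpu", "gpu"]
    frame_bytes -- size of the data handed from stage to stage (assumed constant)
    unified     -- True if all processors share one pool (the UMA case)
    """
    if unified:
        # Every processor reads and writes the same pool, so hand-offs are free.
        return 0

    copied = 0
    location = stages[0]  # the frame starts in the first stage's own pool
    for processor in stages[1:]:
        if processor != location:
            copied += frame_bytes  # crossing pools means copying the whole frame
            location = processor
    return copied


# A hypothetical "hybrid" pipeline that ping-pongs between CPU and GPU.
pipeline = ["cpu", "gpu", "cpu", "gpu"]
frame = 8 * 1024 * 1024  # 8 MiB per frame (illustrative figure)

print(bytes_copied(pipeline, frame, unified=False))  # three full-frame copies
print(bytes_copied(pipeline, frame, unified=True))   # no copies at all
```

The more often a workload changes hands, the more the split-pool model pays, which is the pattern Federighi describes. The model ignores format conversions, synchronization, and caching, all of which matter on real hardware.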

That’s not the only optimization. For a few years now, Apple’s Metal graphics API has employed “tile-based deferred rendering,” which the M1’s GPU is designed to take full advantage of. Federighi explained:

Where old-school GPUs would basically operate on the entire frame at once, we operate on tiles that we can move into extremely fast on-chip memory, and then perform a huge sequence of operations with all the different execution units on that tile. It’s incredibly bandwidth-efficient in a way that these discrete GPUs are not. And then you just combine that with the massive width of our pipeline to RAM and the other efficiencies of the chip, and it’s a better architecture.
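The bandwidth claim can be illustrated with a similar toy model in Python. This is a simplified sketch under stated assumptions, not Apple's implementation: the helper names `run_whole_frame` and `run_tiled` are hypothetical, "RAM traffic" is just a count of pixels read and written, and the passes are simple per-pixel operations. It also leaves out the parts of real tile-based deferred rendering that handle geometry binning and hidden-surface removal.

```python
def run_whole_frame(frame, passes):
    """Apply each pass to the full frame; every pass round-trips through RAM."""
    traffic = 0
    for op in passes:
        frame = [[op(px) for px in row] for row in frame]
        traffic += 2 * sum(len(row) for row in frame)  # read + write every pixel
    return frame, traffic


def run_tiled(frame, passes, tile=2):
    """Load each tile once, run all passes on it locally, then write it back once."""
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    traffic = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            ys = range(ty, min(ty + tile, height))
            xs = range(tx, min(tx + tile, width))
            # One read from RAM into (simulated) on-chip tile memory.
            local = [[frame[y][x] for x in xs] for y in ys]
            for op in passes:
                # Intermediate results never leave the tile.
                local = [[op(px) for px in row] for row in local]
            # One write back to RAM.
            for i, y in enumerate(ys):
                for j, x in enumerate(xs):
                    out[y][x] = local[i][j]
            traffic += 2 * len(ys) * len(xs)
    return out, traffic


# Three illustrative per-pixel passes over a 4x4 frame.
passes = [lambda p: p + 1, lambda p: p * 2, lambda p: p - 3]
frame = [[y * 4 + x for x in range(4)] for y in range(4)]

full_result, full_traffic = run_whole_frame(frame, passes)
tiled_result, tiled_traffic = run_tiled(frame, passes)
print(full_result == tiled_result, full_traffic, tiled_traffic)
```

Both functions produce the same image, but in this model the whole-frame version pays for a RAM round trip on every pass while the tiled version pays once per tile, regardless of how many passes run. Passes that need neighboring pixels from other tiles would complicate the picture.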

https://arstechnica.com/?p=1724498