On Wednesday, Meta announced an AI model called the Segment Anything Model (SAM) that can identify individual objects in images and videos, even those not encountered during training, reports Reuters.

According to a blog post from Meta, SAM is an image segmentation model that can respond to text prompts or user clicks to isolate specific objects within an image. Image segmentation is a process in computer vision that involves dividing an image into multiple segments or regions, each representing a specific object or area of interest.
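To make the idea of "segments" concrete, here is a minimal Python sketch. It is not SAM or Meta's method: it uses a plain flood fill over a hypothetical 5×6 grid of pixel values, grouping touching pixels that share a value into one region. The function name `label_regions` and the toy image are illustrative assumptions, not anything from Meta's code.

```python
from collections import deque

def label_regions(image):
    """Give every pixel a region id; 4-connected pixels with equal values share an id."""
    height, width = len(image), len(image[0])
    labels = [[-1] * width for _ in range(height)]  # -1 means "not labeled yet"
    next_id = 0
    for r in range(height):
        for c in range(width):
            if labels[r][c] != -1:
                continue
            # Flood fill outward from this unlabeled pixel.
            value = image[r][c]
            labels[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and labels[ny][nx] == -1 and image[ny][nx] == value:
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels, next_id

# Hypothetical toy image: 0 is background, 1 and 2 are two separate "objects".
TOY_IMAGE = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 0, 0, 0, 2, 0],
    [0, 0, 0, 0, 0, 0],
]

labels, region_count = label_regions(TOY_IMAGE)
```

Each distinct id in `labels` plays the role of one segmentation mask. A learned model such as SAM predicts masks from image content rather than from exact pixel equality, so this sketch only illustrates what the output of segmentation looks like.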

The purpose of image segmentation is to make an image easier to analyze or process. Meta also sees the technology as being useful for understanding webpage content, augmented reality applications, image editing, and aiding scientific study by automatically localizing animals or objects to track on video.

Typically, Meta says, creating an accurate segmentation model “requires highly specialized work by technical experts with access to AI training infrastructure and large volumes of carefully annotated in-domain data.” With SAM, Meta aims to “democratize” this process by reducing the need for specialized training and expertise, which it hopes will foster further research into computer vision.

In addition to SAM, Meta has assembled a dataset it calls “SA-1B” that includes 11 million images licensed from “a large photo company” and 1.1 billion segmentation masks produced by its segmentation model. Meta will make SAM and its dataset available for research purposes under an Apache 2.0 license.

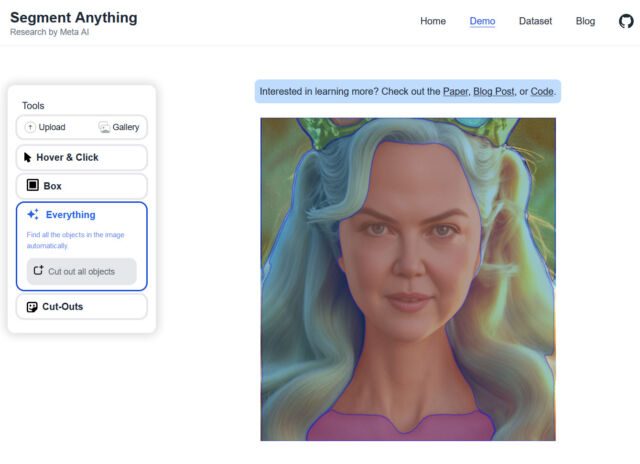

Currently, the code (without the weights) is available on GitHub, and Meta has created a free interactive demo of its segmentation technology. In the demo, visitors can upload a photo and use “Hover & Click” (selecting objects with a mouse), “Box” (selecting objects within a selection box), or “Everything” (which attempts to automatically ID every object in the image).
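The three demo modes can be pictured as three ways of querying a set of masks. The Python sketch below is a toy analogue only, not the demo's code or SAM's API: it assumes a precomputed, hypothetical label map (`TOY_LABELS`) where each number marks one region, and the function names are invented for illustration. The real model predicts masks from the prompt with a neural network instead of looking them up.

```python
# Hypothetical label map: each number identifies one region (0 is background).
TOY_LABELS = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 0, 0, 0, 2, 0],
    [0, 0, 0, 0, 0, 0],
]

def pixels_of(labels, region_id):
    """Return the set of (row, col) pixels that belong to one region."""
    return {
        (r, c)
        for r, row in enumerate(labels)
        for c, value in enumerate(row)
        if value == region_id
    }

def region_ids(labels):
    """Return every region id in the label map, in ascending order."""
    return sorted({value for row in labels for value in row})

def select_by_click(labels, row, col):
    """'Hover & Click' analogue: the region under the clicked pixel."""
    return pixels_of(labels, labels[row][col])

def select_by_box(labels, top, left, bottom, right):
    """'Box' analogue: ids of regions lying entirely inside the inclusive box."""
    return [
        rid
        for rid in region_ids(labels)
        if all(top <= r <= bottom and left <= c <= right
               for r, c in pixels_of(labels, rid))
    ]

def select_everything(labels):
    """'Everything' analogue: one pixel set per region."""
    return {rid: pixels_of(labels, rid) for rid in region_ids(labels)}

clicked = select_by_click(TOY_LABELS, 2, 4)
boxed = select_by_box(TOY_LABELS, 1, 1, 3, 4)
everything = select_everything(TOY_LABELS)
```

Here a click returns one mask, a box returns the regions it fully contains, and "everything" returns all of them at once.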

While image segmentation technology is not new, SAM is noteworthy for its ability to identify objects not present in its training dataset and its partially open approach. Also, the release of the SA-1B dataset could spark a new generation of computer vision applications, similar to how Meta’s LLaMA language model is already inspiring offshoot projects.

According to Reuters, Meta CEO Mark Zuckerberg has emphasized the importance of incorporating generative AI into the company’s apps this year. Although Meta has not yet released a commercial product using this type of AI, it has previously used technology similar to SAM internally for photo tagging, content moderation, and determining recommended posts on Facebook and Instagram.

Meta’s announcement comes amid fierce competition among Big Tech companies to dominate the AI space. Microsoft-backed OpenAI’s ChatGPT language model gained widespread attention in the fall of 2022, sparking a wave of investments that may define the next major business trend in technology beyond social media and the smartphone.

https://arstechnica.com/?p=1929377