Late last week, both Bloomberg and The Washington Post published stories focused on the ostensibly disastrous impact artificial intelligence is having on the power grid and on efforts to collectively reduce our use of fossil fuels. The high-profile pieces lean heavily on recent projections from Goldman Sachs and the International Energy Agency (IEA) to cast AI’s “insatiable” demand for energy as an almost apocalyptic threat to our power infrastructure. The Post piece even cites anonymous “some [people]” in reporting that “some worry whether there will be enough electricity to meet [the power demands] from any source.”

Digging into the best available numbers and projections, though, it’s hard to see AI’s current and near-future environmental impact in such a dire light. While generative AI models and tools can and will use a significant amount of energy, we shouldn’t conflate AI energy usage with the larger and largely pre-existing energy usage of “data centers” as a whole. And as with any technology, whether that AI energy use is worthwhile depends largely on your wider opinion of the value of generative AI in the first place.

Not all data centers

While the headline focus of both Bloomberg and The Washington Post’s recent pieces is on artificial intelligence, the actual numbers and projections cited in both pieces overwhelmingly focus on the energy used by Internet “data centers” as a whole. Long before generative AI became the current Silicon Valley buzzword, those data centers were already growing immensely in size and energy usage, powering everything from Amazon Web Services servers to online gaming services, Zoom video calls, and cloud storage and retrieval for billions of documents and photos, to name just a few of the more common uses.

The Post story acknowledges that these “nondescript warehouses packed with racks of servers that power the modern Internet have been around for decades.” But in the very next sentence, the Post asserts that, today, data center energy use “is soaring because of AI.” Bloomberg asks one source directly “why data centers were suddenly sucking up so much power” and gets back a blunt answer: “It’s AI… It’s 10 to 15 times the amount of electricity.”

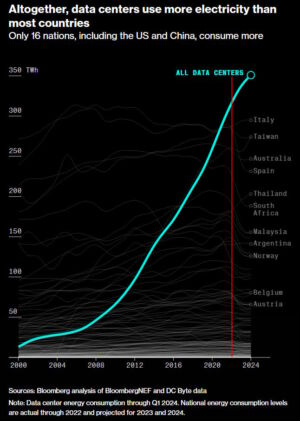

Unfortunately for Bloomberg, that quote is followed almost immediately by a chart that heavily undercuts the AI alarmism. That chart shows worldwide data center energy usage growing at a remarkably steady pace from about 100 TWh in 2012 to around 350 TWh in 2024. The vast majority of that energy usage growth came before 2022, when the launch of tools like Dall-E and ChatGPT largely set off the industry’s current mania for generative AI. If you squint at Bloomberg’s graph, you can almost see the growth in energy usage slowing down a bit since that momentous year for generative AI.
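For a rough sense of what that "remarkably steady pace" means, the two endpoints cited above can be turned into an average growth rate. This is only a back-of-the-envelope sketch: the 100 TWh and 350 TWh figures are approximate values as described from Bloomberg's chart, and both the constant-percentage and straight-line growth models are simplifying assumptions rather than anything the chart itself states.

```python
# Approximate endpoints as described from Bloomberg's chart (not exact data).
start_year, start_twh = 2012, 100.0
end_year, end_twh = 2024, 350.0

years = end_year - start_year

# Assumption 1: constant percentage growth (compound annual growth rate).
cagr = (end_twh / start_twh) ** (1 / years) - 1

# Assumption 2: straight-line growth, in TWh added per year.
linear_twh_per_year = (end_twh - start_twh) / years

print(f"Compound growth: about {cagr:.1%} per year")
print(f"Linear growth: about {linear_twh_per_year:.1f} TWh per year")
```

Under either assumption, the growth works out to roughly 11 percent (or about 21 TWh) per year on average across the whole period, which is the baseline any post-2022 "AI surge" would need to visibly exceed.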

Determining precisely how much of that data center energy use is taken up specifically by generative AI is a difficult task, but Dutch researcher Alex de Vries found a clever way to get an estimate. In his study “The growing energy footprint of artificial intelligence,” de Vries starts with estimates that Nvidia’s specialized chips are responsible for about 95 percent of the market for generative AI calculations. He then uses Nvidia’s projected production of 1.5 million AI servers in 2027 (and the projected power usage for those servers) to estimate that the AI sector as a whole could use anywhere from 85 to 134 TWh of electricity per year by that point.

To be sure, that is an immense amount of power, representing about 0.5 percent of projected electricity demand for the entire world (and an even greater share of the local energy mix for some common data center locations). But measured against other common worldwide uses of electricity, it hardly looks like a mind-boggling energy hog. A 2018 study estimated that PC gaming as a whole accounted for 75 TWh of electricity use per year, to pick just one common human activity on the same general energy scale (and that’s without counting console or mobile gamers).

More to the point, de Vries’ AI energy estimates are only a small fraction of the 620 to 1,050 TWh that data centers as a whole are projected to use by 2026, according to the IEA’s recent report. The vast majority of all that data center power will still be going to more mundane Internet infrastructure that we all take for granted (and which is not nearly as sexy a headline bogeyman as “AI”).
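To put rough numbers on "a small fraction," the ranges quoted above can be compared directly. Treat this as an illustrative sketch only: de Vries’ range is pegged to 2027 while the IEA range is for 2026, so dividing one by the other mixes years, and pairing the extremes of each range (lowest AI estimate against highest data center estimate, and vice versa) is an assumption made here to bracket the possibilities, not a method taken from either source.

```python
# Figures quoted in the article, all in TWh per year.
ai_low, ai_high = 85.0, 134.0    # de Vries' AI estimate (2027)
dc_low, dc_high = 620.0, 1050.0  # IEA data center projection (2026)
pc_gaming = 75.0                 # 2018 estimate for PC gaming

# Bracket AI's share of all data center use by pairing range extremes.
share_min = ai_low / dc_high
share_max = ai_high / dc_low

# Compare the AI estimate with PC gaming's estimated use.
gaming_ratio_low = ai_low / pc_gaming
gaming_ratio_high = ai_high / pc_gaming

print(f"AI share of data center use: {share_min:.0%} to {share_max:.0%}")
print(f"AI vs. PC gaming: {gaming_ratio_low:.1f}x to {gaming_ratio_high:.1f}x")
```

Even in the most AI-heavy pairing, that leaves AI at a bit over a fifth of projected data center electricity use, and between roughly one and two times the estimate for PC gaming alone.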

https://arstechnica.com/?p=2033273