In the researchers’ COCONUT model (for Chain Of CONtinUous Thought), those kinds of hidden states are encoded as “latent thoughts” that replace the individual written steps in a logical sequence both during training and when processing a query. This avoids the need to convert to and from natural language for each step and “frees the reasoning from being within the language space,” the researchers write, leading to an optimized reasoning path that they term a “continuous thought.”
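To make the difference concrete, here is a minimal toy sketch in Python. It is not the researchers' code: the "model" is a made-up two-dimensional linear map with a tanh, and `WEIGHTS`, `VOCAB`, and all function names are hypothetical. It only contrasts the two loops, one that rounds every step to a word and one that feeds the raw hidden state straight back in.

```python
import math

# Hypothetical toy parameters, chosen only for illustration.
WEIGHTS = [[0.5, -0.2], [0.3, 0.8]]
VOCAB = {"yes": [1.0, 0.0], "no": [0.0, 1.0]}

def toy_step(vec, weights):
    """One stand-in 'model step': a fixed linear map followed by tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, vec))) for row in weights]

def nearest_token(vec, vocab):
    """Decode a hidden state to the vocabulary entry with the largest dot product."""
    return max(vocab, key=lambda tok: sum(a * b for a, b in zip(vocab[tok], vec)))

def reason_in_language(start, weights, vocab, steps):
    """Chain-of-thought style: decode to a token after every step, then re-embed it."""
    vec, trace = start, []
    for _ in range(steps):
        hidden = toy_step(vec, weights)
        token = nearest_token(hidden, vocab)
        trace.append(token)
        vec = vocab[token]  # anything the token does not capture is discarded here
    return vec, trace

def reason_in_latent(start, weights, steps):
    """Continuous-thought style: feed the hidden state back in without decoding."""
    vec = start
    for _ in range(steps):
        vec = toy_step(vec, weights)  # no round trip through the vocabulary
    return vec

language_vec, language_trace = reason_in_language([1.0, 0.0], WEIGHTS, VOCAB, 3)
latent_vec = reason_in_latent([1.0, 0.0], WEIGHTS, 3)
```

In the language loop, every intermediate state collapses to one of two vocabulary vectors. In the latent loop, the state can sit anywhere in between, which is the property the rest of the article builds on.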

Being more breadth-minded

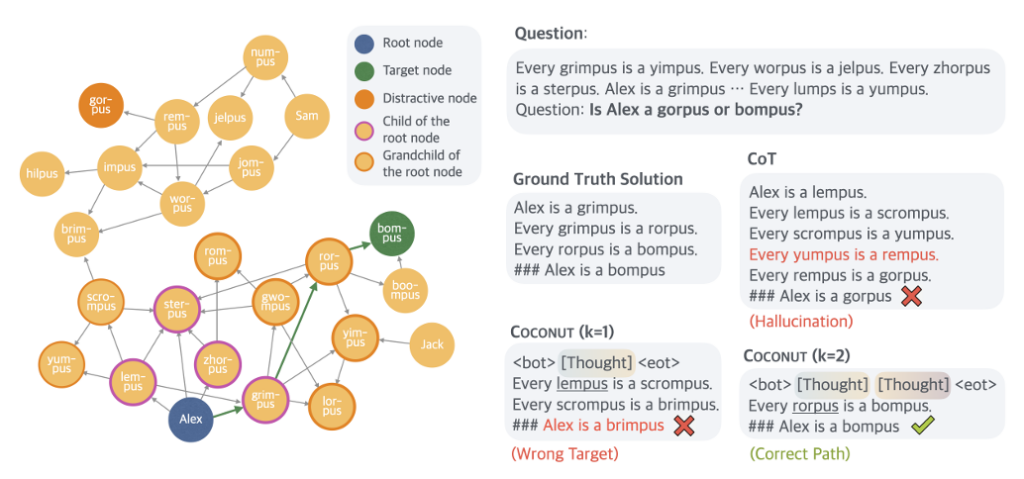

While doing logical processing in the latent space has some benefits for model efficiency, the more important finding is that this kind of model can “encode multiple potential next steps simultaneously.” Rather than having to pursue individual logical options fully and one by one (in a “greedy” sort of process), staying in the “latent space” allows for a kind of instant backtracking that the researchers compare to a breadth-first search through a graph.
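The graph-search analogy can be sketched with ordinary code. The example below is only an illustration of the comparison, not of how COCONUT works internally: the `GRAPH`, its node names, and both functions are hypothetical, and the graph is assumed to have no cycles. A greedy walker commits to the first option and gets stuck, while a breadth-first search keeps every open option on a frontier.

```python
from collections import deque

# Hypothetical acyclic graph: the first branch from "start" leads to a dead end.
GRAPH = {
    "start": ["a", "b"],
    "a": ["dead_end"],
    "dead_end": [],
    "b": ["c"],
    "c": ["goal"],
    "goal": [],
}

def greedy_walk(graph, start, goal):
    """Always follow the first listed edge and never backtrack."""
    path = [start]
    node = start
    while node != goal:
        if not graph[node]:
            return None  # stuck in a dead end with no way back
        node = graph[node][0]
        path.append(node)
    return path

def breadth_first(graph, start, goal):
    """Expand all open paths level by level, so no single bad choice is fatal."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None

greedy_result = greedy_walk(GRAPH, "start", "goal")
bfs_result = breadth_first(GRAPH, "start", "goal")
```

Here the greedy walk returns `None` after wandering into `dead_end`, while the breadth-first search returns the path through `b` and `c`.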

This emergent, simultaneous processing property comes through in testing even though the model isn’t explicitly trained to work that way, the researchers note. “While the model may not initially make the correct decision, it can maintain many possible options within the continuous thoughts and progressively eliminate incorrect paths through reasoning, guided by some implicit value functions,” they write.

That kind of multi-path reasoning didn’t really improve COCONUT’s accuracy over traditional chain-of-thought models on relatively straightforward tests of math reasoning (GSM8K) or general reasoning (ProntoQA). But the researchers found the model did comparatively well on a randomly generated set of ProntoQA-style queries involving complex and winding sets of logical conditions (e.g., “every apple is a fruit, every fruit is food, etc.”).
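For a sense of what such a query looks like as a problem, here is a small hypothetical sketch. It does not use the benchmark's actual format: the `RULES` list and the `entails` function are invented for illustration, with each pair standing for a statement of the form "every X is a Y." Answering a question means chaining rules until the target category is reached or nothing new can be derived.

```python
# Hypothetical rule set; each pair (x, y) stands for "every x is a y".
RULES = [
    ("apple", "fruit"),
    ("fruit", "food"),
    ("apple", "red thing"),
    ("dog", "animal"),
]

def entails(rules, subject, target):
    """Return True if 'every subject is a target' follows from chaining the rules."""
    known = {subject}
    changed = True
    while changed:  # repeat until no rule adds a new category
        changed = False
        for narrower, broader in rules:
            if narrower in known and broader not in known:
                known.add(broader)
                changed = True
    return target in known

apple_is_food = entails(RULES, "apple", "food")
apple_is_animal = entails(RULES, "apple", "animal")
```

With only four rules the chain is trivial, but the same structure with many rules and many irrelevant branches is where a step-by-step reasoner can wander off down the wrong path.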

For these tasks, standard chain-of-thought reasoning models would often get stuck down dead-end paths of inference or even hallucinate completely made-up rules when trying to resolve the logical chain. Previous research has also shown that the “verbalized” logical steps output by these chain-of-thought models “may actually utilize a different latent reasoning process” than the one being shared.

This new research joins a growing body of work looking to understand and exploit the way large language models operate at the level of their underlying neural networks. And while that kind of research hasn’t led to a huge breakthrough just yet, the researchers conclude that models pre-trained with these kinds of “continuous thoughts” from the get-go could “enable models to generalize more effectively across a wider range of reasoning scenarios.”

https://arstechnica.com/ai/2024/12/are-llms-capable-of-non-verbal-reasoning/