The National Highway Traffic Safety Administration has egg on its face after a small research and consulting firm called Quality Control Systems produced a devastating critique of a 2017 agency report finding that Tesla’s Autopilot reduced crashes by 40 percent. The new analysis is coming out now—almost two years after the original report—because QCS had to sue NHTSA under the Freedom of Information Act to obtain the data underlying the agency’s findings. In its report, QCS highlights flaws in NHTSA’s methodology that are serious enough to completely discredit the 40 percent figure, which Tesla has cited multiple times over the last two years.

NHTSA undertook its study of Autopilot safety in the wake of the fatal crash of Tesla owner Josh Brown in 2016. Autopilot—more specifically Tesla’s lane-keeping function called Autosteer—was active at the time of the crash, and Brown ignored multiple warnings to put his hands back on the wheel. Critics questioned whether Autopilot actually made Tesla owners less safe by encouraging them to pay less attention to the road.

NHTSA’s 2017 finding that Autosteer reduced crash rates by 40 percent seemed to put that concern to rest. When another Tesla customer, Walter Huang, died in an Autosteer-related crash last March, Tesla cited NHTSA’s 40 percent figure in a blog post defending the technology. A few weeks later, Tesla CEO Elon Musk berated reporters for focusing on stories about crashes instead of touting the safety benefits of Autopilot.

“They should be writing a story about how autonomous cars are really safe,” Musk said in a May 2018 earnings call. “But that’s not a story that people want to click on. They write inflammatory headlines that are fundamentally misleading to readers.”

But now NHTSA’s full data set is available, and, if anything, it appears to contradict Musk’s claims. The majority of the vehicles in the Tesla data set suffered from missing data or other problems that made it impossible to say whether the activation of Autosteer increased or decreased the crash rate. But when QCS focused on 5,714 vehicles whose data didn’t suffer from these problems, it found that the activation of Autosteer actually increased crash rates by 59 percent.

In some ways this is old news. NHTSA distanced itself from its own findings last May, describing them as a “cursory comparison” that “did not assess the effectiveness” of Autosteer technology. Moreover, the NHTSA report focused on version 1 of the Autopilot hardware, which Tesla hasn’t sold since 2016.

Still, these new findings are relevant to a larger debate about how the federal government oversees driver-assistance systems like Autopilot. By publishing that 40 percent figure, NHTSA conferred unwarranted legitimacy on Tesla’s Autopilot technology. NHTSA then fought to prevent the public release of data that could help the public independently evaluate these findings, allowing Tesla to continue citing the figure for another year. In other words, NHTSA seemed more focused on protecting Tesla from embarrassment than on protecting the public from potentially unsafe automotive technologies.

Serious math errors made Autopilot look better

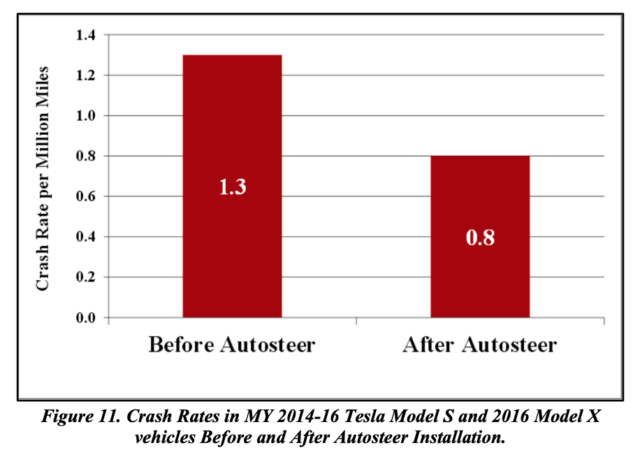

Tesla began shipping cars with Autopilot hardware in 2014, but the Autosteer lane-keeping system wasn’t enabled until October 2015. That provided something of a natural experiment: by comparing crash rates for the same vehicle before and after October 2015, NHTSA could try to estimate how the technology affected safety.

So NHTSA asked Tesla for data about cars sold between 2014 and 2016—how many miles they drove before and after receiving the Autopilot upgrade and whether they experienced a crash (as measured by airbag deployments) before or after Autopilot was enabled. Tesla has unusually complete data about this kind of thing because its cars have wireless connections to Tesla’s headquarters.

The math behind NHTSA’s findings, which the agency summarized in its January 2017 report, should have been straightforward.

To compute a crash rate, you take the number of crashes and divide it by the number of miles traveled. NHTSA did this calculation twice—once for miles traveled before the Autosteer upgrade, and again for miles traveled afterward. NHTSA found that crashes were more common before Autosteer, and the rate dropped by 40 percent once the technology was activated.
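As a minimal sketch of that arithmetic, the Python below computes a crash rate per million miles and the relative change between two periods. The function names and the input figures are illustrative assumptions, not numbers taken from NHTSA’s report.

```python
def crash_rate_per_million_miles(crashes: int, miles: float) -> float:
    """Crashes divided by miles traveled, scaled to one million miles."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return crashes * 1_000_000 / miles


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Hypothetical inputs, chosen only to illustrate the calculation.
before = crash_rate_per_million_miles(crashes=50, miles=40_000_000)
after = crash_rate_per_million_miles(crashes=45, miles=60_000_000)
change = percent_change(before, after)

print(f"before: {before:.2f}, after: {after:.2f}, change: {change:.0f}%")
```

With these made-up inputs, the rate falls from 1.25 to 0.75 crashes per million miles, a 40 percent drop. The result is only as good as the crash and mileage counts that go into it.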

In a calculation like this, it’s important for the numerator and denominator to be drawn from the same set of data points. If the miles from a particular car aren’t in the denominator, then crashes for that same car can’t be in the numerator—otherwise the results are meaningless.

Yet according to QCS, that’s exactly what NHTSA did. Tesla provided NHTSA with data on 43,781 vehicles, but 29,051 of these vehicles were missing data fields necessary to calculate how many miles these vehicles drove prior to the activation of Autosteer. NHTSA handled this by counting these cars as driving zero pre-Autosteer miles. Yet NHTSA counted these same vehicles as having 18 pre-Autosteer crashes—more than 20 percent of the 86 total pre-Autosteer crashes in the data set. The result was to significantly overstate Tesla’s pre-Autosteer crash rate.
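The size of this particular distortion can be estimated from the crash counts alone, because the mileage denominator cancels out. The sketch below is illustrative: the mileage total is a hypothetical placeholder (the figures above don’t include one), the variable names are ours, and it isolates this one error while holding everything else in the calculation fixed.

```python
# Crash counts reported by QCS for the pre-Autosteer period.
TOTAL_PRE_CRASHES = 86        # all pre-Autosteer crashes in the data set
CRASHES_WITHOUT_MILEAGE = 18  # crashes in vehicles counted as driving zero miles

# Hypothetical placeholder: pre-Autosteer miles for vehicles that had mileage data.
# Its value does not affect the ratio computed below.
miles_with_data = 100_000_000

# Mismatched: the numerator includes vehicles whose miles are missing
# from the denominator.
mismatched_rate = TOTAL_PRE_CRASHES / miles_with_data

# Consistent: numerator and denominator cover the same vehicles.
consistent_rate = (TOTAL_PRE_CRASHES - CRASHES_WITHOUT_MILEAGE) / miles_with_data

overstatement = mismatched_rate / consistent_rate - 1
print(f"Pre-Autosteer rate overstated by {overstatement:.0%}")
```

Under these assumptions, counting the 18 crashes without their miles inflates the “before” rate by roughly a quarter (86 divided by 68 is about 1.26).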

Other vehicles in the data set had a different, more subtle problem. Tesla supplied two different data points for cars: the last odometer reading before Autosteer installation and the first odometer reading afterward. Ideally these readings would be identical. However, 8,881 vehicles had a significant gap between these numbers. These cars collectively logged millions of miles during which it’s not known if Autosteer was active or not.

NHTSA chose not to include these miles in the denominator for either the pre- or post-Autosteer calculation. The result is to inflate the crash rate for both the “before” and “after” periods. This almost certainly made the results less accurate, but it’s impossible to know if this made Autopilot look better or worse, on net.
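To see why the direction of the error is unknowable, consider a sketch in which the unaccounted-for miles are split between the two periods in different proportions. Every number here is hypothetical, as are the function and parameter names, and the sketch assumes the crashes themselves were assigned to the correct period.

```python
def rate_changes(crashes_before, crashes_after, miles_before, miles_after,
                 gap_miles, gap_share_before):
    """Return (reported_change, actual_change) in the crash rate, as fractions.

    reported: gap miles are dropped from both denominators.
    actual: gap miles are assigned to each period per gap_share_before.
    """
    reported_before = crashes_before / miles_before
    reported_after = crashes_after / miles_after

    actual_before = crashes_before / (miles_before + gap_miles * gap_share_before)
    actual_after = crashes_after / (miles_after + gap_miles * (1 - gap_share_before))

    reported_change = reported_after / reported_before - 1
    actual_change = actual_after / actual_before - 1
    return reported_change, actual_change


# Hypothetical figures, chosen only to show the effect.
for share in (0.0, 0.5, 1.0):
    reported, actual = rate_changes(
        crashes_before=60, crashes_after=40,
        miles_before=50_000_000, miles_after=50_000_000,
        gap_miles=10_000_000, gap_share_before=share,
    )
    print(f"gap share before={share:.1f}: "
          f"reported {reported:+.0%}, actual {actual:+.0%}")
```

With these made-up inputs, the reported change is a 33 percent drop in every case, while the actual change ranges from a 44 percent drop (all gap miles driven with Autosteer) to a 20 percent drop (none of them). Without knowing the split, there is no way to correct the reported figure.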

It’s only possible to compute accurate crash rates for vehicles that have complete data and no gap between the pre-Autosteer and post-Autosteer odometer readings. Tesla’s data set only included 5,714 vehicles like that. When QCS director Randy Whitfield ran the numbers for these vehicles, he found that the rate of crashes per mile increased by 59 percent after Tesla enabled the Autosteer technology.

So does that mean that Autosteer actually makes crashes 59 percent more likely? Probably not. Those 5,714 vehicles represent only a small portion of Tesla’s fleet, and there’s no way to know if they’re representative. And that’s the point: it’s reckless to try to draw conclusions from such flawed data. NHTSA should have either asked Tesla for more data or left that calculation out of its report entirely.

NHTSA kept its data from the public at Tesla’s behest

The misinformation in NHTSA’s report could have been corrected much more quickly if NHTSA had chosen to be transparent about its data and methodology. QCS filed a Freedom of Information Act request for the data and methodology underlying NHTSA’s conclusions in February 2017, about a month after the report was published. If NHTSA had supplied the information promptly, the problems with NHTSA’s calculations would likely have been identified quickly. Tesla would not have been able to continue citing them more than a year after they were published.

Instead, NHTSA fought QCS’ FOIA request after Tesla indicated that the data was confidential and that its release would cause Tesla competitive harm. QCS sued the agency in July 2017. In September 2018, a federal judge rejected most of NHTSA’s arguments, clearing the way for NHTSA to release the information to QCS late last year.

QCS says it doesn’t have any financial stake in the Autopilot controversy and didn’t receive outside assistance for its litigation. “It seemed important,” Whitfield said. “We had some experience FOIAing data from NHTSA and we knew a good lawyer.”

We asked NHTSA for comment on QCS’ analysis on Monday morning. At the agency’s request, we delayed publishing this story by 24 hours to give it time to prepare a response. Here’s what the agency wound up sending us: “The agency is reviewing the report released by Quality Control Systems Corp. with interest and will provide comment as appropriate.”

We also asked Tesla for comment. “QCS’ analysis dismissed the data from all but 5,714 vehicles of the total 43,781 vehicles in the data set we provided to NHTSA back in 2016,” a company spokeswoman wrote. “Given the dramatic increase in the number of Tesla vehicles on the road, their analysis today represents about 0.5 percent of the total mileage that Tesla vehicles have traveled to date, and about 1 percent of the total mileage that Tesla vehicles have traveled to date with Autopilot engaged.”

Tesla also noted that NHTSA’s 2017 report had other positive things to say about Autopilot beyond the 40 percent figure. NHTSA wrote that it “did not identify any defects in the design or performance of the AEB or Autopilot systems,” nor did it find “any incidents in which the systems did not perform as designed.”

Tesla also touted a new quarterly safety report that it now publishes on its website. According to that report, Tesla cars with Autopilot engaged experience fewer accidents per mile than Tesla cars with Autopilot disengaged. These, in turn, experience fewer accidents per mile than the average car on the road.

But Whitfield is dismissive of this data, too.

“The statistics are not controlled for a lot of the effects that we know are important,” Whitfield told Ars in a Monday phone interview. Autopilot use is supposed to be limited to freeways, which tend to have fewer accidents per mile than other streets. So the fact that there are fewer crashes per mile when Autopilot is engaged doesn’t necessarily prove that Autopilot is making the trips safer. It might simply reflect the fact that crashes are less common, on a per-mile basis, on freeways.
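A small sketch shows how road type alone could produce that pattern. All of the rates and mileage figures below are hypothetical. They are set up so that Autopilot has no effect at all on any given road type, yet the pooled numbers still favor Autopilot.

```python
# Hypothetical crash rates per million miles. By construction they are the
# same whether or not Autopilot is engaged.
RATE_BY_ROAD = {"freeway": 0.5, "other": 2.0}

# Hypothetical miles driven (in millions), by Autopilot status and road type.
MILES = {
    "autopilot_on": {"freeway": 90, "other": 10},
    "autopilot_off": {"freeway": 30, "other": 70},
}


def aggregate_rate(miles_by_road):
    """Overall crashes per million miles, pooling all road types."""
    crashes = sum(RATE_BY_ROAD[road] * miles for road, miles in miles_by_road.items())
    return crashes / sum(miles_by_road.values())


on_rate = aggregate_rate(MILES["autopilot_on"])
off_rate = aggregate_rate(MILES["autopilot_off"])

print(f"Autopilot engaged:    {on_rate:.2f} crashes per million miles")
print(f"Autopilot disengaged: {off_rate:.2f} crashes per million miles")
```

In this made-up example, the pooled rate is 0.65 with Autopilot engaged and 1.55 with it disengaged, even though Autopilot changes nothing on either kind of road. The entire gap comes from where the miles were driven, which is why a comparison that doesn’t control for road type can’t settle the question.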

As for the comparison between Tesla and non-Tesla vehicles, new and high-end cars tend to have much lower accident rates than cars in general. Tesla’s lower crash rates may reflect the fact that most Tesla vehicles are newer than average—which means that they are less likely to have the kinds of problems that inevitably develop as cars age.

Also, the high cost of Tesla’s vehicles means that Tesla’s drivers are likely to be richer and older than the average driver. Middle-aged drivers tend to be safer than young drivers, and wealthier drivers can typically better afford to do regular maintenance, avoiding safety hazards like bald tires. So Tesla’s relatively low crash rate may reflect the demographics of its customer base more than the safety features of its cars.

But as far as we know, Tesla hasn’t provided recent crash data to independent experts who might be able to control for these kinds of factors to evaluate Autopilot’s safety in a rigorous way.

https://arstechnica.com/?p=1455289