For years, the open secret at Valve (makers of game series like Half-Life and Portal) has been the company’s interest in a new threshold of game experiences. We’ve seen this most prominently with SteamVR as a virtual reality platform, but the game studio has also openly teased its work on “brain-computer interfaces” (BCI)—meaning, ways to read brainwave activity to either control video games or modify those experiences.

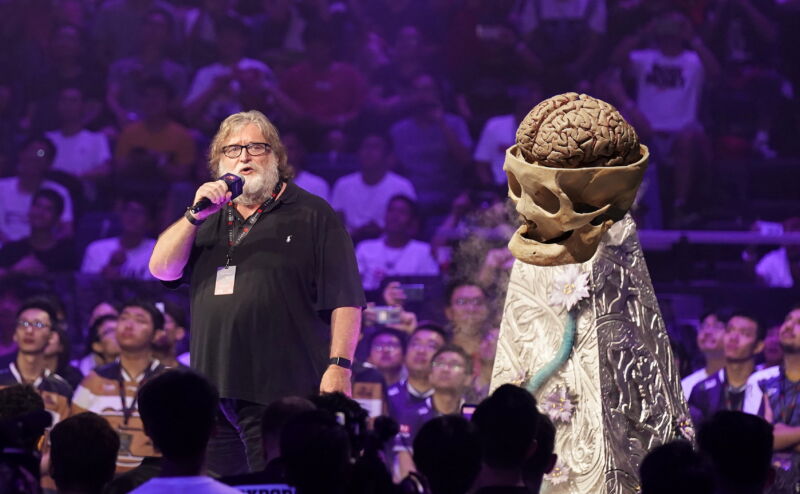

Most of what we’ve seen from Valve’s skunkworks divisions thus far, particularly at a lengthy GDC 2019 presentation, has revolved around reading your brain’s state (e.g., capturing nervous-system energy in your wrists before it reaches your fingers, to reduce button-tap latency in twitchy shooters like Valve’s Counter-Strike). In a Monday interview with New Zealand’s 1 News, Valve co-founder Gabe Newell finally began teasing a more intriguing level of BCI interaction: one that changes the state of your brain.

“Our ability to create experiences in people’s brains, that aren’t mediated through their meat peripherals [e.g., fingers, eyes], will be better than is [currently] possible,” Newell asserts as part of his latest 12-minute video interview. Later, he claims that “the real world will seem flat, colorless, and blurry compared to the experiences that you’ll be able to create in people’s brains.”

“But that’s not where it gets weird,” Newell continues. “Where it gets weird is when who you are becomes editable through a BCI.”

How many years until the tentacles?

As an example, Newell throws out a casual mood: “I’m feeling unmotivated today.” He envisions a world where such a state of being is no longer seen as “a fundamental personality characteristic that is relatively intractable to change” and shifts to “feed-forward and feedback loops of who you want to be.”

Or, more plainly, “Oh, I’ll turn up my focus right now. My mood should be this.”

Newell uses the phrase “science fiction” a few times in describing this possible BCI-driven future, along with overt references to The Matrix film series. But he also has a sales-pitch example of how mainstream acceptance could begin: with brain-control apps, whose interfaces resemble modern phone apps, for boosts like easier sleep.

This is how I want to sleep right now.

“Sleep will become an app you run, where you input, ‘I need this much sleep, this much REM,’” Newell says. “Instead of fluffing pillows or taking Zolpidem, I’ll just say, this is how I want to sleep right now.” From there, satisfied users will tell their friends about, say, sleeping through 12-hour flights “completely refreshed with my circadian rhythm,” he estimates.

Newell uses a personal story to illustrate why he believes brain-driven perspective is so malleable: He had corneal transplant surgery over a decade ago, which changed his perception of color between the two eyes. When his surgery corrected how his eyes saw color, it “perturbed that relationship” in his brain and created ghost-duplicated images until he got used to the change over a span of a few weeks.

Where do you go from there, if brains are so malleable? Newell mentions Valve’s work on synthetic hands as a collaboration with other researchers, then adds, “As soon as you do that, you say, ‘Oh, can you give people a tentacle?’ Then you think, ‘Oh, brains were never designed to have tentacles,’ but it turns out, brains are really flexible.” Why Newell immediately jumped to tentacles as a fantasy appendage is beyond us, but, hey.

In the short term, brain output before brain input

During this surface-level interview, however, Newell is careful not to estimate when such brain-input manipulation might ever come to market. In fact, he makes clear that he’s not currently in a rush to make it happen, saying, “The rate we’re learning stuff is so fast, we don’t want to prematurely say, ‘Let’s lock everything down and build a product,’ when six months from now, we have something to enable a bunch of other features.”

Instead, he uses the opportunity to confirm significant progress on “modified VR headstraps” that include “high-resolution read technologies.” In other words, Valve wants to more immediately capture users’ brainwave activity, either in terms of reducing button-tap latency or understanding how players’ moods shift during a game or app, and get such a device on the market. Newell admits this is more about creating a platform for game and software developers to “start thinking about these issues” ahead of second- and third-generation BCI products.

“If you’re a software developer in 2022 who doesn’t have one of these in your test lab, you’re making a silly mistake,” he adds.

We’re still waiting to hear more about Galea, a headset platform operated by the open-source collective OpenBCI with significant contributions from Valve, which may very well be the first “high-resolution read technology” headset in line with Newell’s proclamations about near-future BCI options in gaming.

In terms of nervous-system measurement, Galea may include EEG, EMG, EDA, PPG, and eye-tracking as options. It’s unclear whether, say, its EEG system would require a perfect connection to your scalp, or if any of its other measurement systems are particularly invasive. Still, we imagine Galea as a whole will be less invasive than Neuralink, the Elon Musk-driven neuroscience product that begins with a microchip connected directly to the human brain.

Trying not to “drive consumer acceptance off a cliff”

The juicier parts of the interview are the much more forward-looking ones, where Newell goes so far as to hint at playing God. If you think that’s an overstatement, look at this quote:

“You’re used to experiencing the world through eyes, but eyes were created by this low-cost bidder who didn’t care about failure rates and RMAs. If [your eye] got broken, there was no way to repair anything effectively. It totally makes sense from an evolutionary perspective, but it’s not at all reflective of consumer preferences.”

Newell is careful to temper such bold statements with the realities of trusting your sensitive data to massive tech firms. If modern-day handlers of your financial and personal data screw that up, Newell points out, “they’ll drive consumer acceptance off a cliff.” And he also doesn’t envision a world where everyone feels required to use BCIs, just like modern-day life doesn’t necessarily require smartphones.

The same scrutiny would apply to potentially invasive BCIs, Newell says. “Nobody wants to say, ‘Oh, you know, remember Bob? Remember when Bob got hacked by the Russian malware? Man, that sucked; is he still running naked through the forests?’ … People are going to have to have a lot of confidence that these are secure systems that don’t have long-term health risks.”

Newell is also careful not to go into greater detail about how exactly a full read-write BCI would sync up with users or whether they’d require Neuralink-scale surgery—which could very well account for his choice not to estimate any release window in the foreseeable future.

For the full interview, head over to 1 News for its comprehensive report.

https://arstechnica.com/?p=1737116