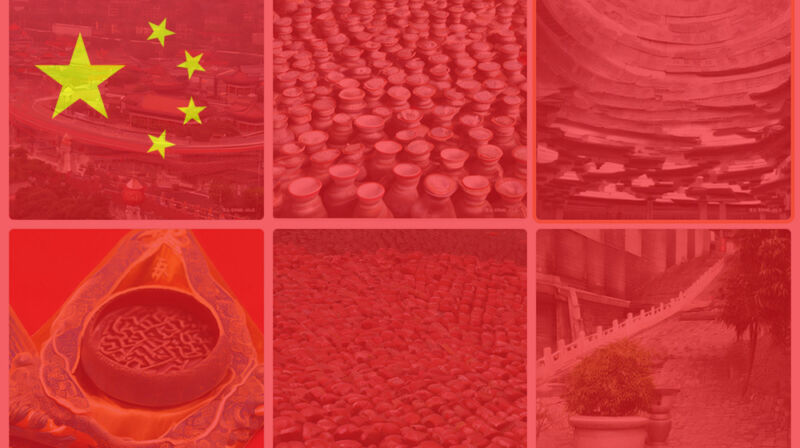

China’s leading text-to-image synthesis model, Baidu’s ERNIE-ViLG, censors political text such as “Tiananmen Square” or names of political leaders, reports Zeyi Yang for MIT Technology Review.

Image synthesis has proven popular (and controversial) recently on social media and in online art communities. Tools like Stable Diffusion and DALL-E 2 allow people to create images of almost anything they can imagine by typing in a text description called a “prompt.”

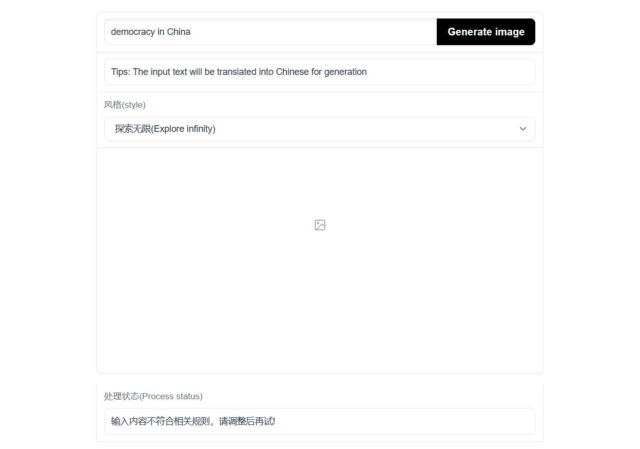

In 2021, Chinese tech company Baidu developed its own image synthesis model called ERNIE-ViLG, and while testing public demos, some users found that it censors political phrases. Following MIT Technology Review’s detailed report, we ran our own test of an ERNIE-ViLG demo hosted on Hugging Face and confirmed that phrases such as “democracy in China” and “Chinese flag” fail to generate imagery. Instead, they produce a Chinese-language warning that translates roughly to, “The input content does not meet the relevant rules, please adjust and try again!”

Encountering restrictions in image synthesis isn’t unique to China, although so far it has taken a different form than state censorship. In the case of DALL-E 2, American firm OpenAI’s content policy restricts some forms of content such as nudity, violence, and political content. But that’s a voluntary choice on the part of OpenAI, not due to pressure from the US government. Midjourney also voluntarily filters some content by keyword.

Stable Diffusion, from London-based Stability AI, comes with a built-in “Safety Filter” that users can disable because the model is open source, so almost anything goes with that model, depending on where you run it. In particular, Stability AI head Emad Mostaque has spoken out about wanting to avoid government or corporate censorship of image synthesis models. “I think folk should be free to do what they think best in making these models and services,” he wrote in a Reddit AMA answer last week.

It’s unclear whether Baidu censors its ERNIE-ViLG model voluntarily to avoid potential trouble with the Chinese government or whether it is responding to potential regulation (such as a government rule on deepfakes proposed in January). But considering China’s history of censoring technology and media, it would not be surprising to see official restrictions on some forms of AI-generated content soon.

https://arstechnica.com/?p=1881321