Last week, Mona Lisa smiled. A big, wide smile, followed by what appeared to be a laugh and the silent mouthing of words that could only be an answer to the mystery that had beguiled her viewers for centuries.

A great many people were unnerved.

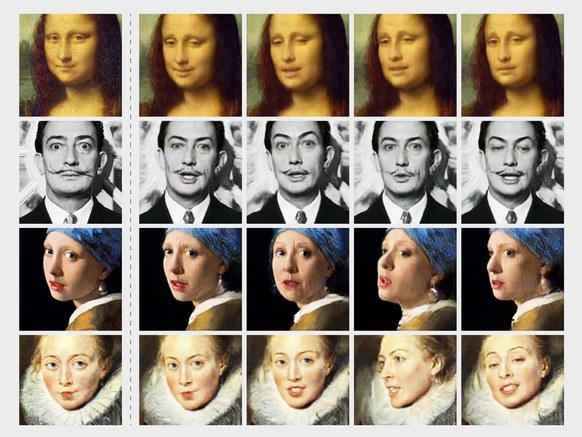

Mona’s “living portrait,” along with likenesses of Marilyn Monroe, Salvador Dali, and others, demonstrated the latest technology in deepfakes—seemingly realistic video or audio generated using machine learning. Developed by researchers at Samsung’s AI lab in Moscow, the portraits display a new method to create credible videos from a single image. With just a few photographs of real faces, the results improve dramatically, producing what the authors describe as “photorealistic talking heads.” The researchers (creepily) call the result “puppeteering,” a reference to how invisible strings seem to manipulate the targeted face. And yes, it could, in theory, be used to animate your Facebook profile photo. But don’t freak out about having strings maliciously pulling your visage anytime soon.

“Nothing suggests to me that you’ll just turnkey use this for generating deepfakes at home. Not in the short-term, medium-term, or even the long-term,” says Tim Hwang, director of the Harvard-MIT Ethics and Governance of AI Initiative. The reasons have to do with the high cost and technical know-how required to create quality fakes, barriers that aren’t going away anytime soon.

Deepfakes first entered the public eye in late 2017, when an anonymous Redditor under the name “deepfakes” began uploading videos of celebrities like Scarlett Johansson stitched onto the bodies of pornographic actors. The first examples involved tools that could insert a face into existing footage, frame by frame (a glitchy process then and now), and the practice swiftly expanded to political figures and TV personalities. Celebrities are the easiest targets, with ample public imagery that can be used to train deepfake algorithms; it’s relatively easy to make a high-fidelity video of Donald Trump, for example, who appears on TV day and night and at all angles.

The underlying technology for deepfakes is a hot area for companies working on things like augmented reality. On Friday, Google announced a breakthrough in controlling depth perception in video footage, addressing, in the process, an easy tell that plagues deepfakes. In their paper, published Monday as a preprint, the Samsung researchers point to applications such as quickly creating avatars for games or video conferences. Ostensibly, the company could use the underlying model to generate an avatar with just a few images, a photorealistic answer to Apple’s Memoji. The same lab also published a paper this week on generating full-body avatars.

Concerns about malicious use of those advances have given rise to a debate about whether deepfakes could be used to undermine democracy. The concern is that a cleverly crafted deepfake of a public figure, perhaps imitating a grainy cell phone video so that its imperfections are overlooked, and timed for the right moment, could shape a lot of opinions. That’s sparked an arms race to automate ways of detecting deepfakes ahead of the 2020 elections. The Pentagon’s Darpa has spent tens of millions of dollars on a media forensics research program, and several startups are angling to become arbiters of truth as the campaign gets underway. In Congress, politicians have called for legislation banning the “malicious use” of deepfakes.

But Robert Chesney, a professor of law at the University of Texas, says political disruption doesn’t require cutting-edge technology; it can result from lower-quality stuff, intended to sow discord, but not necessarily to fool. Take, for example, the three-minute clip of House Speaker Nancy Pelosi circulating on Facebook, appearing to show her drunkenly slurring her words in public. It wasn’t even a deepfake; the miscreants had simply slowed down the footage.

By reducing the number of photos required, Samsung’s method does add another wrinkle: “This means bigger problems for ordinary people,” says Chesney. “Some people might have felt a little insulated by the anonymity of not having much video or photographic evidence online.” Called “few-shot learning,” the approach does most of the heavy computational lifting ahead of time. Rather than being trained with, say, Trump-specific footage, the system is fed a far larger amount of video that includes diverse people. The idea is that the system will learn the basic contours of human heads and facial expressions. From there, the neural network can apply what it knows to manipulate a given face based on only a few photos—or, as in the case of the Mona Lisa, just one.
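
To make the idea concrete, here is a deliberately tiny sketch of that two-stage pattern. It is not the Samsung researchers’ method, which trains neural networks on video. It assumes, purely for illustration, that each “photo” is a short list of numbers, and the function names `pretrain` and `adapt` and the `prior_strength` parameter are hypothetical. The expensive stage averages many people into a generic face; the cheap stage nudges that generic face toward a new person using whatever few photos are available.

```python
def pretrain(people):
    """Expensive stage: pool every photo of every person into one
    'generic face' vector (a toy stand-in for a large pretrained model)."""
    dim = len(people[0][0])
    totals = [0.0] * dim
    count = 0
    for photos in people:
        for photo in photos:
            for i, value in enumerate(photo):
                totals[i] += value
            count += 1
    return [t / count for t in totals]


def adapt(prior, photos, prior_strength=4.0):
    """Cheap stage: blend the pretrained prior with a handful of photos
    of one new person. prior_strength (hypothetical) behaves like that
    many 'virtual photos' of the generic face, so a single real photo
    shifts the estimate without replacing everything learned earlier.
    With no photos and prior_strength of zero there is nothing to
    average, so that combination is not supported."""
    k = len(photos)
    sums = [sum(photo[i] for photo in photos) for i in range(len(prior))]
    return [
        (prior_strength * prior[i] + sums[i]) / (prior_strength + k)
        for i in range(len(prior))
    ]


if __name__ == "__main__":
    # Made-up numbers: three "people", two "photos" each, two features.
    crowd = [
        [[1.0, 3.0], [1.2, 3.1]],
        [[3.0, 5.0], [2.8, 4.9]],
        [[2.0, 4.0], [2.1, 4.2]],
    ]
    generic_face = pretrain(crowd)
    # One photo of a new person, as with the Mona Lisa.
    print(adapt(generic_face, [[6.0, 8.0]]))
```

With more photos of the target, the real data outweighs the prior and the estimate moves closer to the individual, which mirrors why the results in the demo improve with a few photographs rather than one.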

The approach is similar to methods that have revolutionized how neural networks learn other things, like language, with massive datasets that teach them generalizable principles. That’s given rise to models like OpenAI’s GPT-2, which crafts written language so fluent that its creators decided against releasing it, out of fear that it would be used to craft fake news.

There are big challenges to wielding this new technique maliciously against you and me. The system relies on fewer images of the target face, but it requires training a big model from scratch, which is expensive and time-consuming, and will likely only become more so. Such models also take expertise to wield. And it’s unclear why you would want to generate a video from scratch, rather than turning to, say, established techniques in film editing or Photoshop. “Propagandists are pragmatists. There are many more lower cost ways of doing this,” says Hwang.

For now, if it were adapted for malicious use, this particular strain of chicanery would be easy to spot, says Siwei Lyu, a professor at the State University of New York at Albany who studies deepfake forensics under Darpa’s program. The demo, while impressive, misses finer details, he notes, like Marilyn Monroe’s famous mole, which vanishes as she throws back her head to laugh. The researchers also haven’t yet addressed other challenges, like how to properly sync audio to the deepfake, and how to iron out glitchy backgrounds. For comparison, Lyu sends me a state-of-the-art example using a more traditional technique: a video fusing Obama’s face onto an impersonator singing Pharrell Williams’ “Happy.” The Albany researchers aren’t releasing the method, he says, because of its potential to be weaponized.

Hwang has little doubt improved technology will eventually make it hard to distinguish fakes from reality. The costs will go down, or a better-trained model will be released somehow, enabling some savvy person to create a powerful online tool. When that time comes, he argues the solution won’t necessarily be top-notch digital forensics, but the ability to look at contextual clues—a robust way for the public to evaluate evidence outside of the video that corroborates or dismisses its veracity. Fact-checking, basically.

But fact-checking like that has already proven a challenge for digital platforms, especially when it comes to taking action. As Chesney points out, it’s currently easy enough to detect altered footage, like the Pelosi video. The question is what to do next, without heading down a slippery slope to determine the intent of the creators—whether it was satire, maybe, or created with malice. “If it seems clearly intended to defraud the listener to think something pejorative, it seems obvious to take it down,” he says. “But then once you go down that path, you fall into a line-drawing dilemma.” As of the weekend, Facebook seemed to have come to a similar conclusion: The Pelosi video was still being shared around the Internet—with, the company said, additional context from independent fact-checkers.

This story originally appeared on Wired.com.
