With only 13 months left to go in the seemingly interminable 2020 US election season, senators are calling on Facebook, Snapchat, Reddit, and other social networks to do something sooner, rather than later, about the potential proliferation of misleading "deepfake" videos.

“Over two-thirds of Americans now get their news from social media sites,” Sens. Mark Warner (D-Va.) and Marco Rubio (R-Fla.) jointly write in a series of letters to several technology platforms. “Increased reliance on social media will require your company to assume a heightened set of obligations to safeguard the public interest and the public’s trust.”

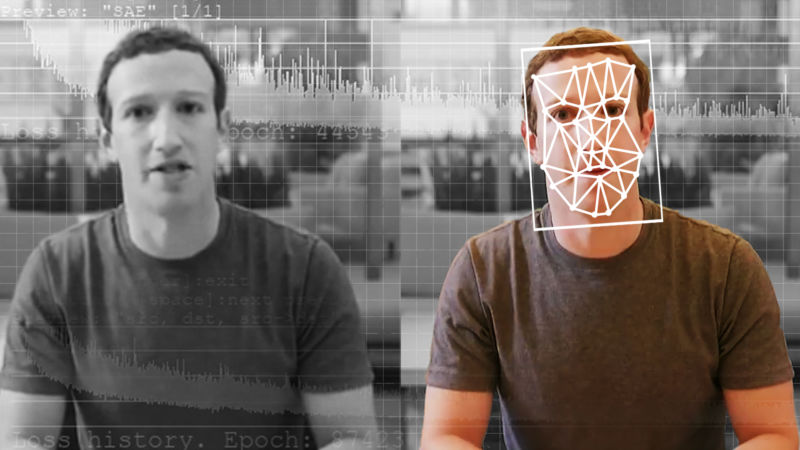

Image manipulation is nothing new, and doctored and misleading images have frequently gone viral ever since enough Americans had fast-enough Internet access to make sharing digital images practical. The prospect of doctored, or even entirely fabricated, video passing for the real thing, however, is a newer development.

“We believe it is vital that your organization have plans in place to address the attempted use” of technologies that use “machine learning and data analytics techniques” to generate misleading audio or video, the senators said. “Having a clear policy in place for authenticating media, and slowing the pace at which disinformation spreads, can help blunt some of these risks.”

To that end, the senators want the platforms to tell them, first of all, what their current policies and rules regarding “misleading, synthetic, or fabricated media” are, if such rules exist at all. The senators also want to know what recourse victims of fake videos have with the platforms, and whether the companies even have the technical ability to detect deepfake video.

Rubio and Warner sent similar letters, with the same set of seven questions, to 11 video-sharing platforms: Facebook, Imgur, LinkedIn, Pinterest, Reddit, Snapchat, TikTok, Tumblr, Twitch, Twitter, and YouTube.

Are deepfakes a big problem?

Deepfake video can be identified—if you know how and where to look. But while individual users may be wising up to the clear tells of a badly Photoshopped still image, the general public is not yet adept at parsing fabricated video (and given how often clear hoaxes still travel far and wide, may never be).

Sens. Rubio and Warner have sounded this alarm before. They have said that deepfakes and other doctored or fabricated video should be considered a national security concern, especially related to election interference.

Unfortunately, manipulated or fake videos don’t have to rely on the latest AI technology to be seriously misleading, as Sen. Warner pointed out in a tweet. “Whether we’re talking about deepfakes or more low-tech synthetic media like [the] slowed-down video of [House] Speaker [Nancy] Pelosi, we’re facing a serious threat to public trust in the information we consume,” Warner said. “We ought to know if social media companies have a plan for how to deal with this.”

https://arstechnica.com/?p=1578591