At this point, anyone who follows generative AI is used to tools that can generate passive, consumable content in the form of text, images, video, and audio. Google DeepMind’s recently unveiled Genie model (for “GENerative Interactive Environment”) does something altogether different, converting images into “interactive, playable environments that can be easily created, stepped into, and explored.”

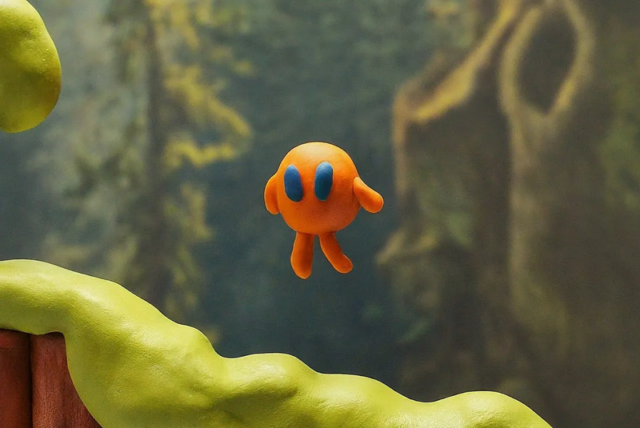

DeepMind’s Genie announcement page shows plenty of sample GIFs of simple platform-style games generated from static starting images (children’s sketches, real-world photographs, etc.) or even text prompts passed through Imagen 2. While those slick-looking GIFs gloss over some major current limitations that are discussed in the full research paper, AI researchers are still excited about how Genie’s generalizable “foundational world modeling” could help supercharge machine learning going forward.

Under the hood

While Genie’s output looks similar at a glance to what might come from a basic 2D game engine, the model doesn’t actually draw sprites and code a playable platformer in the same way a human game developer might. Instead, the system treats its starting image (or images) as frames of a video and generates a best guess at what the entire next frame (or frames) should look like when given a specific input.
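The frame-by-frame loop described above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not Genie’s code: frames are tiny grids of integers, and `toy_predict_next` is a hypothetical, hand-written stand-in for the learned model (it simply slides the last frame’s pixels left or right depending on the action). What the sketch does show is the shape of the loop: each predicted frame is appended to the history and becomes part of the input for the next prediction.

```python
from typing import Callable, List, Sequence

Frame = List[List[int]]  # a tiny grayscale "image": rows of pixel values


def rollout(
    start_frames: Sequence[Frame],
    actions: Sequence[int],
    predict_next: Callable[[Sequence[Frame], int], Frame],
) -> List[Frame]:
    """Extend a video one frame per action: each prediction sees every frame
    so far plus the chosen action, then is appended to the history."""
    frames = list(start_frames)
    for action in actions:
        frames.append(predict_next(frames, action))
    return frames


def toy_predict_next(history: Sequence[Frame], action: int) -> Frame:
    """Hypothetical stand-in for a learned model: action 0 slides the last
    frame's pixels one column left (wrapping around); any other action
    slides them one column right."""
    last = history[-1]
    if action == 0:
        return [row[1:] + row[:1] for row in last]
    return [row[-1:] + row[:-1] for row in last]


# Usage: a single-row frame with one lit pixel, driven by three inputs.
frames = rollout([[[0, 1, 0, 0]]], actions=[1, 1, 0], predict_next=toy_predict_next)
# frames[-1] == [[0, 0, 1, 0]]
```

Nothing in this loop knows about sprites or game rules; all of the “game” lives inside whatever function predicts the next frame, which is the part Genie learns from video.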

To build that model, Genie started with 200,000 hours of publicly available Internet gaming videos, which were filtered down to 30,000 hours of standardized video from “hundreds of 2D games.” The individual frames from those videos were then run through a 200-million-parameter video tokenizer, which compresses them into discrete tokens that a machine-learning algorithm can easily work with.
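To give a rough sense of what tokenizing a frame means, the sketch below cuts a frame into small patches and replaces each patch with the index of the closest entry in a codebook. This is a toy nearest-neighbor quantizer under stated assumptions: the three-entry codebook, the 2×2 patch size, and the function names are all made up for illustration, whereas the paper describes a learned, VQ-VAE-style tokenizer whose codebook is trained along with the rest of the network.

```python
import math
from typing import List, Sequence

Frame = List[List[float]]


def split_into_patches(frame: Frame, patch: int) -> List[List[float]]:
    """Cut a frame into non-overlapping patch x patch tiles (row-major order),
    flattening each tile into a vector. Assumes both frame dimensions are
    multiples of `patch`."""
    height, width = len(frame), len(frame[0])
    tiles = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tiles.append([frame[r][c]
                          for r in range(top, top + patch)
                          for c in range(left, left + patch)])
    return tiles


def tokenize(frame: Frame, codebook: Sequence[Sequence[float]], patch: int = 2) -> List[int]:
    """Replace each tile with the index of its nearest codebook vector
    (Euclidean distance), turning a frame into a short list of integers."""
    return [min(range(len(codebook)), key=lambda i: math.dist(tile, codebook[i]))
            for tile in split_into_patches(frame, patch)]


# Hypothetical 3-entry codebook for 2x2 tiles: empty, solid, top edge.
CODEBOOK = [[0, 0, 0, 0], [1, 1, 1, 1], [1, 1, 0, 0]]
frame = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
tokens = tokenize(frame, CODEBOOK)  # [0, 1, 2, 0]
```

Sixteen pixel values collapse to four small integers here; the same trade (less raw detail, a far more compact sequence) is what makes hours of video tractable for a large model.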

From there, the system trained a “latent action model” to predict what kind of interactive “actions” (i.e., button presses) could feasibly and consistently produce the kind of frame-by-frame changes seen across all of those tokens. The system limits the potential inputs to a “latent action space” of eight possible inputs (e.g., four d-pad directions plus diagonals) in an effort “to permit human playability” (which makes sense, as the videos it was trained on were all human-playable).
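The idea of inferring one of eight discrete actions purely from the change between two frames can be illustrated with a toy quantizer. Everything here is an assumption for illustration: `sprite_position` is a hand-written stand-in for Genie’s learned encoder, and the eight codebook entries are hard-coded compass directions, whereas in Genie the eight latent actions are learned from the video and carry no predefined meaning.

```python
import math
from typing import List, Tuple

Frame = List[List[int]]

# Hypothetical codebook of 8 latent actions, hard-coded as unit steps in the
# eight compass directions (x grows rightward, y grows downward). In Genie
# the 8 codes are learned from video and have no predefined meaning.
CODEBOOK: List[Tuple[int, int]] = [
    (1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1),
]


def sprite_position(frame: Frame) -> Tuple[float, float]:
    """Centroid (x, y) of the nonzero pixels: a hand-written stand-in for a
    learned encoder. Assumes at least one lit pixel."""
    points = [(x, y) for y, row in enumerate(frame) for x, v in enumerate(row) if v]
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def infer_latent_action(prev: Frame, nxt: Frame) -> int:
    """Quantize the change between two frames to the nearest of the 8 codes."""
    (x0, y0), (x1, y1) = sprite_position(prev), sprite_position(nxt)
    delta = (x1 - x0, y1 - y0)
    return min(range(len(CODEBOOK)), key=lambda i: math.dist(delta, CODEBOOK[i]))


def frame_with_sprite(x: int, y: int, size: int = 3) -> Frame:
    """Build a size x size frame with a single lit pixel at column x, row y."""
    return [[1 if (c, r) == (x, y) else 0 for c in range(size)] for r in range(size)]


# Sprite moves one step right between frames: nearest code is index 0, (1, 0).
action = infer_latent_action(frame_with_sprite(1, 1), frame_with_sprite(2, 1))
```

No button-press labels appear anywhere in this sketch; the action index is recovered from the two frames alone, which is the property that lets Genie learn from unannotated gameplay footage.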

With the latent action model established, Genie then trains a “dynamics model” that can take any number of arbitrary frames and latent actions and produce an educated guess about what the next frame should look like given any potential input. This final model ends up with 10.7 billion parameters trained on 942 billion tokens, though Genie’s results suggest that even larger models would produce better results.
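A dynamics model maps recent frames plus a latent action to a guess at the next frame. The toy class below makes that interface concrete under heavy simplifying assumptions: it works on already-tokenized frames, it just counts which next frame most often followed each (recent frames, action) pair in its training data, and it falls back to repeating the last frame for situations it has never seen. Genie’s actual dynamics model is a large learned transformer (the paper describes a MaskGIT-style design), so `CountingDynamicsModel` is hypothetical and only mirrors the inputs and outputs.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List, Sequence, Tuple

Tokens = Tuple[int, ...]  # one frame, already tokenized


class CountingDynamicsModel:
    """Hypothetical toy dynamics model: remembers which next frame most often
    followed a given (recent frames, latent action) pair in training data."""

    def __init__(self, context: int = 1) -> None:
        self.context = context  # how many recent frames condition a prediction
        self.table: DefaultDict[tuple, Counter] = defaultdict(Counter)

    def fit(self, videos: Sequence[List[Tokens]], actions: Sequence[Sequence[int]]) -> None:
        """videos[i][t] is a tokenized frame; actions[i][t] is the latent
        action that led from frame t to frame t + 1."""
        for frames, acts in zip(videos, actions):
            for t, action in enumerate(acts):
                history = tuple(frames[max(0, t + 1 - self.context): t + 1])
                self.table[(history, action)][frames[t + 1]] += 1

    def predict(self, frames: List[Tokens], action: int) -> Tokens:
        """Best guess at the next frame; repeats the last frame if this
        (history, action) pair never appeared in training."""
        counts = self.table.get((tuple(frames[-self.context:]), action))
        if not counts:
            return frames[-1]
        return counts.most_common(1)[0][0]


# Usage with three made-up frame tokenizations and made-up action ids.
a, b, c = (0, 1), (1, 0), (1, 1)
model = CountingDynamicsModel(context=1)
model.fit(videos=[[a, b, a], [a, b]], actions=[[3, 5], [3]])
guess = model.predict([a], 3)  # (1, 0), i.e. frame b
```

The fallback branch is where this toy and a real learned model differ most: a trained network can produce a plausible guess for frames it has never seen, while a lookup table can only repeat what it memorized.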

Previous efforts to build similar interactive models with generative AI have relied on “ground truth action labels” or text descriptions of the training data to guide their machine-learning algorithms. Genie differentiates itself from that work in its ability to “train without action or text annotations,” inferring the latent actions behind a video using nothing but those hours of tokenized video frames.

“The ability to generalize to such significantly [out-of-distribution] inputs underscores the robustness of our approach and the value of training on large-scale data, which would not have been feasible with real actions as input,” the Genie team wrote in its research paper.
