The premiere of the second season of Westworld is a perfect time to ponder what makes us human. This is not new territory; such questions have long been dealt with in works of fiction, and they have appeared in science in the form of studies of creatures that have human-like characteristics—like consciousness—yet are not Homo sapiens.

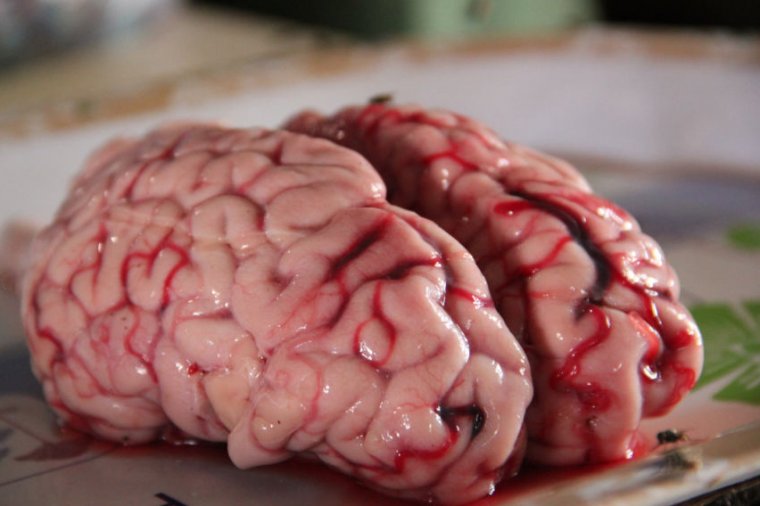

These studies raise ethical questions, whether the subject is an animal or an AI. Last May, a consortium of bioethicists, lawyers, neuroscientists, geneticists, philosophers, and psychiatrists convened at Duke to discuss how these questions apply to a set of relatively new entities: brain “organoids” grown in a lab, chimaeras made by implanting human brain cells into animals, and slices of human brain tissue kept alive outside the body. Could any of these entities achieve some sort of consciousness deserving of protection?

Why organoids?

If we ever want to understand, let alone cure, the very complex brain disorders that plague people (schizophrenia, autism spectrum disorder, and depression, for example), we need research models. To be informative, these models must accurately represent the human brain. Yet as our models become more and more like the real thing (and for now, they are still quite a long way off), the ethical problems with using them may become so pronounced that they negate the models' utility, much like Borges’ map, which was drawn at the full scale of the empire it charted and was therefore useless.

Given how debilitating mental and psychiatric disorders are, is it ethical to use active human neural tissue to study them? And if our ability to create such tissue advances to the point where it develops consciousness, is using it still ethical?

Brain organoids are generated much like the other organoids we’ve made, such as those for the eye, gut, liver, and kidney. Pluripotent stem cells are cultured under conditions that promote the formation of specific cell types; for brain organoids, we can push the cells to adopt the fates of particular brain regions, and these regions can even be combined in limited ways. The resulting 3D organoids contain multiple cell types and are indisputably more physiologically relevant for research than a lawn of identical cells growing in a petri dish.

And they are becoming increasingly complex. Last year, a lab at Harvard recorded neural activity from an organoid after shining light on a region where retinal cells had formed alongside brain cells, demonstrating that the organoid can respond to an external stimulus. This is clearly not the same as feeling distress (or anything else), but it’s a significant advance.

Chimaeras have also been created. In this context, they’re animals, usually mice, into which human brain cells have been implanted. (The implanted cells are derived from pluripotent stem cells, like those used to make the brain organoids above; they are NOT harvested from individual humans.) Again, this is done to provide a more physiologically relevant model of brain diseases such as Parkinson’s. When human glial cells were transplanted into mice, the mice performed better on some learning tasks.

The small size of the rodents used should limit how far the human brain cells can grow, but the cells are clearly affecting the mice. On the question of “human-animal blurring,” the consortium writes: “We believe that decisions about which kinds of chimaera are permitted or about whether certain human organs grown in animals make animals ‘too human-like’ should ultimately be made on a case-by-case basis—taking into account the risks, benefits, and people’s diverse sensitivities.”

Feelings

What if these entities were to develop sentience, whatever that means? Or the ability to feel pleasure or pain? Or the ability to form memories? Or some sort of self-awareness? How would we even determine whether they had such capabilities? The EEG-based measures usually used to assess consciousness don’t work on infants, who are clearly conscious and human, so they might not work on these entities either.

Researchers have studied human brain tissue for more than a century, but now we can also manipulate it, for example by inducing certain neurons to fire. If we develop the technology to retrieve someone’s memories from a slice of tissue, how would we handle that, legally and ethically? Consent for donating this tissue would take on a whole new dimension. And since some aspects of this technology could presumably function even if the tissue donor were dead, would that change the definition of brain death?

Genetic engineers set their own regulations on the use of recombinant DNA at the Asilomar Conference of 1975. Elon Musk argues that it is time to start regulating AI right now. With the BRAIN Initiative currently underway, it is encouraging to see neuroscientists trying to stay ahead of the game by developing ethical guidelines before these technologies mature. That way, once the technologies do arrive, there’s at least a chance that we can deploy them responsibly.

Nature, 2018. DOI: 10.1038/d41586-018-04813-x.

https://arstechnica.com/?p=1298647