IBM has announced it has cleared a major hurdle in its effort to make quantum computing useful: it now has a quantum processor, called Eagle, with 127 functional qubits. This makes it the first company to clear the 100-qubit mark, a milestone that’s interesting because the interactions of that many qubits can’t be simulated using today’s classical computing hardware and algorithms.

But what may be more significant is that IBM now has a roadmap that would see it producing the first 1,000-qubit processor in two years. And, according to IBM Director of Research Darío Gil, that’s the point where calculations done with quantum hardware will start being useful.
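
To put the simulation limit mentioned above in rough numbers, here is a back-of-the-envelope sketch. It assumes the most direct classical approach, storing one complex amplitude (16 bytes at double precision) for every possible state of the qubits. That is only one simulation strategy, and the function name is ours, not IBM's. Smarter methods can handle some circuits with far less memory, so treat this as an illustration of the scaling rather than a hard limit.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store a full state vector of num_qubits qubits.

    Assumes one complex amplitude per basis state (2**num_qubits of them)
    and 16 bytes per amplitude (two 64-bit floats). Hypothetical helper.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (30, 50, 127):
        # Python integers are arbitrary precision, so 2**127 is exact here.
        print(f"{n} qubits: {statevector_bytes(n):.3e} bytes")
```

Each added qubit doubles the memory requirement, which is why the jump from dozens of qubits to 127 puts the full state far out of reach of this brute-force approach.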

What’s new

Gil told Ars that the new qubit count was a product of multiple developments that have been put together for the first time. One is that IBM switched to what it’s calling a “heavy hex” qubit layout, which it announced earlier this year. This layout connects qubits in a set of hexagons with shared sides. In this layout, qubits are connected to two, three, or a maximum of four neighbors—on average, that’s a lower level of connectivity than some competing designs. But Gil argued that the tradeoff is worth it, saying “it reduces the level of connectivity, but greatly improves crosstalk.”

That improvement means that the qubits are less likely to influence their neighbors in ways that can introduce errors. And that’s allowed IBM to increase the density of qubits on the chip.

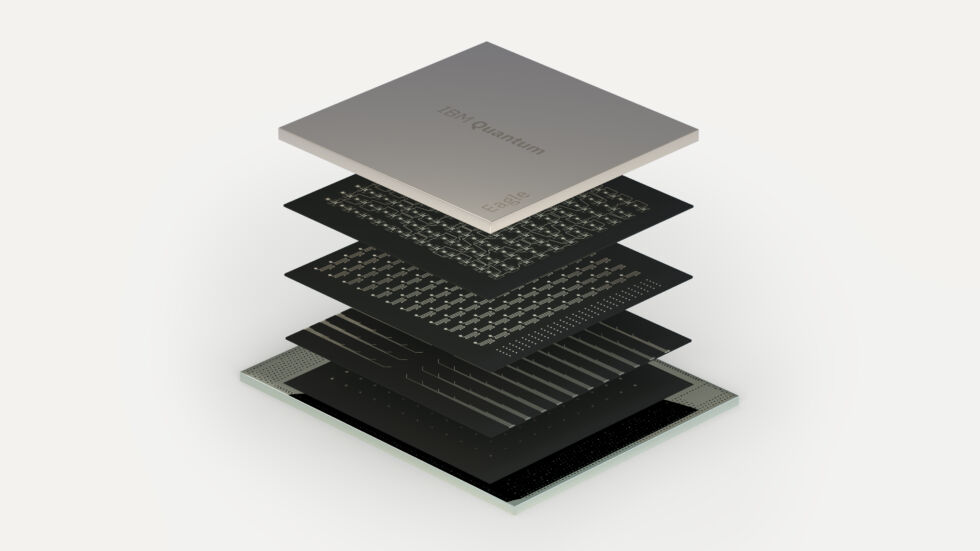

While the qubits remain on a single layer within the processor, the processor as a whole uses additional layers to host signal-carrying wires that allow the control and readout of the qubits. That’s common in traditional chips but new to the world of quantum computing. Those wires can also carry multiplexed signals. This is critical, since the wires that carry these signals are bulky enough that they threatened to limit the qubit count per chip—there simply wouldn’t be enough physical space on the chip’s packaging for all the wires needed for a one-wire-to-one-qubit layout once the qubit count climbed.

Other key advances that Gil highlighted included the ability to tune the microwave frequency that each individual qubit responds to, preventing what are called “collisions,” where a signal meant for one qubit also alters the behavior of others. The quantum “compiler,” the software that sequences the operations that perform a calculation on quantum hardware, can also help avoid collisions, Gil told Ars.

Finally, IBM has gotten the error rate of the basic functional unit of this hardware, the two-qubit gate, down to 0.001, or roughly one error per 1,000 operations. That’s critical, because if error rates stay constant while qubit count goes up, more calculations are likely to suffer errors.
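
A quick sketch shows why the per-gate error rate matters so much as calculations grow. It makes a simplifying assumption that is ours, not IBM's: every two-qubit gate fails independently with the same probability, and a single failure spoils the run. Real hardware errors are messier, so the numbers are illustrative only, and the function name is hypothetical.

```python
def success_probability(gate_error: float, num_gates: int) -> float:
    """Chance that a run of num_gates gates finishes with no gate error.

    Assumes independent, identical per-gate error rates (a simplification).
    """
    return (1.0 - gate_error) ** num_gates


if __name__ == "__main__":
    for error_rate in (0.001, 0.0001):
        for gates in (100, 1_000, 10_000):
            p = success_probability(error_rate, gates)
            print(f"error {error_rate}, {gates} gates: {p:.3f}")
```

Under this model, the chance of a clean run falls off exponentially with the number of gates, so a tenfold drop in the error rate lets a circuit use roughly ten times as many gates for the same odds of success.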

What’s coming

In terms of practical uses, the Eagle processor doesn’t change things dramatically. There are probably some interesting things you can do on it more easily than you could on a smaller processor, but we haven’t yet reached the point where we can regularly do useful calculations that would be difficult or impossible on traditional computers. In many ways, Eagle is most important as a mile marker on IBM’s roadmap. That roadmap foresees a processor with 400-plus qubits next year and something with over 1,000 qubits in 2023. (It shifts to a vague “and beyond” after 2023.)

Putting all the technology together in a single package for Eagle was an important validation for that roadmap. “We have strong confidence that the roadmap of next year delivering a 433-qubit system and the year after that a system with over 1,000 would be possible,” Gil said.

Aside from the rising qubit count, Gil also highlighted the lower error rate. If you assume that rate continues to decline at the same pace it has over the last several years, Gil said that it should hit 0.0001 around the same time as the 1,000-qubit processors show up. That will be sufficient for some levels of error control and/or correction and will expand the sorts of algorithms that can be run on future processors.

That, IBM argues, combined with continued improvements in the compiler and control software, will fundamentally change the utility of quantum computing. “I think that we’re pretty confident that we will be able to demonstrate quantum advantage to some use cases that have value within the next two years,” Gil told Ars.

This doesn’t mean that, overnight, everything will work better, or work at all, if it’s run on quantum hardware. Instead, Gil argued that the transition out of our current state (a small number of error-prone qubits) will be gradual. Some specific applications will start running more efficiently on the very best quantum hardware in the near future. Over time, as further improvements are made, a growing number of applications will gain what Gil termed a quantum advantage. But there won’t be any sort of overnight change.

https://arstechnica.com/?p=1813523