The Washington Post reported earlier today that Apple’s relationship with third-party security researchers could use some additional fine-tuning. Specifically, Apple’s “bug bounty” program, a way companies encourage ethical security researchers to find and responsibly disclose security problems with their products, appears less researcher-friendly and slower to pay than the industry standard.

The Post says it interviewed more than two dozen security researchers who contrasted Apple’s bug bounty program with similar programs at competitors including Facebook, Microsoft, and Google. Those researchers allege serious communication issues and a general lack of trust between Apple and the infosec community its bounties are supposed to be enticing: “a bug bounty program where the house always wins,” according to Luta Security CEO Katie Moussouris.

Poor communication and unpaid bounties

Software engineer Tian Zhang appears to be a perfect example of Moussouris’ point. In 2017, Zhang reported a major security flaw in HomeKit, Apple’s home automation platform. Essentially, the flaw allowed anyone with an Apple Watch to take over any HomeKit-managed accessories physically near them, including smart locks, security cameras, and lights.

After a month of repeated emails to Apple security with no response, Zhang enlisted Apple news site 9to5Mac to reach out to Apple PR, which Zhang described as “much more responsive” than Apple Product Security had been. Two weeks later, six weeks after Zhang initially reported the vulnerability, the issue was finally remedied in iOS 11.2.1.

According to Zhang, his second and third bug reports were again ignored by Product Security, without bounties paid or credit given—but the bugs themselves were fixed. Zhang’s Apple Developer Program membership was revoked after submission of the third bug.

Swiss app developer Nicolas Brunner had a similarly frustrating experience in 2020. While developing an app for Swiss Federal Roadways, Brunner accidentally discovered a serious iOS location-tracking vulnerability that would allow an iOS app to track users without their consent. Specifically, granting an app permission to access location data only while foregrounded actually granted permanent, 24/7 tracking access to the app.

Brunner reported the bug to Apple, which eventually fixed it in iOS 14.0 and even credited Brunner in the security release notes. But Apple dithered for seven months about paying him a bounty, eventually notifying him that “the reported issue and your proof-of-concept do not demonstrate the categories listed” for bounty payout. According to Brunner, Apple ceased responding to his emails after that notification, despite requests for clarification.

According to Apple’s own payouts page, Brunner’s bug discovery would appear to easily qualify for a $25,000 or even $50,000 bounty under the category “User-Installed App: Unauthorized Access to Sensitive Data.” That category specifically references “sensitive data normally protected by a TCC prompt,” and the payouts page later defines “sensitive data” to include “real-time or historical precise location data—or similar user data—that would normally be prevented by the system.”

When asked to comment on Brunner’s case, Apple Head of Security Engineering and Architecture Ivan Krstić told The Washington Post that, “when we make mistakes, we work hard to correct them quickly, and learn from them to rapidly improve the program.”

An unfriendly program

Moussouris—who helped create bug-bounty programs for both Microsoft and the US Department of Defense—told the Post that “you have to have a healthy internal bug fixing mechanism before you can attempt to have a healthy bug vulnerability disclosure program.” Moussouris went on to ask, “What do you expect is going to happen if [researchers] report a bug that you already knew about but hadn’t fixed? Or if they report something that takes you 500 days to fix?”

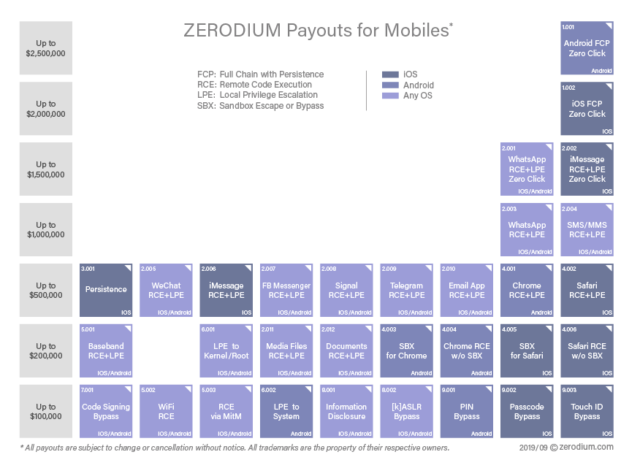

One option for researchers is to bypass a relatively unfriendly bug-bounty program run by the vendor in question and sell the vulnerability to gray-market brokers instead, where access to it can in turn be purchased by threat actors like Israel’s NSO Group. Zerodium offers bounties of up to $2 million for the most severe iOS vulnerabilities, with less-severe vulnerabilities like Brunner’s location-exposure bug in its “up to $100,000” category.

Former NSA research scientist Dave Aitel told the Post that Apple’s closed, secretive approach to dealing with security researchers hampers its overall product security. “Having a good relationship with the security community gives you a strategic vision that goes beyond your product cycle,” Aitel said, adding, “hiring a bunch of smart people only gets you so far.”

Bugcrowd founder Casey Ellis says that companies should pay researchers when reported bugs lead to code changes closing a vulnerability, even if—as Apple rather confusingly told Brunner about his location bug—the reported bug doesn’t meet the company’s own strict interpretation of its guidelines. “The more good faith that goes on, the more productive bounty programs are going to be,” he said.

A runaway success?

Apple’s own description of its bug bounty program is decidedly rosier than the incidents described above, and the reactions of the broader security community, would seem to suggest.

Apple Security Engineering and Architecture head Ivan Krstić told The Washington Post that “the Apple Security Bounty program has been a runaway success.” According to Krstić, the company has nearly doubled its annual bug bounty payout and leads the industry in average bounty amount.

“We are working hard to scale the program during its dramatic growth, and we will continue to offer top rewards to security researchers,” Krstić continued. But despite Apple’s year-on-year increase in total bounty payouts, the company lags far behind rivals Microsoft and Google—which paid out totals of $13.6 million and $6.7 million, respectively, in their most recent annual reports, as compared to Apple’s $3.7 million.
