At Intel Architecture Day 2020, most of the focus and buzz surrounded the upcoming Tiger Lake 10nm laptop CPUs—but Intel also announced advancements in their Xe GPU technology, strategy, and planning that could shake up the industry in the next couple of years.

Integrated Xe graphics are likely to be one of the Tiger Lake laptop CPU’s best features. Although we don’t have officially sanctioned test results yet, let alone third-party tests, some leaked benchmarks show Tiger Lake’s integrated graphics beating the Vega 11 chipset in Ryzen 4000 mobile by a sizable 35-percent margin.

Assuming these leaked benchmarks pan out in the real world, they’ll be a much-needed shot in the arm for Intel’s flagging reputation in the laptop space. But there’s more to Xe than that.

A new challenger appears

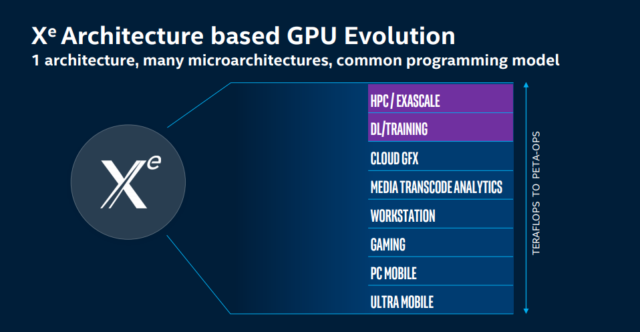

It has been a long time since any third party really challenged the two-party lock on high-end graphics cards—for roughly 20 years, your only realistic high-performance GPU choices have been Nvidia or Radeon chipsets. We first got wind of Intel’s plans to change that in 2019—but at the time, Intel was only really talking about its upcoming Xe GPU architecture in Ponte Vecchio, a product aimed at HPC supercomputing and datacenter use.

The company wasn’t really ready to talk about it then, but we spotted a slide in Intel’s Supercomputing 2019 deck that mentioned plans to expand Xe architecture into workstation, gaming, and laptop product lines. We still haven’t seen a desktop gaming card from Intel yet—but Xe has replaced both the old UHD line and its more-capable Iris+ replacement, and Intel’s a lot more willing to talk about near-future expansion now than it was last year.

When we asked Intel executives about that “gaming” slide in 2019, they seemed pretty noncommittal about it. When we asked again at Architecture Day 2020, the shyness was gone. Intel still doesn’t have a date for a desktop gaming (Xe HPG) card, but its executives expressed confidence in “market leading performance”—including onboard hardware raytracing—in that segment soon.

A closer look at Xe LP

-

If you read our Tiger Lake CPU coverage, this graph should look familiar—Xe LP integrated graphics get the same increase in voltage range and frequency efficiency from Intel’s newly improved FinFET and SuperMIM components under the hood.

-

Parallelism is key to GPU performance. This Xe LP GPU’s 96 Execution Units can produce 1,536 floating point operations, 48 texels, and 24 pixels per clock cycle.

-

Inside each Xe LP Execution Unit, there is an eight-wide floating point / integer arithmetic logic unit, and two-wide extended math ALU. EUs are thread-controlled in pairs.

-

The Xe LP integrated GPU has up to 16MiB of its own L3 cache—not shared with the CPU!—and an L1 data cache associated with each 16-EU subslice.

-

Xe LP is designed to be optimally efficient across a wide range of datatypes—dropping precision from 32 bits to 16 doubles the ops per clock; dropping to 8-bit double ops per clock again.

-

Xe LP’s media engine is designed for high performance environments, including 8K video at 60FPS.

-

Xe LP’s display engine is designed for multiple high-performance video output interfaces, at high resolutions and framerates.

If you followed our earlier coverage of Tiger Lake’s architecture, the first graph in the gallery should look very familiar. The Xe LP GPU enjoys the same benefits from Intel’s redesigned FinFET transistors and SuperMIM capacitors that the Tiger Lake CPU does. Specifically, that means stability across a greater range of voltages and a higher frequency uplift across the board, as compared to Gen11 (Ice Lake Iris+) GPUs.

With greater dynamic range for voltage, Xe LP can operate at significantly lower power than Iris+ could—and it can also scale to higher frequencies. The increased frequency uplift means higher frequencies at the same voltages Iris+ could manage, as well. It’s difficult to overstate the importance of this curve, which impacts power efficiency and performance on not just some but all workloads.

The improvements don’t end with voltage and frequency uplift, however. The high-end Xe LP features 96 execution units (comparing to Iris+ G7’s 64), and each of those execution units has FP/INT Arithmetic Logic Units twice as wide as Iris+ G7’s. Add a new L1 data cache for each 16 EU subslice, and an increase in L3 cache from 3MiB to 16MiB, and you can begin to get an idea of just how large an improvement Xe LP really is.

The 96-EU version of Xe LP is rated for 50-percent more 32-bit Floating Point Operations (FLOPS) per clock cycle than Iris+ G7 was and operates at higher frequencies, to boot. This conforms pretty well with the leaked Time Spy GPU benchmarks we referenced earlier—the i7-1165G7 achieved a Time Spy GPU score of 1,482 to i7-1065G7’s 806 (and Ryzen 7 4700U’s 1,093).

Improving buy-in with OneAPI

One of the biggest business keys to success in the GPU market is lowering costs and increasing revenue by appealing to multiple markets. The first part of Intel’s strategy for wide appeal and low manufacturing and design costs for Xe is scalability—rather than having entirely separate designs for laptop parts, desktop parts, and datacenter parts, they intend for Xe to scale relatively simply by adding more subslices with more EUs as the SKUs move upmarket.

There’s another key differentiator Intel needs to really break into the market in a big way. AMD’s Radeon line suffers from the fact that no matter how appealing they might be to gamers, they leave AI practitioners cold. This isn’t necessarily because Radeon GPUs couldn’t be used for AI calculations—the problem is simpler; there’s an entire ecosystem full of libraries and models designed specifically for Nvidia’s CUDA architecture, and no other.

It seems unlikely that a competing deep-learning GPU architecture, requiring massive code re-writing, could succeed unless it offers something much more tantalizing than slightly cheaper or slightly more powerful hardware. Intel’s answer is to offer a “write once, run anywhere” environment instead—specifically, the OneAPI framework, which is expected to hit production release status later this year.

Many people expect that all “serious” AI/deep-learning workloads will run on GPUs, which generally offer massively higher throughput than CPUs—even CPUs with Intel’s AVX-512 “Deep Learning Boost” instruction set—possibly can. In the datacenter, where it’s easy to order whatever configuration you like with little in the way of space, power, or heating constraints, this is at least close to true.

But when it comes to inference workloads, GPU execution isn’t always the best answer. While the GPU’s massively parallel architecture offers potentially higher throughput than a CPU can, the latency involved in setting up and tearing down short workloads can frequently make the CPU an acceptable—or even superior—alternative.

An increasing amount of inference isn’t done in the datacenter at all—it’s done at the edge, where power, space, heat, and cost constraints can frequently push GPUs out of the running. The problem here is that you can’t easily port code written for Nvidia CUDA to an x86 CPU—so a developer needs to make hard choices about what architectures to plan for and support, and those choices impact code maintainability as well as performance down the road.

Although Intel’s OneAPI framework is truly open, and Intel invites hardware developers to write their own libraries for non-Intel parts, Xe graphics are obviously a first-class citizen there—as are Intel CPUs. The siren call of deep learning libraries written once, and maintained once, to run on dedicated GPUs, integrated GPUs, and x86 CPUs may be enough to attract serious AI dev interest in Xe graphics, where simply competing on performance would not.

Conclusions

As always, it’s a good idea to maintain some healthy skepticism when vendors make claims about unreleased hardware. With that said, we’ve seen enough detail from Intel to make us sit up and pay attention on the GPU front, particularly with the (strategically?) leaked Xe LP benchmarks to back up their claims so far.

We believe that the biggest thing to pay attention to here is Intel’s holistic strategy—Intel executives have been telling us for a few years now that the company is no longer a “CPU company,” and it invests as heavily in software as it does in hardware. In a world where it’s easier to buy more hardware than hire (and manage) more developers, this strikes us as a shrewd strategy.

High-quality drivers have long been a trademark of Intel’s integrated graphics—while the gaming might not have been first-rate on UHD graphics, the user experience overwhelmingly has been, with “just works” expectations across all platforms. If Intel succeeds in expanding this “it just works” expectation to deep-learning development, with OneAPI, we think it’s got a real shot at breaking Nvidia’s current lock on the deep learning GPU market.

In the meantime, we’re looking very much forward to seeing Xe LP graphics debut in the real world, when Tiger Lake launches in September.

https://arstechnica.com/?p=1699348