For people with limited use of their limbs, speech recognition can be critical to operating a computer. But for many, the same problems that limit limb motion also affect the muscles that produce speech. That can make any form of communication a challenge, as physicist Stephen Hawking famously demonstrated. Ideally, we’d find a way to get upstream of any physical activity and translate nerve impulses directly into speech.

Brain-computer interfaces were making impressive advances even before Elon Musk decided to get involved, but brain-to-text hasn’t been one of their successes. We’ve been able to decode speech from brain activity for about a decade, but the process has remained slow and error-prone. Now, some researchers at the University of California, San Francisco, are suggesting that the problem might be that we weren’t thinking about the challenge in terms of the big-picture process of speaking. And they have a brain-to-text system to back them up.

Lost in translation

Speech is a complicated process, and it’s not obvious which point in that process is the best place to start. At some point, your brain decides on the meaning it wants to convey, although that often gets revised as the process continues. Then word choices have to be made, although once mastered, speech doesn’t require conscious thought; even some word choices, like whether to use an article and which one, can be automatic. Once the words are chosen, the brain has to coordinate groups of muscles to actually produce the appropriate sounds.

Beyond that, there’s the question of what exactly to recognize. Individual units of sound are built into words, and words are built into sentences. Both are subject to accents, mispronunciations, and other audible variation. So which level should a system focus on understanding?

The researchers behind the new work were inspired by the ever-improving abilities of machine translation systems. These tend to work at the sentence level, which probably helps them identify ambiguous words from the context and inferred meaning of the sentence.

Typically, these systems encode written text into an intermediate representation and then decode that representation to produce the words of the output sentence. The researchers recognized that the intermediate representation doesn’t have to come from processing text. Instead, they decided to derive it from neural activity.

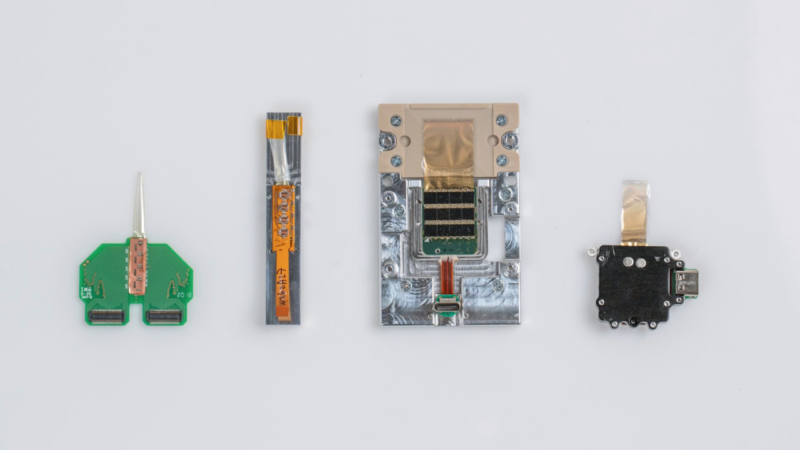

In this case, they worked with four people who had electrodes implanted to monitor their seizures; the electrodes happened to sit in parts of the brain involved in speech. The participants were asked to read aloud a set of 50 sentences, containing 250 unique words in total, while the implants recorded their neural activity. Some participants also read additional sets of sentences, but this first set provided the primary experimental data.

The neural recordings, along with audio recordings of the actual speech, were then fed into a recurrent neural network, which, after training, compressed them into an intermediate representation that captured their key features. That representation was then passed to a second neural network, which attempted to reproduce the full text of the spoken sentence.
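To make the two-stage design concrete, here is a minimal, untrained sketch of an encoder-decoder of the general kind described, in plain Python. It is not the researchers’ model: the class name `ToyEncoderDecoder`, the single-layer tanh recurrences, the layer sizes, and the five-word vocabulary are all illustrative assumptions, and the audio side of the training is left out entirely. The encoder folds a sequence of multichannel “frames” of neural data into one state vector (the intermediate representation); the decoder unrolls that state into words, one per step.

```python
import math
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows, cols, rng, scale=0.3):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class ToyEncoderDecoder:
    """Hypothetical, untrained encoder-decoder that only illustrates the data flow."""

    def __init__(self, n_channels, hidden, vocab, seed=0):
        rng = random.Random(seed)
        self.vocab = vocab
        self.W_in = rand_matrix(hidden, n_channels, rng)   # neural frame -> hidden
        self.W_enc = rand_matrix(hidden, hidden, rng)      # encoder recurrence
        self.W_dec = rand_matrix(hidden, hidden, rng)      # decoder recurrence
        self.W_emb = rand_matrix(hidden, len(vocab), rng)  # previous word -> hidden
        self.W_out = rand_matrix(len(vocab), hidden, rng)  # hidden -> word scores

    def encode(self, frames):
        """Fold a sequence of multichannel frames into one state vector."""
        h = [0.0] * len(self.W_enc)
        for x in frames:
            h = [math.tanh(a + b) for a, b in zip(matvec(self.W_in, x), matvec(self.W_enc, h))]
        return h

    def decode(self, h, max_words=10):
        """Greedily emit the highest-scoring word each step until '<eos>' or max_words."""
        words = []
        prev = [0.0] * len(self.vocab)  # one-hot encoding of the previous word
        for _ in range(max_words):
            h = [math.tanh(a + b) for a, b in zip(matvec(self.W_dec, h), matvec(self.W_emb, prev))]
            scores = matvec(self.W_out, h)
            idx = max(range(len(scores)), key=scores.__getitem__)
            if self.vocab[idx] == "<eos>":
                break
            words.append(self.vocab[idx])
            prev = [0.0] * len(self.vocab)
            prev[idx] = 1.0
        return words


vocab = ["<eos>", "the", "boy", "ran", "fast"]
model = ToyEncoderDecoder(n_channels=8, hidden=16, vocab=vocab)
rng = random.Random(1)
frames = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(50)]  # 50 fake time steps
state = model.encode(frames)  # the "intermediate representation"
words = model.decode(state)   # arbitrary words, since nothing has been trained
print(len(state), words)
```

The point of the split is modularity: the decoder only ever sees the state vector, so in principle anything that produces a compatible vector could feed it.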

How’d it work?

The primary limitation here is the very small set of sentences available for training: even the participant with the most recorded speech spoke for less than 40 minutes. The data were so limited that the researchers worried the system might simply learn to identify sentences by how long each one took to say. And this did cause some problems: some of the system’s errors involved wholesale replacement of the spoken sentence with the words of a different sentence from the training set.
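The worry about timing can be illustrated with a deliberately naive baseline that guesses a sentence from its duration alone. The function name `duration_baseline`, the sentences, and the durations below are invented for illustration. Because it can only return a sentence it has already seen, its mistakes are always whole-sentence substitutions, which is the error pattern described above; comparing a real decoder against something like this is one plausible way to check that it has learned more than timing.

```python
def duration_baseline(train, test_duration):
    """Hypothetical baseline: guess a sentence from its spoken duration alone.

    train: list of (sentence_text, duration_seconds) pairs.
    Returns the training sentence whose duration is closest to test_duration,
    so the output is always a sentence that already appeared in training.
    """
    return min(train, key=lambda pair: abs(pair[1] - test_duration))[0]


# Invented example data, not from the study.
train = [
    ("the boy ran", 1.2),
    ("a tall woman stood by the door", 2.6),
    ("those thieves stole thirty jewels", 2.1),
]
print(duration_baseline(train, 2.2))  # prints: those thieves stole thirty jewels
```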

Still, apart from those errors, the system did quite well given its limited training. The authors measured performance with the “word error rate,” which counts the minimum number of word insertions, deletions, and substitutions needed to transform the decoded sentence into the one that was actually spoken, divided by the number of words spoken. For two of the participants, once the system had been trained on the full training set, its word error rate was below eight percent, comparable to the error rate of professional human transcribers.
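Word error rate is the standard edit-distance metric from speech recognition, WER = (S + D + I) / N, where S, D, and I are substitutions, deletions, and insertions and N is the number of words in the reference (spoken) sentence. Here is a short sketch using the classic dynamic-programming Levenshtein distance over words; the function name is our own, and it assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] = edits needed to align the first i reference words with the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # reference word r has no counterpart (deletion)
                curr[j - 1] + 1,         # extra hypothesis word h (insertion)
                prev[j - 1] + (r != h),  # substitution, free when the words match
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the boy ran fast", "the toy ran fast"))  # 0.25 (one substitution)
print(word_error_rate("the boy ran fast", "the boy ran"))       # 0.25 (one deletion)
```

Note that the rate is normalized by the reference length, so it can exceed 1.0 when the decoder adds many spurious words.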

To learn more about what was going on, the researchers systematically disabled parts of the system. This confirmed that the neural representation was critical to the system’s success. Disabling the audio-processing portion of the system raised error rates, but they still fell within a range considered usable. That’s important for potential applications, since the people who would benefit include those who cannot speak at all.

Disabling different parts of the electrode input confirmed that the areas the system relied on most were involved in speech production and processing. Among them, a major contribution came from a brain area that monitors the sound of a person’s own voice, providing feedback on whether what was spoken matched what the speaker intended.
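The logic of this kind of lesion study can be sketched as a small harness: silence one group of electrodes at a time, rerun the decoder, and record how much the mean error rises. Everything here is an assumption for illustration: zeroing channels is just one way to “disable” an input, and `ablate_groups`, `toy_decode`, the region names, and the data are hypothetical, not the study’s procedure.

```python
def ablate_groups(decode, trials, groups, error_fn):
    """Zero out one electrode group at a time and report the rise in mean error.

    decode:   hypothetical function mapping frames (lists of channel values) to text
    trials:   list of (frames, reference_text) pairs
    groups:   dict mapping a region name to the channel indices it covers
    error_fn: function taking (reference, hypothesis), e.g. a word error rate
    """
    def mean_error(mask):
        total = 0.0
        for frames, ref in trials:
            masked = [[0.0 if c in mask else v for c, v in enumerate(frame)] for frame in frames]
            total += error_fn(ref, decode(masked))
        return total / len(trials)

    baseline = mean_error(set())
    return {name: mean_error(set(chans)) - baseline for name, chans in groups.items()}


def toy_decode(frames):
    # Hypothetical decoder that only ever looks at channel 0.
    mean0 = sum(f[0] for f in frames) / len(frames)
    return "yes" if mean0 > 0 else "no"


def exact_match_error(ref, hyp):
    return 0.0 if ref == hyp else 1.0


trials = [
    ([[1.0, 5.0], [0.5, -2.0]], "yes"),
    ([[-1.0, 3.0], [-0.2, 1.0]], "no"),
]
groups = {"region_A": [0], "region_B": [1]}
print(ablate_groups(toy_decode, trials, groups, exact_match_error))
# {'region_A': 0.5, 'region_B': 0.0}
```

A large increase flags a region the decoder depends on; a near-zero change suggests the region contributes little.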

Transfer tech

Finally, the researchers tested various forms of transfer learning. For example, one of the participants spoke an additional set of sentences that weren’t used in testing. Also training the system on those sentences cut its error rate by 30 percent. Similarly, training the system on data from two participants improved its performance for both of them. These results indicate that the system really was managing to extract features of the sentences themselves.
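The core idea of transfer learning, reusing parameters learned from plentiful data as a starting point for a data-poor case, can be shown with a deliberately tiny example. This is not the researchers’ procedure: a one-parameter linear model stands in for the network, both toy “participants” share an invented mapping `TRUE_W`, and all data are synthetic. With the same small training budget, the warm-started model ends up far closer to the shared mapping than one trained from scratch.

```python
def fit(xs, ys, w=0.0, lr=0.01, steps=100):
    """Gradient descent on mean squared error for the one-parameter model y = w * x."""
    n = len(xs)
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


TRUE_W = 3.0  # hypothetical mapping shared by both toy "participants"

# Participant A: plenty of synthetic data.
xs_a = [0.1 * i for i in range(1, 51)]
ys_a = [TRUE_W * x for x in xs_a]

# Participant B: only two slightly offset synthetic examples.
xs_b = [0.5, 1.0]
ys_b = [TRUE_W * x + 0.1 for x in xs_b]

w_pre = fit(xs_a, ys_a, steps=200)              # "pretrain" on participant A
w_transfer = fit(xs_b, ys_b, w=w_pre, steps=5)  # short fine-tune on B, warm start
w_scratch = fit(xs_b, ys_b, w=0.0, steps=5)     # same short budget, cold start
print(round(w_transfer, 3), round(w_scratch, 3))
```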

The transfer learning results have two important implications. First, they suggest that the system’s modular design could allow it to be trained on intermediate representations derived from text, rather than requiring neural recordings at all times. That would make it more generally useful, although it might initially increase the error rate.

Second, they suggest that a significant portion of training could take place with people other than the individual who will ultimately use a given system. That would be critical for people who have lost the ability to vocalize, and it would significantly reduce the training time any individual needs to put in.

Obviously, none of this will work until getting implants like these is safe and routine. But there’s a chicken-and-egg problem there: there’s no justification for giving people implants without a demonstration of potential benefits. So even if decades pass before a system like this is practical, simply demonstrating that it could be useful can help drive the field forward.

Nature Neuroscience, 2020. DOI: 10.1038/s41593-020-0608-8

https://arstechnica.com/?p=1664423