One of the near-term (but somewhat irrelevant) goals of quantum computing is something called quantum supremacy. Quantum supremacy is not, sadly, a cage fight between proponents of competing interpretations of quantum mechanics. It is a demonstration of a quantum computer performing a computation, no matter how trivial, that cannot be performed on a classical computer in any reasonable amount of time.

The key question: what computation should be performed? A team of researchers is suggesting that computing the state of a random quantum circuit that exhibits chaotic behavior would be perfect for the task. Let’s delve into why that might be.

Getting all superior

The idea of quantum supremacy goes a bit like this. Yes, we have all of these different versions of quantum computing. And yes, they all seem to behave how we expect a quantum computer to behave. But they are all remarkably slow and can easily be beaten by classical computers.

From a technological point of view, a slow quantum computer makes sense. Current quantum computers don’t have much capacity, so they can’t do anything that a classical computer can’t.

That’s one problem, but it’s not the most important problem. The technology issues mask the hidden weaknesses in the mathematical proofs that quantum computing is built on. Most proofs make assumptions about the properties of qubits (a qubit is a bit of quantum information). “Consider n qubits, all optimally entangled and fully coherent” might be a good summary of the beginning statement of each proof. There is no need, in this article, to get into what entanglement and coherence are or even how a qubit is constructed. Suffice it to say that none of these conditions has been fully satisfied in the real world.

While a computer might have n qubits, they are probably not all perfectly entangled or fully coherent, and yes, errors will creep in and have to be corrected for.

The upshot is that it is not yet clear how well the proofs (which are good) apply to a practical implementation of a quantum computer. This leads us to the idea of quantum supremacy. The goal is to find some simple, perhaps meaningless, algorithm that the best classical computer cannot run in a reasonable time. Then run that algorithm successfully on a quantum computer. Bingo! Proofs be damned, we know that quantum computers are faster.

Finding the perfect algorithm

This shifts the problem to choosing an algorithm. Scientists need to be reasonably sure that it cannot be run efficiently on a classical computer. But they also need to expect that it can be run efficiently on a quantum computer. Funnily enough, algorithms that model quantum systems may be the best choice: a quantum computer is a quantum system and may be able to model a different quantum system efficiently. However, modeling quantum systems on classical computers is notoriously difficult.

Many choices fit this category. For instance, quantum chemistry is a proper computational hog. The advantage of problems like quantum chemistry is that the best classical algorithms are really good: many hours have been invested in finding fast solutions to bottlenecks.

The problem is that any quantum computer has to have the flexibility to be programmed to solve the problem. Now, you have two problems: designing a quantum computer flexible enough to implement the relevant algorithm, and making that computer big enough to beat a classical computer. Much better to go with something simple.

From chaos, an answer emerges?

It turns out that quantum chaos may be the answer. Chaotic systems are notoriously difficult to model. The problem is not that the equations are intricate but that the solutions are completely different for even minutely different starting conditions. For example, a class of equations that is used to predict population growth and decay might be used to predict the number of birds after a certain time, given a starting population. But, if the starting population is changed even a tiny bit, the calculated end population may be vastly different.
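The article doesn't say which population equation it has in mind. The logistic map, x → r·x·(1 − x), is the standard textbook example, so the sketch below assumes it. The growth rate r = 4, the starting population 0.2 (as a fraction of the maximum), and the 0.1 "visibly different" threshold are illustrative choices. The sketch starts two populations that differ by one part in ten billion and reports the step at which they stop agreeing.

```python
from typing import List, Optional


def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 60) -> List[float]:
    """Iterate the logistic map x -> r*x*(1-x), a classic toy model of population size."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence_step(x0: float, delta: float, r: float = 4.0,
                    steps: int = 60, threshold: float = 0.1) -> Optional[int]:
    """First step at which populations started at x0 and x0 + delta differ by > threshold."""
    a = logistic_trajectory(x0, r, steps)
    b = logistic_trajectory(x0 + delta, r, steps)
    for n, (xa, xb) in enumerate(zip(a, b)):
        if abs(xa - xb) > threshold:
            return n
    return None  # the two runs never visibly separated within `steps`


if __name__ == "__main__":
    print(divergence_step(0.2, 1e-10))  # tiny difference: diverges after a few dozen steps
    print(divergence_step(0.2, 1e-5))   # larger difference: diverges sooner
```

With r = 4 the gap roughly doubles each step. A starting difference of 10⁻¹⁰ therefore becomes visible after roughly 30 iterations, while a difference of 10⁻⁵ diverges much sooner.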

Picture a chaotic system in action, with each dot on a plot representing a solution to an equation. The starting points are very nearly identical. But, as time progresses, the different starting points lead to very different end points. In other words, long-term predictions are impossible.

The problem of making predictions in chaotic systems cannot be defeated, but its onset can be delayed. If the number of calculation steps between the start and the end time is increased, and the number of bits used to represent numbers is increased, then the solution trajectories remain predictable for longer. In other words, the further into the future you want to accurately predict a chaotic system (like the weather), the more computer power you need to throw at the problem.
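Python's standard `decimal` module makes the precision half of that claim easy to demonstrate, because it lets you set how many significant digits every arithmetic operation keeps. The sketch below again uses the logistic map as an assumed stand-in for a chaotic system. A discrete map has no time step to shrink, so it only illustrates the number-of-bits part. It compares a low-precision run against a 300-digit reference and reports when the two disagree by more than 0.1.

```python
from decimal import Decimal, localcontext
from typing import List, Optional


def logistic_decimal(x0: str, digits: int, steps: int, r: str = "4") -> List[Decimal]:
    """Iterate x -> r*x*(1-x), rounding every operation to `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec = digits
        x, rate = Decimal(x0), Decimal(r)  # construction is exact; arithmetic is rounded
        xs = [x]
        for _ in range(steps):
            x = rate * x * (1 - x)
            xs.append(x)
    return xs


def steps_until_divergence(digits: int, reference_digits: int = 300,
                           steps: int = 400, threshold: str = "0.1") -> Optional[int]:
    """First step at which a `digits`-precision run differs from a high-precision reference."""
    reference = logistic_decimal("0.2", reference_digits, steps)
    low = logistic_decimal("0.2", digits, steps)
    limit = Decimal(threshold)
    for n, (a, b) in enumerate(zip(reference, low)):
        if abs(a - b) > limit:
            return n
    return None


if __name__ == "__main__":
    for digits in (8, 16, 32, 64):
        print(digits, "digits ->", steps_until_divergence(digits), "steps")
```

Because the error doubles each step, each extra decimal digit buys roughly log₂10 ≈ 3.3 more steps of agreement. Doubling the precision therefore roughly doubles how far ahead the prediction holds.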

It is also possible to have chaotic systems that are quantum mechanical. This hits classical computers with a double whammy. Not only do we have to calculate the change in the system state to high accuracy and in tiny time steps, we also have to maintain all the quantum properties.

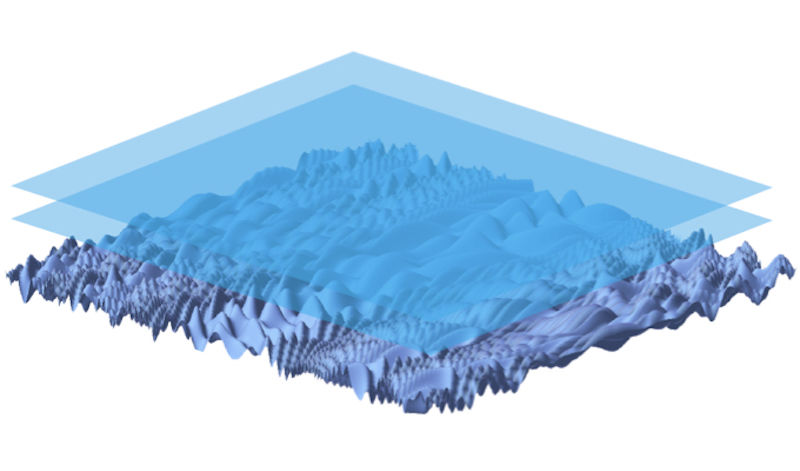

According to the researchers involved in making this suggestion, a grid of qubits, coupled correctly (i.e., they perform specific quantum logic operations on their neighbors in sequence), will exhibit quantum chaos. This makes the idea very attractive, because grids of qubits are something most researchers are familiar with.
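Here is a small state-vector simulation of a random circuit on a 3 × 3 grid of coupled qubits. It is a simplified sketch, not the researchers' exact protocol. The gate sequence is an assumption: Hadamards to start, then alternating layers of CZ gates on neighboring pairs, each followed by a random √X, √Y, or T gate on every qubit. The chaos test relies on the fact that chaotic quantum circuits produce output probabilities following the Porter-Thomas (exponential) distribution. For that distribution N·Σp² is about 2, while a uniform distribution gives exactly 1.

```python
import numpy as np

# Single-qubit gates; this particular gate set is an assumption for illustration.
HADAMARD = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
SQRT_X = 0.5 * np.array([[1 + 1j, 1 - 1j], [1 - 1j, 1 + 1j]])
SQRT_Y = 0.5 * np.array([[1 + 1j, -1 - 1j], [1 + 1j, 1 + 1j]])
T_GATE = np.array([[1, 0], [0, np.exp(1j * np.pi / 4)]])
RANDOM_GATES = [SQRT_X, SQRT_Y, T_GATE]


def grid_patterns(rows, cols):
    """Four layers of nearest-neighbour pairs; qubit (r, c) has index r * cols + c."""
    layers = []
    for offset in (0, 1):  # horizontal pairs starting at even / odd columns
        layers.append([(r * cols + c, r * cols + c + 1)
                       for r in range(rows) for c in range(offset, cols - 1, 2)])
    for offset in (0, 1):  # vertical pairs starting at even / odd rows
        layers.append([(r * cols + c, (r + 1) * cols + c)
                       for r in range(offset, rows - 1, 2) for c in range(cols)])
    return layers


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q of a state stored as a (2, ..., 2) tensor."""
    state = np.tensordot(gate, state, axes=([1], [q]))  # new axis 0 is the gate output
    return np.moveaxis(state, 0, q)                      # move it back to position q


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of every amplitude where both qubits are 1."""
    idx = [slice(None)] * state.ndim
    idx[q1] = idx[q2] = 1
    state[tuple(idx)] *= -1
    return state


def random_grid_circuit(rows, cols, depth, seed=0):
    """Return the output probabilities of a random circuit on a rows x cols grid."""
    rng = np.random.default_rng(seed)
    n = rows * cols
    state = np.zeros((2,) * n, dtype=complex)
    state[(0,) * n] = 1.0
    for q in range(n):
        state = apply_1q(state, HADAMARD, q)
    layers = grid_patterns(rows, cols)
    for cycle in range(depth):
        for q1, q2 in layers[cycle % len(layers)]:
            state = apply_cz(state, q1, q2)
        for q in range(n):
            gate = RANDOM_GATES[rng.integers(len(RANDOM_GATES))]
            state = apply_1q(state, gate, q)
    return np.abs(state.reshape(-1)) ** 2


def porter_thomas_statistic(probs):
    """N * sum(p^2): exactly 1 for a uniform distribution, about 2 for Porter-Thomas."""
    return len(probs) * float(np.sum(probs ** 2))


if __name__ == "__main__":
    for depth in (0, 4, 20):
        p = random_grid_circuit(3, 3, depth)
        print(depth, round(porter_thomas_statistic(p), 3))
```

Depth 0 is just the starting Hadamards, so the output is uniform and the statistic is 1. As the circuit gets deeper, the amplitudes scramble and the statistic should settle near 2.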

Chaos vs. noise

The researchers produced a model of the grid and examined how the difficulty of predicting the qubits’ states grew over time. They concluded that the amount of classical computer power required to predict the state of the quantum computer grows exponentially with a combination of grid size and number of operations (computational depth). Their prediction is that a grid containing 49 qubits that has a computational depth of 40 will be sufficient to demonstrate quantum supremacy. (Computational depth is the number of sequential rounds of gate operations applied to the qubits. How deep a real circuit can go is limited by how many operations can be performed before environmental noise randomizes the qubit states.)
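A back-of-the-envelope calculation shows why 49 qubits is a meaningful target. A brute-force classical simulation stores one complex amplitude for each of the 2ⁿ basis states of n qubits. The sketch assumes double-precision complex numbers (16 bytes each). It ignores depth and smarter simulation methods that trade memory for time, so it illustrates exponential growth rather than reproducing the researchers' cost model.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to hold all 2**n complex amplitudes (complex128 assumed: 16 bytes each)."""
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (30, 40, 49):
        gib = state_vector_bytes(n) / 2 ** 30
        print(f"{n} qubits: {gib:,.0f} GiB")
    # 30 qubits: 16 GiB; 40 qubits: 16,384 GiB; 49 qubits: 8,388,608 GiB (8 PiB)
```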

But there is a catch here. Although the quantum circuit is random in one sense, the output should not be random. So, the question is, how can we distinguish the output of a random and chaotic quantum circuit from noise? To do this, the researchers use the idea of correlations. If the circuit is really just producing noise, as opposed to carrying out an algorithm, then the entropy and energy of the circuit will be different. By measuring the energy of the qubits and comparing it to that expected from the average of randomly assigned qubit states, the circuit performance can be evaluated.

The power of the idea is that the imperfections of the quantum circuit can be (and are) included in the measurement. In other words, if I measure the error rate of the gates (or, more precisely, the fidelity) in my circuit, I can use those measurements to recognize the difference between chaos and noise.
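The benchmark in the Nature Physics paper is built on cross-entropy, and a toy version shows how a fidelity number falls out of it. The sketch makes two assumptions. First, the ideal output probabilities follow the Porter-Thomas distribution; they are generated synthetically here rather than from a real circuit. Second, an imperfect device returns an ideal sample with probability equal to its fidelity and a uniformly random bitstring otherwise. Let H be the average of −ln p_ideal over the observed samples and γ be Euler's constant. The cross-entropy difference ΔH = (ln N + γ) − H then comes out near 1 for a perfect device, near 0 for pure noise, and near the fidelity in between.

```python
import numpy as np

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def cross_entropy_difference(ideal_probs, samples):
    """Estimate Delta H = (ln N + gamma) - H, with H the mean of -ln p_ideal(x) over
    the observed samples x. About 1 for ideal sampling and about 0 for uniform noise,
    assuming the ideal probabilities follow the Porter-Thomas distribution."""
    n = len(ideal_probs)
    h0 = np.log(n) + EULER_GAMMA
    h = -np.mean(np.log(ideal_probs[samples]))
    return float(h0 - h)


def noisy_sampler(ideal_probs, fidelity, n_samples, rng):
    """Toy device: with probability `fidelity` draw from the ideal distribution,
    otherwise return a uniformly random bitstring index."""
    n = len(ideal_probs)
    ideal = rng.choice(n, size=n_samples, p=ideal_probs)
    noise = rng.integers(n, size=n_samples)
    keep = rng.random(n_samples) < fidelity
    return np.where(keep, ideal, noise)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n_states = 2 ** 12                   # outputs of 12 hypothetical qubits
    p = rng.exponential(size=n_states)   # synthetic Porter-Thomas probabilities
    p /= p.sum()
    for f in (1.0, 0.5, 0.0):
        samples = noisy_sampler(p, f, 50_000, rng)
        print(f, round(cross_entropy_difference(p, samples), 2))  # close to f
```

Pure noise scores near zero while a working device scores near its fidelity, so the measured ΔH separates the two even when the device is imperfect.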

In fact, the researchers show that this concept of entropy differences can also be used to identify when a circuit goes beyond the computational power of a classical model. And this is where their prediction of 49 qubits with a depth of 40 comes from. Essentially, they use the error rates from published quantum computers; given the known performance of classical models, they look for the size of the quantum system that cannot be modeled.

Are we there yet?

It seems that we are getting close to the champagne day of quantum supremacy. Fifty-odd qubits is pretty much where both Google (several researchers from Google are authors on this paper) and IBM are heading, while D-Wave has been there for a while.

Computational depth is another question. Some quantum computers have the gate fidelities (a measure of reliability) to reach the computational depth. However, those implementations are nowhere near 50 qubits, while the schemes that can scale to 50 qubits (those using superconducting loops as qubits) probably have another order of magnitude to go in gate fidelities.
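A crude estimate shows why an order of magnitude in gate fidelity matters so much at this scale. Assume, hypothetically, one operation per qubit per cycle. A 49-qubit, depth-40 circuit then applies 49 × 40 = 1,960 operations. If each one fails independently at the same error rate, the overall circuit fidelity is (1 − error)^1960. The error rates below are illustrative, not figures from the paper.

```python
def circuit_fidelity(error_per_op: float, n_qubits: int, depth: int) -> float:
    """Probability that no operation fails, assuming one independent operation
    per qubit per cycle (a hypothetical simplification)."""
    n_ops = n_qubits * depth
    return (1.0 - error_per_op) ** n_ops


if __name__ == "__main__":
    for err in (0.005, 0.0005):  # hypothetical error rates: 0.5% and 0.05%
        print(f"error {err:.2%}: fidelity ≈ {circuit_fidelity(err, 49, 40):.2g}")
```

At 0.5% error per operation the fidelity is around 5 × 10⁻⁵, which leaves very little signal to separate from noise. At 0.05% it is around 0.38.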

Although this bit of research is quite esoteric, it represents a reasonably important step. The specific prediction of qubit number and depth is not that important to me. The most important part of the paper is that it provides a general way to compare different quantum computers and different algorithms with their classical counterparts. That is very useful, considering that there is nothing close to a standard for quantum computing architecture.

Nature Physics, 2018, DOI: 10.1038/s41567-018-0124-x.

https://arstechnica.com/?p=1301573