SAN FRANCISCO—Valve Software’s famously “flat” structure means most of its game-making staffers have vague titles. One of the few exceptions is its Principal Experimental Psychologist, who presented a futuristic gaming vision at this year’s Game Developers Conference—in particular, he made a few peculiar admissions about how Valve might one day study your brain activity in the middle of a game and what the company might do with it.

Before speaking, Valve Software’s Mike Ambinder laid out a very loud disclaimer about GDC’s “vision” track of panels: “This is supposed to be speculative,” he said. “This is one possible direction things could go.” Even with that caveat in mind, Ambinder’s choice of details is interesting to sink our teeth into, especially coming from a company that seems to offer more speculation about the future of gaming than it does actual applications of it (i.e., new games).

The slot machine of your mind?

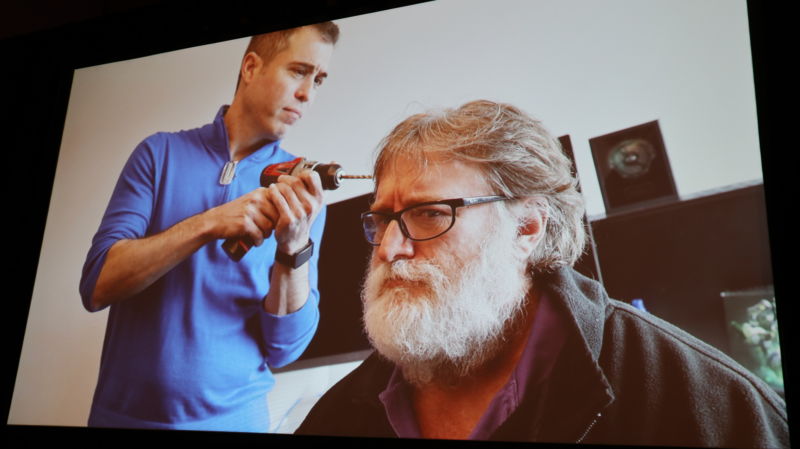

The above and below images of Ambinder goofing off with Valve co-founder Gabe Newell weren’t just for yuks: “Every talk I’ve given, this reliably gets a laugh. Think about that. What if we could elicit reliable reactions [from video games] and determine we were doing so?”

- Management, Valve style. (Credit: Sam Machkovech)
- A variety of existing headsets and mechanisms used to measure brainwave activity and synaptic responses.
- Ambinder shows one off.
- Newell tests another.
- Examples of existing, relatively affordable brainwave sensing systems.
- Valve’s dreams of how those signals could affect gameplay, in a confusing chart.

Ambinder compared a game player’s use of a controller to an average conversation. In his analogy, when you talk to a person, your words and gestures are equivalent to pressing controller buttons: loud and clear signals. But in terms of subtler signals like facial cues, “video games leave us out of the non-verbal part of a conversation,” he said.

As a result, games face interface limitations such as memory, Ambinder argues. A player may memorize anywhere from 20 to 100 combinations of mouse-and-keyboard commands, he says. That may seem like plenty for some games, “but that is still a limitation.” And the time it takes for a signal to travel from the brain to a finger averages around 100 milliseconds, he estimates. “What if we could shave as much as 20-30 milliseconds off that time?” he asked.

Existing hardware, if money weren’t a limit, could allow game makers to track everything from synaptic responses to “galvanic skin response,” from eye gaze to muscle tension and posture. Many of Ambinder’s suggestions for what a game maker might do with this data sound like heart-rate sensor experiments, such as adjusting difficulty based on a player’s feelings at a given moment. We’ve seen Valve (and other studios) talk about the gameplay-tweaking potential of eye-tracking in previous years.

But one suggestion in particular raised our alarms: adjusting virtual goodies in a game on the fly. “We can figure out what kinds of rewards you like, and the kinds you don’t,” Ambinder suggested, potentially based on the physiological responses a player might have to getting loot. He didn’t speak to the very severe privacy implications of this feedback loop, however, nor to the potential for abuse in having a game pump players full of loot-driven endorphins at the moment they might start getting bored. (Slot machine and loot box mechanics are already decried for artificially toying with player expectations to keep them hooked longer.)

“Make you like the Predator”

Other ideas, by contrast, sounded positive, if also absolutely insane. For instance, this level of connectivity could potentially help blind, deaf, and otherwise sense-deprived people perceive a video game in new ways. This would require transcranial magnetic stimulation (TMS), a particularly wild kind of treatment that is already being used to help sufferers of insomnia, PTSD, and other conditions.

“Can we make you see in infrared like the Predator? Give you access to spatial location data, or even echolocation? Could we add a sense? Improve your sense of touch? Help you notice new frequencies?” Ambinder continued. “Taste and smell things you’ve never tasted or smelled? Focus your attention? Stimulate certain areas of your brain to recruit neurons for other tasks? Help you hold more memory at once? Improve memory retrieval?”

Remember that “speculative” caveat from earlier? There you go. That particular hypothetical scenario would require TMS systems, and those are admittedly much further out as a possibility than the head-mounted, synapse-monitoring systems that Newell was seen wearing in the above photos.

Of course, the near-future possibilities of these tracking systems are also limited by the amount of head and skin contact required. Such connectivity would require players both to purchase a bulky headset and to choose to wear it. But Valve does have at least one path to getting players to adopt such a crazy scenario. After raising the question of feasibility, Ambinder gestured to a photo of himself sporting an HTC Vive VR headset. “Not everyone plays VR, but VR gives you semi-consistent contact with a source of brain activity,” he said. It’s an interesting thing for Ambinder to point out on Valve’s behalf as rumors of a Valve-produced VR headset mount.

Until this sort of system becomes more commonplace, Valve clearly has practical work to do: either create the hardware itself or wait for more game makers to buy into this ultra-connected theory that games would offer an “improved, qualitatively better player experience if developers had access to states, emotions, cognition, and decisions.” In the meantime, now, not later, might be the time to start talking about the privacy implications inherent in “games as a service” that read and respond to our brainwaves.

https://arstechnica.com/?p=1479021