Deepfakes have made a huge impact on the world of image, audio, and video editing, so why isn’t Adobe, corporate behemoth of the content world, getting more involved? Well, the short answer is that it is — but slowly and carefully. At the company’s annual Max conference today, it unveiled a prototype tool named Project Morpheus that demonstrates both the potential and problems of integrating deepfake techniques into its products.

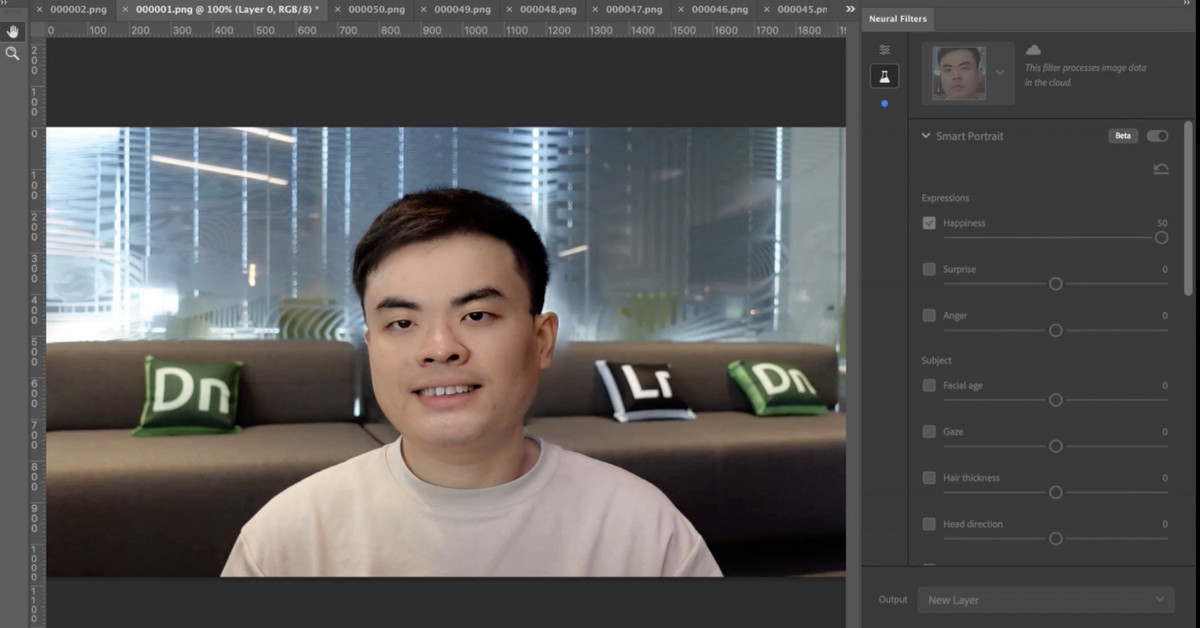

Project Morpheus is basically a video version of the company’s Neural Filters, introduced in Photoshop last year. These filters use machine learning to adjust a subject’s appearance, tweaking things like their age, hair color, and facial expression (to change a look of surprise into one of anger, for example). Morpheus brings all those same adjustments to video content while adding a few new filters, like the ability to change facial hair and glasses. Think of it as a character creation screen for humans.

The results are definitely not flawless and are very limited in scope compared with the wider world of deepfakes. You can only make small, pre-ordained tweaks to the appearance of people facing the camera, and can’t do things like face swaps, for example. But the quality will improve fast, and while the feature is just a prototype for now with no guarantee it will appear in Adobe software, it’s clearly something the company is investigating seriously.

What Project Morpheus also is, though, is a deepfake tool — which is potentially a problem. A big one. Because deepfakes and all that’s associated with them — from nonconsensual pornography to political propaganda — aren’t exactly good for business.

Now, given the looseness with which we define deepfakes these days, Adobe has arguably been making such tools for years. These include the aforementioned Neural Filters, as well as more functional tools like AI-assisted masking and segmentation. But Project Morpheus is obviously much more deepfakey than the company’s earlier efforts. It’s all about editing video footage of humans — in ways that many will likely find uncanny or manipulative.

Changing someone’s facial expression in a video, for example, might be used by a director to punch up a bad take, but it could also be used to create political propaganda — e.g. making a jailed dissident appear relaxed in court footage when they’re really being starved to death. It’s what policy wonks refer to as a “dual-use technology,” which is a snappy way of saying that the tech is “sometimes maybe good, sometimes maybe shit.”

This, no doubt, is why Adobe didn’t once use the word “deepfake” to describe the technology in any of the briefing materials it sent to The Verge. And when we asked why, the company didn’t answer directly but instead gave a lengthy statement about how seriously it takes the threats posed by deepfakes and what it’s doing about them.

Adobe’s efforts in these areas seem involved and sincere (they’re mostly focused on content authentication schemes), but they don’t mitigate a commercial problem facing the company: that the same deepfake tools that would be most useful to its customer base are those that are also potentially most destructive.

Take, for example, the ability to paste someone’s face onto someone else’s body — arguably the ur-deepfake application that started all this bother. You might want such a face swap for legitimate reasons, like licensing Bruce Willis’ likeness for a series of mobile ads in Russia. But you might also be creating nonconsensual pornography to harass, intimidate, or blackmail someone (by far the most common malicious application of this technology).

Regardless of your intent, if you want to create this sort of deepfake, you have plenty of options, none of which come from Adobe. You can hire a boutique deepfake content studio, wrangle with some open-source software, or, if you don’t mind your face swaps being limited to preapproved memes and GIFs, you can download an app. What you can’t do is fire up Adobe Premiere or After Effects. So will that change in the future?

It’s impossible to say for sure, but I think it’s definitely a possibility. After all, Adobe survived “Photoshopped” becoming shorthand for digitally edited images in general, often with negative connotations. And for better or worse, deepfakes are slowly losing their own negative associations as they’re adopted in more mainstream projects. Project Morpheus is a deepfake tool with some serious guardrails (you can only make prescribed changes and there’s no face-swapping, for example), but it shows that Adobe is determined to explore this territory, presumably while gauging reactions from the industry and public.

It’s fitting that as “deepfake” has replaced “Photoshopped” as the go-to accusation of fakery in the public sphere, Adobe is perhaps feeling left out. Project Morpheus suggests it may well catch up soon.

https://www.theverge.com/2021/10/27/22748508/adobe-deepfake-tool-max-project-morpheus