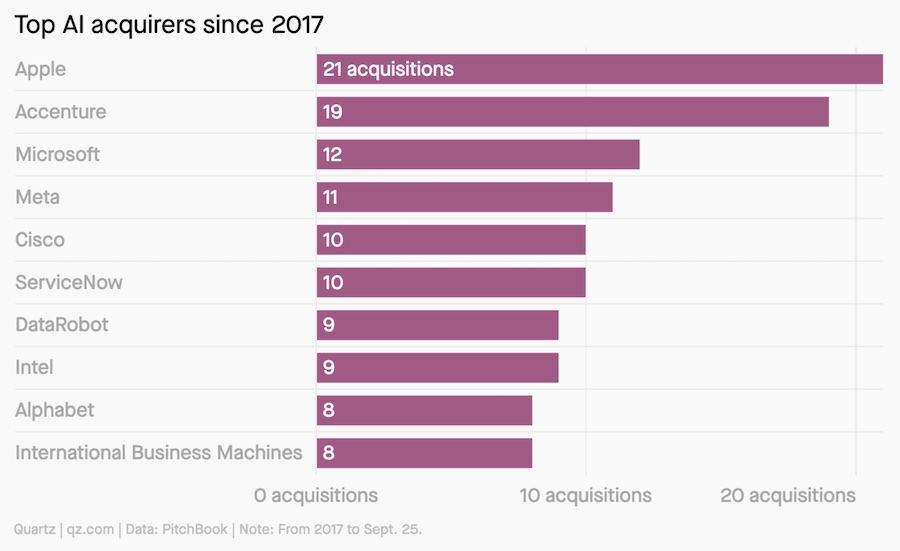

Over the last few months, it has been hard to get away from the AI headlines. Amazon, Microsoft, Meta, and Google have been everywhere, and we have seen a huge influx of capital into the AI industry as a whole. Companies that lack an AI business plan, or fail to mention AI on their earnings calls, often get noticed and punished for it. High-priced acquisitions have investors salivating. Just take a look at some of the top acquisitions of the last six years.

I started to get familiar with machine learning in 2015, right when the industry was starting to grow and data science was becoming its own field, separate and distinct from statistics and computer science. Universities quickly followed by building programs, corporations started investing in upgraded hardware such as GPUs, and now we have a whole industry well equipped to use machine learning models to build artificial intelligence.

As with many technology waves (Gartner calls them “Hype Cycles”), new ideas need to be put into practice and refined over time, and many of them eventually mature into long-term business strategies. Gartner estimates we are 2 to 10 years from getting there, which I think is fairly realistic.

As with past waves, AI has numerous subcomponents, and I believe security is a small portion of it. A quick internet search turns up plenty of articles on how AI will change the entire security industry, in areas such as threat detection, behavioral analysis, and natural language processing. I do believe these areas will improve, since we have already seen that AI can piece data together much faster and better than humans can, but I don’t think AI will fundamentally change how they function. Here are the two areas that I believe will change completely.

Automatic patching of vulnerabilities

For many CISOs, vulnerability management is the thorn in their side that never goes away. It’s an ongoing process that expands as the technology attack surface grows. But what if there were a way to automatically patch your systems as soon as a vulnerability was disclosed?

To explain further, I want to draw on an example from Defcon in 2016. For those who are not familiar, DARPA held the final event of its two-year contest, the Cyber Grand Challenge, there. It was a historic event that pitted machines against machines: autonomous systems had to find and exploit previously unknown vulnerabilities modeled on real-world bugs, while patching and defending their own systems at the same time. Scoring was simple; each round, teams were judged on the following:

- Availability – Is your program still working and available?

- Defense (Security) – Did anyone exploit your program?

- Offense (Evaluation) – Did you get into someone else’s program?

The results were quite impressive, and you can watch a full analysis on YouTube. However, despite the groundbreaking concepts, the momentum seemed to fade shortly afterward. Voting machines and autonomous driving took over the stage in the following years, and we haven’t seen much traction in the industry since. The winner, ForAllSecure, did raise a Series B round in 2022, but the overall concepts and technologies seem to have been poorly shared with industry and rarely implemented broadly in either the federal or private sector.

While the concept was novel, the technology was quite nascent. Fast forward to today: DARPA has announced it is running a new challenge at Defcon in 2024 and 2025, this time in partnership with Anthropic, Google, Microsoft, and OpenAI, with over $18 million in prizes. With eight years of improvements since 2016, the results are bound to be stunning. Given the sheer number of open source projects and the growing surface area of third-party software, I am a strong believer that the only way to fix vulnerability management is dynamic, automated patching rather than traditional human-led patching. Registration is open, and I’m excited to see what’s in store next year.

The SOC

The Security Operations Center, also known as the SOC, is one of the most labor-intensive units within the security umbrella. The SOC needs to be always on, fully staffed, equipped with playbooks, and ready for any type of security incident an organization might face. This means you might need a global workforce, shift work, or an outsourced provider that can deliver complete coverage for your needs. Keeping morale high within the SOC is another challenge, and burnout is common.

There can be hundreds of data sources connected to a SOC, including endpoint logs, network logs, intrusion detection system alerts, application logs, third-party vendor logs, identity logs, security access logs, email logs, and more. A reliable timeline is the best way to stitch this data together, but analysts often need to pull and verify logs manually, especially when a source is not easily integrated into existing systems. SOC playbooks usually take the form of a decision tree, in which each branch leads either to a resolution or to an escalation. The work can be tedious and means interacting with many forms of data to make informed decisions about the next step of the process. Parsing logs and indicators and building timelines can take hours or even days.
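To make the two mechanics in the paragraph above concrete, here is a minimal Python sketch of stitching log sources into one timeline and walking a tree-shaped playbook to a resolve-or-escalate outcome. It assumes every source has already been parsed into a common event shape with UTC timestamps, which is usually the hard part in practice. The `Event`, `Step`, `build_timeline`, and `run_playbook` names and the suspicious-login playbook are hypothetical illustrations, not the API of any SOC product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Union


# Hypothetical normalized event: each source is assumed to be mapped to UTC time, source, message.
@dataclass(frozen=True)
class Event:
    ts: datetime
    source: str
    message: str


def build_timeline(*sources: list[Event]) -> list[Event]:
    """Merge events from several log sources into one chronologically ordered timeline."""
    return sorted((e for src in sources for e in src), key=lambda e: e.ts)


# A playbook step asks a yes/no question about the alert and branches on the answer.
@dataclass
class Step:
    question: Callable[[dict], bool]
    if_yes: "Node"
    if_no: "Node"


# A node is either another step or a string leaf naming the outcome ("resolve" or "escalate").
Node = Union[Step, str]


def run_playbook(node: Node, alert: dict) -> str:
    """Follow branches from the root until a leaf outcome is reached."""
    while isinstance(node, Step):
        node = node.if_yes if node.question(alert) else node.if_no
    return node


# Hypothetical triage playbook for a suspicious-login alert.
playbook = Step(
    question=lambda a: a["known_device"],
    if_yes="resolve",
    if_no=Step(
        question=lambda a: a["mfa_passed"],
        if_yes="resolve",
        if_no="escalate",
    ),
)

# Two hypothetical sources with events out of order relative to each other.
idp = [Event(datetime(2024, 1, 5, 9, 2, tzinfo=timezone.utc), "identity", "login from new device")]
edr = [Event(datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc), "endpoint", "powershell spawned")]

timeline = build_timeline(idp, edr)
print([e.source for e in timeline])  # ['endpoint', 'identity']
print(run_playbook(playbook, {"known_device": False, "mfa_passed": False}))  # escalate
```

Real playbooks branch on far messier evidence than two booleans, but the shape is the same: each question narrows the path until the analyst either closes the alert or hands it up the chain, which is exactly the repetitive work that automation could take over.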

Although this is still fairly new and training data is currently lacking, I believe AI can truly disrupt every element of the SOC, giving analysts 10x more data while saving them 10x more time than today’s tools do. It will take a lot of corporate data consolidation, privacy boundaries, and processing power to put it together. We can already see the industry taking the first steps in this direction. Cisco is buying Splunk, Google bought Mandiant, and Microsoft bought RiskIQ, CloudKnox, and Miburo. Amazon and Meta are investing internally to refine their own tools.

It is hard to say where we will be in 10 years, but I picture very different vulnerability management programs and SOCs, areas that I believe are underrepresented in the broader AI conversation. Even though security is a small sliver of the AI wave, I am hopeful we will see big changes in these areas.

https://www.securityweek.com/narrowing-the-focus-of-ai-in-security/