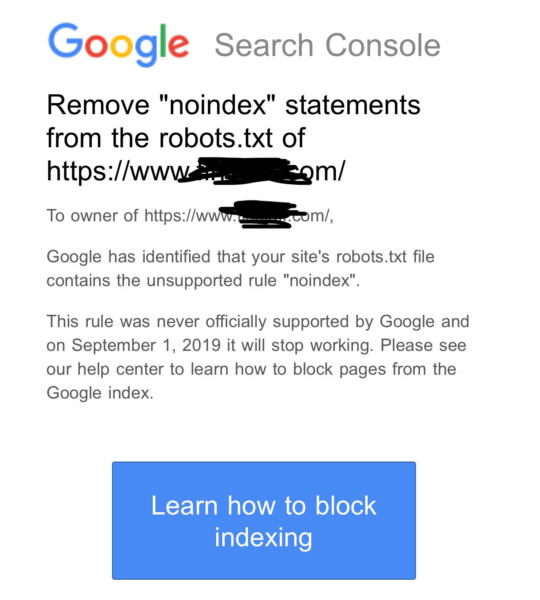

As part of fully removing support for the noindex directive in robots.txt files, Google is now notifying site owners whose robots.txt files still contain such directives. This morning, many in the SEO community started receiving Google Search Console notifications with the subject line “Remove ‘noindex’ statements from the robots.txt of…”

What it looks like. Many screenshots of this notice have been shared on social media; here is one from Bill Hartzer on Twitter:

September 1, 2019. That is the date by which you need to stop relying on a noindex rule in your robots.txt file. Google announced this change earlier this month and is now sending messages to help spread the word.

Why we should care. If you receive this notice, make sure that whatever you were blocking with the noindex directive is handled in a supported way instead. The most important thing is to stop using the noindex directive in your robots.txt file. If you are using it, switch to one of the alternatives listed below before September 1. Also check whether your robots.txt uses the nofollow or crawl-delay rules and, if so, move to an officially supported method for those directives going forward.
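If you want to check a robots.txt file for the rules mentioned above, here is a minimal sketch in Python that scans the file's text for noindex, nofollow and crawl-delay lines. The function name `find_unsupported_rules` is hypothetical, and it does simple line-by-line matching only; it is not Google's robots.txt parser.

```python
from __future__ import annotations

# robots.txt rules Google does not support (noindex support ends September 1, 2019)
UNSUPPORTED_RULES = {"noindex", "nofollow", "crawl-delay"}


def find_unsupported_rules(robots_txt: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for each unsupported rule in robots.txt text."""
    hits = []
    for number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        # Drop comments and surrounding whitespace before looking at the field name
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field in UNSUPPORTED_RULES:
            hits.append((number, field))
    return hits


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: /tmp/\nNoindex: /old-page.html\nCrawl-delay: 10\n"
    for number, field in find_unsupported_rules(sample):
        print(f"line {number}: {field}")
```

For the sample file, this prints `line 3: noindex` and `line 4: crawl-delay`. Field names are compared case-insensitively because robots.txt files in the wild mix `Noindex`, `noindex` and `NOINDEX`.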

What are the alternatives? Google listed the following options, which you probably should have been using anyway:

(1) Noindex in robots meta tags: Supported both in the HTTP response headers and in HTML, the noindex directive is the most effective way to remove URLs from the index when crawling is allowed.

(2) 404 and 410 HTTP status codes: Both status codes mean that the page does not exist, which will drop such URLs from Google’s index once they’re crawled and processed.

(3) Password protection: Unless markup is used to indicate subscription or paywalled content, hiding a page behind a login will generally remove it from Google’s index.

(4) Disallow in robots.txt: Search engines can only index pages that they know about, so blocking the page from being crawled often means its content won’t be indexed. While the search engine may also index a URL based on links from other pages, without seeing the content itself, we aim to make such pages less visible in the future.

(5) Search Console Remove URL tool: The tool is a quick and easy method to remove a URL temporarily from Google’s search results.
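Options (1) and (2) are both server-side responses, so they can be illustrated together. Here is a minimal sketch of a Python WSGI app that adds an `X-Robots-Tag: noindex` header to pages under one path prefix, answers deliberately removed URLs with 410 and unknown URLs with 404. All paths and page contents are hypothetical. For HTML pages, the in-page equivalent of the header is `<meta name="robots" content="noindex">` inside `<head>`; the header form also works for non-HTML files such as PDFs.

```python
# Hypothetical site layout, for illustration only
LIVE_PAGES = {
    "/": b"<!doctype html><title>Home</title><p>Welcome</p>",
    "/internal/report.html": b"<!doctype html><title>Report</title><p>Staff only</p>",
}
NOINDEX_PREFIXES = ("/internal/",)  # crawlable, but kept out of the index
REMOVED_PATHS = {"/old-campaign.html"}  # pages that were deliberately deleted


def application(environ, start_response):
    """WSGI app: option (1) via X-Robots-Tag, option (2) via 410/404 status codes."""
    path = environ.get("PATH_INFO", "/")
    if path in LIVE_PAGES:
        status, body = "200 OK", LIVE_PAGES[path]
        headers = [("Content-Type", "text/html; charset=utf-8")]
        if path.startswith(NOINDEX_PREFIXES):
            # Header form of the noindex directive
            headers.append(("X-Robots-Tag", "noindex"))
    elif path in REMOVED_PATHS:
        # 410 Gone: the page existed and has been removed on purpose
        status, body = "410 Gone", b"This page has been removed."
        headers = [("Content-Type", "text/plain; charset=utf-8")]
    else:
        # 404 Not Found: no such page
        status, body = "404 Not Found", b"Page not found."
        headers = [("Content-Type", "text/plain; charset=utf-8")]
    headers.append(("Content-Length", str(len(body))))
    start_response(status, headers)
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, application).serve_forever()` serves it on port 8000. Googlebot has to be able to crawl the `/internal/` pages to see the header, so those URLs should not also be disallowed in robots.txt.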
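For option (4), a Disallow rule takes the place of the old Noindex line. Python's standard `urllib.robotparser` module can show which URLs a rule blocks from crawling. This is a minimal sketch, assuming a hypothetical robots.txt for `www.example.com`; the helper name `is_crawl_allowed` is also hypothetical. Python's parser does simple prefix matching and may not handle every pattern (for example wildcards) the way Googlebot does, so treat it as a rough check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block a directory with Disallow instead of "Noindex:"
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt text lets `user_agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://www.example.com/private/report.html",
                "https://www.example.com/blog/post.html"):
        print(url, is_crawl_allowed(ROBOTS_TXT, url))
```

The first URL is reported as blocked and the second as allowed. As the Google text above notes, blocking crawling does not guarantee a URL stays out of the index if other pages link to it.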
