“We found that the tools were deficient in trying to represent who we are as an agency,” said Lerma.

To rectify these issues, Lerma tried editing the text further in Midjourney for the music video, adding details such as location, visual composition, atmosphere, inclusion of the camera, and body language. As a result, the images took on a distorted and unnatural appearance, with some showing humans with missing body parts.

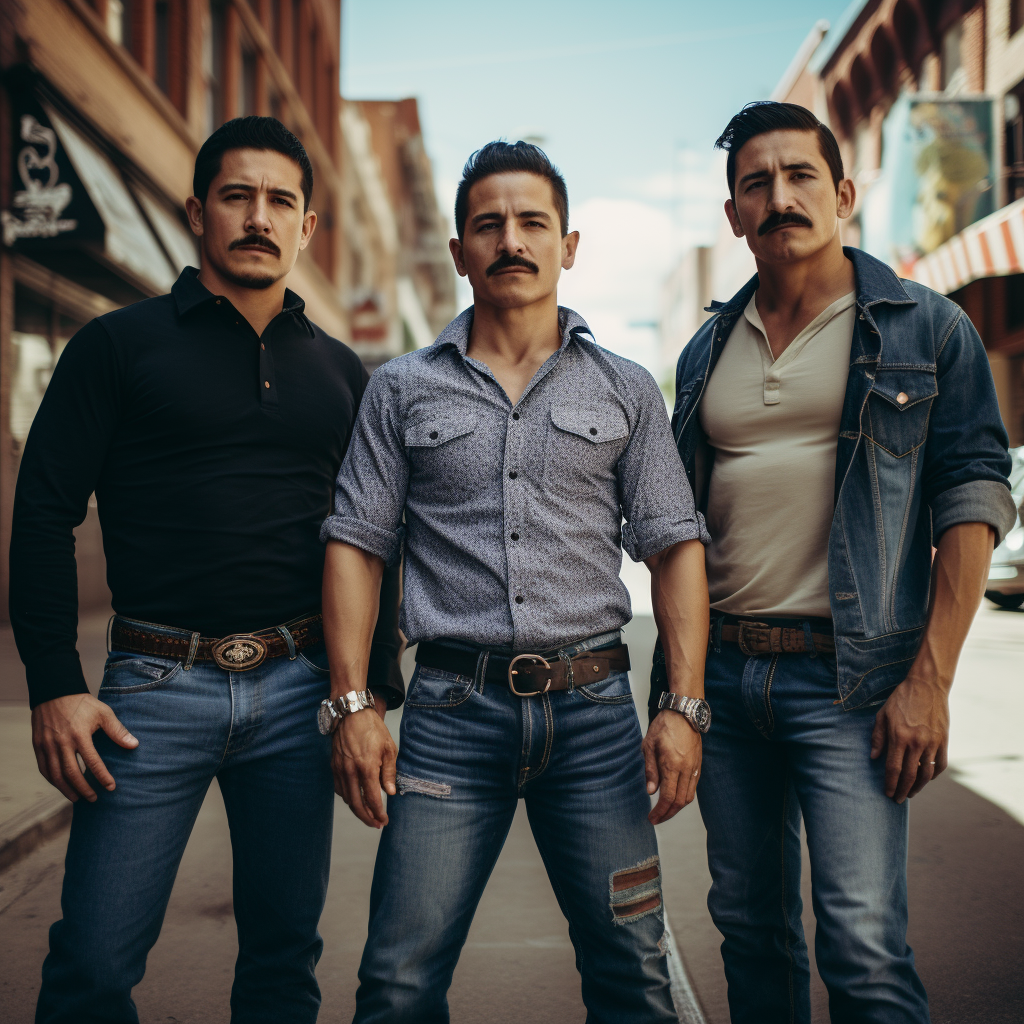

In another case, in response to the prompt “three Hispanic males standing in the middle of a street,” the AI tool generated an image of three people who closely resembled one another, lacking the diversity of features within specific groups that the agency had intended to represent.

“It’s important for [AI] platforms to consider looking at consultants, such as marketing planners or even anthropologists, to provide input onto these tools to try and avoid those biases from happening,” said Alma’s Sotelo.

Addressing these biases

Lerma has instead built and trained its own open-source AI model, LERM@NOS, released on the Hugging Face platform, which helps the agency’s creative teams deliver client-facing work such as storyboard compositions without running into biased results.

The agency ran a photoshoot involving its diverse employees, capturing them from various angles and under different lighting setups. In total, the agency generated a dataset of nearly 18,000 variations of facial features, which served as the training data for LERM@NOS.