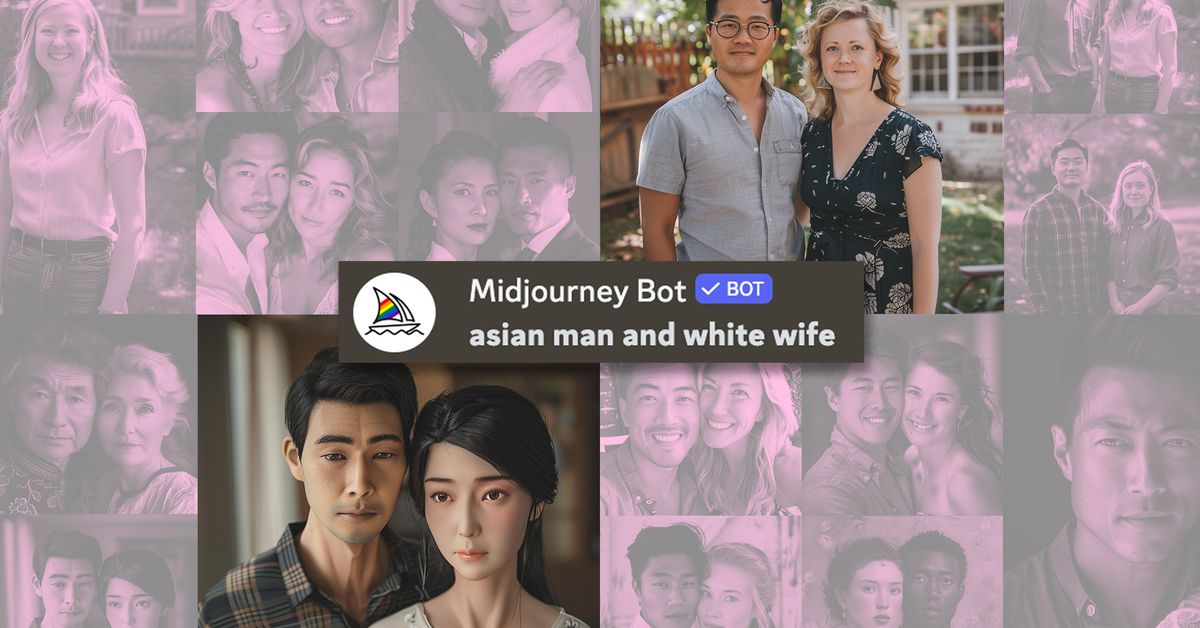

I inadvertently found myself on the AI-generated Asian people beat this past week. Last Wednesday, I found that Meta’s AI image generator built into Instagram messaging completely failed at creating an image of an Asian man and white woman using general prompts. Instead, it changed the woman’s race to Asian every time.

The next day, I tried the same prompts again and found that Meta appeared to have blocked prompts with keywords like “Asian man” or “African American man.” Shortly after I asked Meta about it, images were available again — but still with the race-swapping problem from the day before.

I understand if you’re a little sick of reading my articles about this phenomenon. Writing three stories about this might be a little excessive; I don’t particularly enjoy having dozens and dozens of screenshots on my phone of synthetic Asian people.

But there is something weird going on here, where several AI image generators specifically struggle with the combination of Asian men and white women. Is it the most important news of the day? Not by a long shot. But the same companies telling the public that “AI is enabling new forms of connection and expression” should also be willing to offer an explanation when their systems are unable to handle queries for an entire race of people.

After each of the stories, readers shared their own results using similar prompts with other models. I wasn’t alone in my experience: people reported getting similar error messages or seeing AI models consistently swap races.

I teamed up with The Verge’s Emilia David to generate some AI Asians across multiple platforms. The results can only be described as consistently inconsistent.

Gemini refused to generate Asian men, white women, or humans of any kind.

In late February, Google paused Gemini’s ability to generate images of people after its generator — in what appeared to be a misguided attempt at diverse representation in media — spat out images of racially diverse Nazis. Gemini’s image generation of people was supposed to return in March, but it is apparently still offline.

Gemini is able to generate images without people, however!

Screenshot: Emilia David / The Verge

Google did not respond to a request for comment.

ChatGPT’s DALL-E 3 struggled with the prompt “Can you make me a photo of an Asian man and a white woman?” It wasn’t exactly a miss, but it didn’t quite nail it, either. Sure, race is a social construct, but let’s just say this image isn’t what you thought you were going to get, is it?