Researchers at Wiz have flagged another major security misstep at Microsoft, one that exposed 38 terabytes of private data during a routine update of open-source AI training material on GitHub.

The exposed data includes a disk backup of two employees’ workstations, corporate secrets, private keys, passwords, and over 30,000 internal Microsoft Teams messages, Wiz said in a note documenting the discovery.

Wiz, a cloud data security startup founded by ex-Microsoft software engineers, said the issue was discovered during routine internet scans for misconfigured storage containers. “We found a GitHub repository under the Microsoft organization named robust-models-transfer. The repository belongs to Microsoft’s AI research division, and its purpose is to provide open-source code and AI models for image recognition,” the company explained.

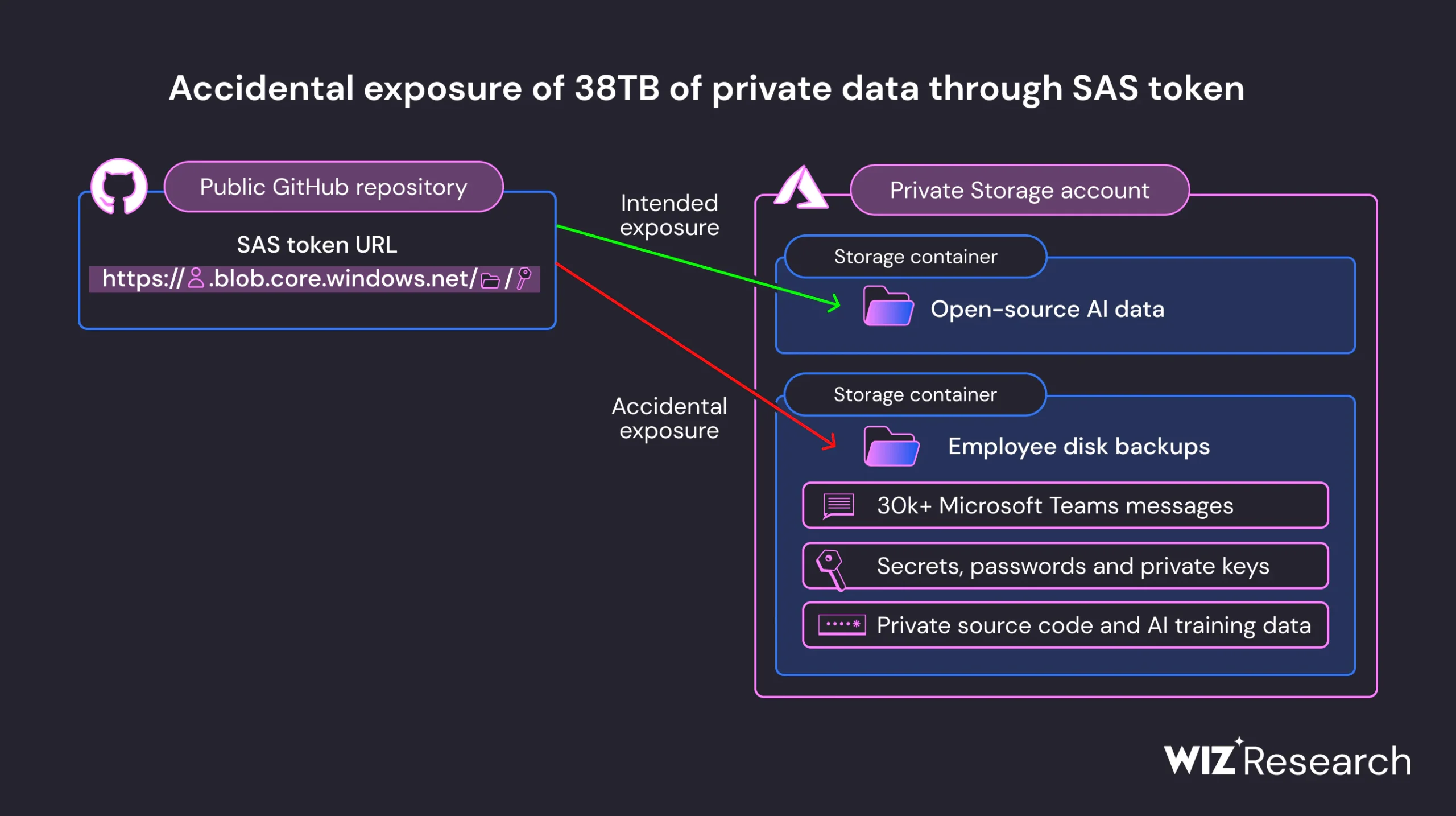

To share the files, Microsoft used an Azure feature called SAS tokens, which allows data sharing from Azure Storage accounts. Although access can be limited to specific files, Wiz found that the link was configured to share the entire storage account, including another 38TB of private files.

“This URL allowed access to more than just open-source models. It was configured to grant permissions on the entire storage account, exposing additional private data by mistake,” Wiz noted.

“Our scan shows that this account contained 38TB of additional data — including Microsoft employees’ personal computer backups. The backups contained sensitive personal data, including passwords to Microsoft services, secret keys, and over 30,000 internal Microsoft Teams messages from 359 Microsoft employees,” it added.

In addition to what it describes as overly permissive access scope, Wiz found that the token was also misconfigured to allow “full control” permissions instead of read-only, giving attackers the power to delete and overwrite existing files.

“An attacker could have injected malicious code into all the AI models in this storage account, and every user who trusts Microsoft’s GitHub repository would’ve been infected by it,” Wiz warned.
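The two problems Wiz describes, scope and permissions, are both visible in the query string of a SAS URL. The following is a minimal sketch of how such a link might be checked before it is published, not a tool used by Wiz or Microsoft. The function name `audit_sas_url` and the example URLs are hypothetical; the sketch assumes the standard SAS query fields `srt` (present on account-level tokens), `sr` (signed resource on service-level tokens) and `sp` (signed permissions), and it treats any permission letter other than `r` (read) and `l` (list) as more than read-only.

```python
from urllib.parse import urlparse, parse_qs


def audit_sas_url(url):
    """Return a list of warnings for a shared access signature (SAS) URL."""
    # Flatten the query string into {name: first value}.
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    warnings = []

    # An account SAS carries `srt`; a service SAS carries `sr`,
    # where "b" means the token is scoped to a single blob.
    if "srt" in params:
        warnings.append("account-level token: scope is the whole storage account")
    elif params.get("sr") != "b":
        warnings.append("scope is broader than a single blob")

    # `sp` is a string of permission letters; anything beyond
    # read/list is flagged here as more than read-only.
    extra = sorted(set(params.get("sp", "")) - set("rl"))
    if extra:
        warnings.append("more than read-only permissions: " + "".join(extra))

    return warnings


# Hypothetical URLs for illustration only; the signatures are placeholders.
broad = ("https://example.blob.core.windows.net/models"
         "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlacx&sig=REDACTED")
narrow = ("https://example.blob.core.windows.net/models/model.ckpt"
          "?sv=2020-08-04&sr=b&sp=r&sig=REDACTED")

for link in (broad, narrow):
    print(audit_sas_url(link))
```

In this sketch the first URL triggers both warnings, while the second, scoped to one blob with read-only permission, triggers none.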

The repository’s primary function compounds the security concerns. It exists to supply AI models, and those models are distributed in the ‘ckpt’ format, which is produced by the widely used TensorFlow library and serialized with Python’s pickle formatter. Wiz notes that the format is prone to arbitrary code execution by design.
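The risk with pickle can be shown with Python’s standard library alone. The sketch below is not the repository’s model-loading code, and the `Payload`, `NoGlobalsUnpickler` and `safe_loads` names are hypothetical. It shows that loading a pickle can call a function chosen by whoever wrote the file (a harmless `len` here, where an attacker would pick something destructive), and one common mitigation: an unpickler that refuses to resolve any global name.

```python
import io
import pickle


class Payload:
    """Stand-in for a tampered model file; the 'attack' is a harmless call."""

    def __reduce__(self):
        # On load, pickle calls the returned callable with these arguments.
        return (len, ("code ran during unpickling",))


class NoGlobalsUnpickler(pickle.Unpickler):
    """Refuses to look up any global, so no callable can be pulled in on load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data):
    """Load pickled data made only of plain containers and scalars."""
    return NoGlobalsUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(Payload())

# A plain load runs the embedded call and returns its result,
# not a Payload instance.
print(pickle.loads(blob))

# The restricted loader rejects the same bytes.
try:
    safe_loads(blob)
except pickle.UnpicklingError as err:
    print("rejected:", err)

# Plain data such as dicts, lists and floats still loads.
print(safe_loads(pickle.dumps({"weights": [1.0, 2.0]})))
```

Blocking every global is deliberately strict and would also reject most real model checkpoints, which is why formats that store only raw tensors are often preferred for sharing models.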

According to Wiz, Microsoft’s security response team invalidated the SAS token within two days of initial disclosure in June this year. The token was replaced on GitHub a month later.

Microsoft has published its own blog post to explain how the data leak occurred and how such incidents can be prevented.

“No customer data was exposed, and no other internal services were put at risk because of this issue. No customer action is required in response to this issue,” the tech giant noted.

*updated with link to Microsoft’s blog post

https://www.securityweek.com/microsoft-ai-researchers-expose-38tb-of-data-including-keys-passwords-and-internal-messages/