The world’s largest software maker is putting ChatGPT to work in the cybersecurity trenches.

Microsoft on Wednesday rolled out an AI-powered security analysis tool to automate incident response and threat hunting tasks, showcasing a security use-case for the popular chatbot developed by OpenAI.

The new tool, called Microsoft Security Copilot, is powered by OpenAI’s newest GPT-4 model and will be trained on data from Redmond’s massive trove of telemetry signals from enterprise deployments and Windows endpoints.

Cybersecurity experts are already using generative AI chatbots to simplify and enhance software development, reverse engineering and malware analysis tasks, and Microsoft’s latest move adds several new use-cases for defenders.

Microsoft is already raking in about $20 billion a year from the sale of cybersecurity protection products, and industry watchers expect the push into AI automation to create new revenue streams and drive new levels of innovation among cybersecurity startups.

SecurityWeek sources expect to see similar offerings from the likes of Cisco, Palo Alto Networks and Google as rivals rush to embrace the use of generative AI to automate complex and time-consuming security tasks.

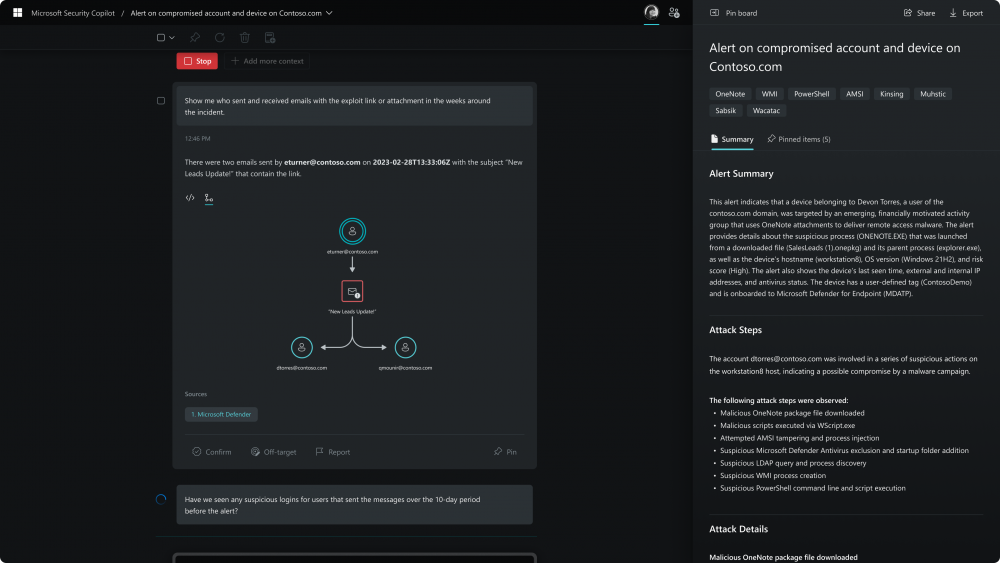

Microsoft is positioning the Security Copilot chatbot as a tool that works seamlessly with security teams to allow defenders to see what is happening in their environment, learn from existing intelligence, correlate threat activity, and make better decisions at machine speed.

From Microsoft’s documentation:

“Security Copilot will simplify complexity and amplify the capabilities of security teams by summarizing and making sense of threat intelligence, helping defenders see through the noise of web traffic and identify malicious activity.

It will also help security teams catch what others miss by correlating and summarizing data on attacks, prioritizing incidents and recommending the best course of action to swiftly remediate diverse threats, in time.”

For incident response teams, Microsoft says the chatbot can be used to identify an ongoing attack, assess its scale, and get instructions to begin remediation based on proven tactics from real-world security incidents.

For threat hunting practitioners, the company says Security Copilot can help determine whether an organization is susceptible to known vulnerabilities and exploits by using AI to examine the environment one asset at a time for evidence of a breach.

The tool can also be used to summarize any event, incident, or threat in minutes and prepare information in a ready-to-share, customizable report.

The company said the tool will integrate natively with products like Microsoft Sentinel, Microsoft Defender and Microsoft Intune to provide an “end-to-end experience across their entire security program.”


https://www.securityweek.com/microsoft-puts-chatgpt-to-work-on-automating-cybersecurity/