Welcome back to our discussion about platforms and democracy! I had a great time meeting Interface readers last week at the Techonomy conference in Half Moon Bay and the Conference on New Media and Democracy at Tufts University. I also made great progress on a special report I plan to have for you here before the end of the year. But enough prelude — on with today’s update.

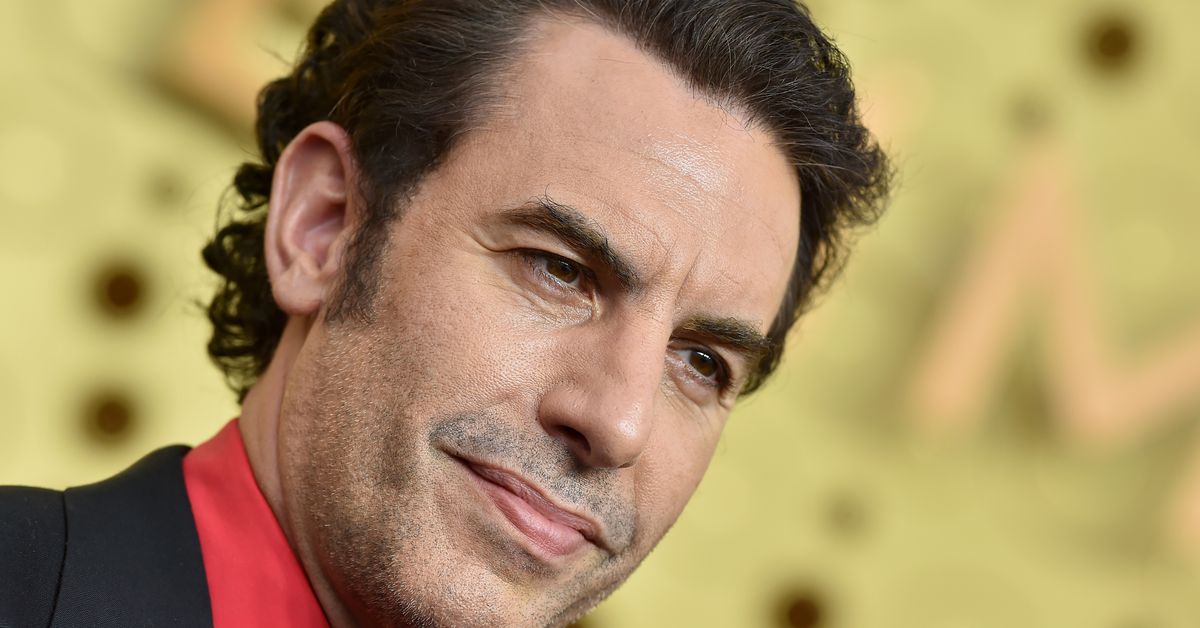

“I’m one of the last people you’d expect to hear warning about the danger of conspiracies and lies,” the actor and comedian Sacha Baron Cohen said today in an op-ed in the Washington Post, adapted from last week’s viral speech about the dangers of social networks at an Anti-Defamation League conference.

In fact, Baron Cohen is exactly the sort of person I’d expect to be warning us about social networks. He is a rich celebrity who has no need for the free communication tools they provide and who can thrive without the promotional benefits that come with active use of the platforms, so blasting Big Tech costs him nothing.

Meanwhile, few people would have ever even heard of Baron Cohen’s speech had it not thrived on social media — first on Twitter, then on YouTube — where social media critiques, particularly of Facebook, have grown increasingly popular. In coming to bury the big platforms, Baron Cohen inadvertently proved their benefit: providing a wide lane for an outsider — in this case, a comedian with no previous experience as a tech pundit — to come in and start a worthwhile discussion.

To be sure, Baron Cohen raises some valuable points — and he does so with more nuance and detail than the average “Zuck sucks” Twitter egg in my mentions. (Note the way he cites academic research in his links — a welcome touch.) Baron Cohen is right, for example, about the unique danger of algorithmic recommendations on social platforms — the way they give fringe viewpoints unearned reach, and recruit followers for violent ideologies, most prominently on the far right:

> The algorithms these platforms depend on deliberately amplify content that keeps users engaged — stories that appeal to our baser instincts and trigger outrage and fear. That’s why fake news outperforms real news on social media; studies show lies spread faster than truth.

> On the Internet, everything can appear equally legitimate. Breitbart resembles the BBC, and the rantings of a lunatic seem as credible as the findings of a Nobel Prize winner. We have lost a shared sense of the basic facts upon which democracy depends.

Baron Cohen also picks up on an issue we have discussed here quite often in recent months: the fact that large technology companies, thanks to a combination of ignorance and inattention from our elected officials, are essentially accountable to no one, even as their products have unleashed dangerous, rippling butterfly effects the world over:

> These super-rich “Silicon Six” care more about boosting their share price than about protecting democracy. This is ideological imperialism — six unelected individuals in Silicon Valley imposing their vision on the rest of the world, unaccountable to any government and acting like they’re above the reach of law. Surely, instead of letting the Silicon Six decide the fate of the world order, our democratically elected representatives should have at least some say.

Amen.

Unfortunately, Baron Cohen’s proposed solution for making tech platforms accountable is to amend Section 230 of the Communications Decency Act to make Facebook and other sites legally liable for what their users post. He approvingly cites the passage last year of FOSTA-SESTA, an act nominally intended to reduce sex trafficking that was really about scrubbing sexual content off the internet. By all accounts, it has done almost nothing to reduce sex trafficking. Instead, it has forced sex workers to once again rely on abusive pimps and put themselves into unnecessary danger.

Websites responded to the passage of FOSTA-SESTA by overcorrecting. Once Craigslist could be found legally liable for unwittingly hosting an ad that enabled sex trafficking, it removed all personals from its service altogether. Reddit removed several communities associated with sex work. Several sites that allowed sex workers to vet potential clients shut down entirely.

It seems likely that amending Section 230 to introduce what scholars call “intermediary liability” for Facebook et al. would play out in much the same way, with platforms over-moderating and censoring speech. In an environment in which democracy is in retreat around the world, and the internet is increasingly controlled by far-right authoritarian governments, the prospect of surging censorship in our communications tools sends a chill down my spine. How will Baron Cohen feel when a government orders the takedown of one of his satires across the entire internet? If Section 230 disappears, and other countries adopt similar measures, I can’t imagine a likelier target.

Moreover, it’s Section 230 that enables platforms to be more aggressive in pulling down hate speech and abusive content — the outcome that Baron Cohen argues for most passionately in his speech. His argument to eliminate Section 230 protections glosses right over this point, likely because Baron Cohen misunderstands what Section 230 actually does. (See also Mike Masnick on this point.)

Those qualms aside, what has stayed with me most about Baron Cohen’s speech is the way it captures the new conventional wisdom among left-leaning critics: that Facebook disproportionately benefits the right wing. (Plenty of conservatives believe the exact opposite, of course.)

The thought that Facebook empowers the far right is not exactly new. Anxiety that Facebook had become a handmaiden to the conservative movement lay at the root of Cambridge Analytica blowing up into a global scandal in 2018, even though most of the details had been known for two years. (We knew that Facebook was sharing our data with third parties. What most of us didn’t know was that third parties were using that data as part of sophisticated, micro-targeted political influence campaigns.)

But there is new evidence of Facebook’s material support of the right wing. In the Wall Street Journal this weekend, Deepa Seetharaman profiled James Barnes, whom Facebook once embedded in the Trump campaign to help officials there use the company’s advertising platform. Barnes, who, like a growing number of former Facebook employees, experienced a crisis of conscience over the work he did there, revealed that the company had made unusual arrangements to ensure Trump could buy the maximum number of ads.

The profile lays out the extraordinary amount of assistance that Facebook lent Trump. In theory, the same assistance was available to Hillary Clinton, but she declined it. Barnes hand-coded custom advertising tools for Trump, ran split tests on advertising copy to see which would be most effective, and offered troubleshooting help whenever asked during what he describes as 12-hour days working on the campaign. He also ensured the campaign could access a larger line of credit than Facebook had ever previously extended:

> The Trump campaign needed a large credit line from Facebook, according to Mr. Barnes and others familiar with the situation. This issue posed special challenges. Facebook sometimes extends credit to a select group of digital agencies, but Mr. Parscale’s outfit didn’t qualify for a large line because it didn’t have a track record with Facebook, according to people familiar with the matter. The Trump team also wanted to pay for ads with a credit card, but Facebook’s transactions system wasn’t set up to handle payments of as much as $300,000 to $400,000 a day on a credit card, according to Mr. Barnes and others familiar with the matter.

> As employees looked for ways to address the problem, Mr. Parscale texted Mr. Barnes to say Mr. Trump would go on TV and “say Facebook was being unfair to him” if the issue wasn’t resolved quickly, Mr. Barnes said. Eventually, Facebook came up with a fix.

Of course, it’s possible that Facebook bent over backwards for Trump for simple reasons of self-preservation. In an environment where regulation seems increasingly likely, a corporation will naturally seek to make nice with any potential nemesis.

Still, the scope of Facebook’s aid to the Trump campaign is surprising. And it gives credence to one of the arguments made forcefully by Baron Cohen: that social networks have been far more successful at empowering dangerous reactionaries than at empowering more progressive forces.

In most ways, 2019 was a stellar year for Facebook’s business. (Alex Heath has a nice [paywalled!] overview here.) But it was a bad year for Facebook’s reputation. And until the company is held accountable for the misuses of its products in some meaningful way, it’s hard to see how that will improve. I only hope that when regulation comes, policymakers come up with better solutions than Baron Cohen did.

The Ratio

Today in news that could affect public perception of the big tech platforms.

Trending up: Google changed its political ad policy last week to limit the ways in which politicians can target potential voters. The move puts more pressure on Facebook, which has yet to budge on its policy to allow misinformation in political ads.

Trending down: White nationalists are openly operating on Facebook, and the company hasn’t yet removed their accounts. Facebook promised to ban white nationalists in March 2019, but several groups remain active today, as this report illustrates.

Trending down: Facebook and Twitter say hundreds of users may have had their data improperly accessed after using their accounts to log into apps like Giant Square and Photofy.

Trending down: YouTube has a policy of flagging state-sponsored media channels, but enforcement has been lax. The company allowed at least 57 channels funded by governments in Iran, Russia, China, Turkey and Qatar to operate without the required labels.

Governing

⭐ Singapore invoked its “fake news” law for the first time Monday, forcing a citizen to amend his Facebook post, which the government said used “false and misleading statements” to smear reputations. Adam Taylor from The Washington Post explains:

> The move is likely to cause consternation among rights groups, which have already argued that anti-misinformation laws run the risk of hindering freedom of speech.

> Singapore’s “fake news” law, officially the Protection from Online Falsehoods and Manipulation Act (POFMA), is one of the world’s most far-reaching of anti-misinformation laws over the past few years, and it has sparked imitators.

> On Monday, British-born Brad Bowyer, a member of the Progress Singapore Party (PSP), was asked to retract statements he had made that implied the Singaporean government influenced investments made by GIC and Temasek, two state investors that he said had made poor financial moves.

Facebook has scrapped its 2016 pitch to political advertisers, which emphasized its ability to reach and persuade voters with targeted messaging. Now, the company is emphasizing its ability to prevent voter suppression and stop fake accounts from being created. (Alex Kantrowitz / BuzzFeed)

Facebook’s former chief marketing officer will oversee what could be an enormous ad campaign on Facebook for Michael Bloomberg, the latest Democratic presidential candidate. (Theodore Schleifer / Recode)

Facebook said a network of anonymous pages that spread misinformation and pro-Trump content doesn’t violate its rules. The pages represent themselves as grassroots Trump supporters based in different states, but they’re working in coordination, according to this report. (Craig Silverman and Jane Lytvynenko / BuzzFeed)

Joel Kaplan, Facebook’s head of public policy, “helped quarterback” Brett Kavanaugh’s nomination for the Supreme Court, a new book reveals. Kaplan’s friendship with Kavanaugh has been known for some time, but the book documents the lengths to which the executive went to get Kavanaugh’s nomination approved. (Maxwell Tani and Andrew Kirell / The Daily Beast)

Authorities have arrested an individual who is allegedly part of The Chuckling Squad, a hacker group that compromised Jack Dorsey’s Twitter account in August. The individual’s identity is being kept under wraps because they’re a minor. (Joseph Cox / Vice)

National security officials expressed astonishment that Rudy Giuliani, Trump’s personal attorney and the former mayor of New York, was running an “irregular channel” of diplomacy with Ukraine over open cell lines and messaging apps penetrated by the Russians. (David E. Sanger / The New York Times)

A leaked excerpt of TikTok’s content moderation policies shows moderators suppress political content. While they aren’t told to take political and protest videos down, they are told to stop them from going viral. The company has vehemently denied censoring political content outside of China. (Angela Chen / MIT Technology Review)

WeChat banned Chinese Americans for talking about the Hong Kong protests. The company, owned by Chinese tech giant Tencent, often censors people in China, but its strict content policies appear to be spreading overseas. (Zoe Schiffer / The Verge)

The Chinese tech giant Huawei filed three defamation suits in France over claims that it’s controlled by the Chinese government. The comments were made on TV by a French researcher, a broadcast journalist and a telecommunications sector expert. (Helene Fouquet / Bloomberg)

Protests in Hong Kong, Lebanon and Iran have been complicating the idea that cryptocurrencies are resistant to censorship. Many people say they aren’t able to transact once their internet connections are disrupted — an increasingly frequent occurrence. (Leigh Cuen / Coindesk)

Google wants to do business with the military, but many of its employees don’t. They’re saying the company has drifted from its old “don’t be evil” ethos, according to this deep dive. (Joshua Brustein and Mark Bergen / Bloomberg)

Google employees gathered outside the company’s San Francisco office on Friday to protest the company’s decision to put two staff members on leave. Employees are angry that the company has done too little about executives accused of sexual misconduct while moving quickly to silence employees who speak out. The company fired four people connected to the protests today, citing violations of internal data security practices. (Mark Bergen / Bloomberg)

Apple CEO Tim Cook said Congress should pass a federal privacy bill. “I think we can all admit that when you’ve tried to do something and companies haven’t self policed, that it’s time to have rigorous regulation,” he said. (Zoe Schiffer / The Verge)

Tim Berners-Lee, the inventor of the World Wide Web, says the internet is broken. Berners-Lee is advocating for a new “Contract for the Web” that would, among other things, give people more control over their data. Most of the big tech platforms have signed on as supporters. (Tim Berners-Lee / The New York Times)

Industry

⭐ A new investigation from The Verge shows freelancers working for the audio transcription platform Rev are often exposed to graphic content with no warning. The company has been in the news lately for slashing minimum pay for its transcribers — but many say that disturbing content is of equal or greater concern, Dani Deahl reports:

> Nearly every Revver who spoke with The Verge said they were exposed to graphic or troubling material on multiple occasions with no warning. This includes recordings of physical and verbal abuse between intimate partners, graphic descriptions of sexual assault, amateur porn, violent footage from police body cameras, a transphobic rant, and, in one instance, “a breast augmentation filmed by a physician’s cell phone, being performed on a patient who was under sedation.”

> It doesn’t bother everyone, but for some, it can be overwhelming. “I’ve finished more than one file in tears because listening to someone talk about being abused or assaulted is emotionally taxing, and frankly I have no training or expertise that really helps me cope with it,” one Revver tells The Verge.

Facebook once built a facial-recognition app that let employees identify people by pointing a phone at them. The app was not released publicly; it was used on company employees and their friends who opted in to the facial-recognition system. I am (1) disturbed by the privacy implications and (2) always wishing that something like this existed when I walk into a room and see a familiar face that I can’t place. Which happens about twice a week. (Rob Price / Business Insider)

Facebook launched a new market research app called Viewpoints. The move comes just a few months after the company introduced a controversial Android data collection app called Study, designed to monitor how long users are accessing other software. (Jay Peters / The Verge)

Facebook is building its own version of Instagram’s Close Friends. The feature will let users designate friends as Favorites, and then instantly send them their Facebook Stories or photos from Messenger. More evidence that public sharing in the Big Blue app is on the decline. (Josh Constine / TechCrunch)

Doctors are turning to YouTube to learn how to do surgical procedures, but there are no quality controls to ensure that instructions are legitimate or safe. Some experts are calling for better vetting and curation. (Christina Farr / CNBC)

A marketing campaign promoting tourism to the fictional island of Eroda is running sponsored ads on Twitter. It’s managed by the same people who run Harry Styles’ official homepage, though no one is saying why they’re pushing people to visit a place that doesn’t exist. (Andy Baio / Waxy)

And finally…

Cameo is a platform that lets people pay celebrities to record short video messages — custom birthday greetings, anniversary wishes, and that sort of thing. Well anyway, someone hired Sugar Ray frontman Mark McGrath to break up with their partner, and while this is easily among the worst ways to break up with someone, it makes for undeniably incredible viewing.

Katie Notopoulos offers some good reasons why the video might not be 100 percent above board, but still — I expect we’ll be seeing more of these.

Talk to us

Send us tips, comments, questions, and your best Borat impressions: casey@theverge.com and zoe@theverge.com.

https://www.theverge.com/interface/2019/11/26/20982078/sacha-baron-cohen-adl-speech-facebook-section-230