Future AI-generated phishing emails are likely to be more effective and damaging than the email-based attacks we are seeing today.

Since the arrival of ChatGPT, the media and security pundits have warned that phishing is now on steroids: more compelling and delivered at a vastly increased tempo. IBM’s X-Force Red wanted an objective assessment of this subjective assumption.

The method chosen was to test an AI-generated phishing email and a human-generated email against employees working for a healthcare firm. Sixteen hundred staff members were selected: 800 received the AI phish, while the other 800 received the human phish.

The outcome of the investigation is that AI can produce a phish considerably faster than humans (five minutes from five simple prompts, compared to 16 hours for the IBM human social engineers), but human social engineering is currently more effective than AI phishing.

Stephanie Carruthers, IBM’s Chief People Hacker at X-Force Red, puts human success down to three major factors: emotional intelligence, personalization, and a more succinct and effective headline. “Humans,” notes IBM’s report on the test, “understand emotions in ways that AI can only dream of. We can weave narratives that tug at the heartstrings and sound more realistic, making recipients more likely to click on a malicious link.”

In short, the current algorithmic recompilation of stored knowledge is not as compelling as an OSINT-driven human narrative.

But this is only half the story.

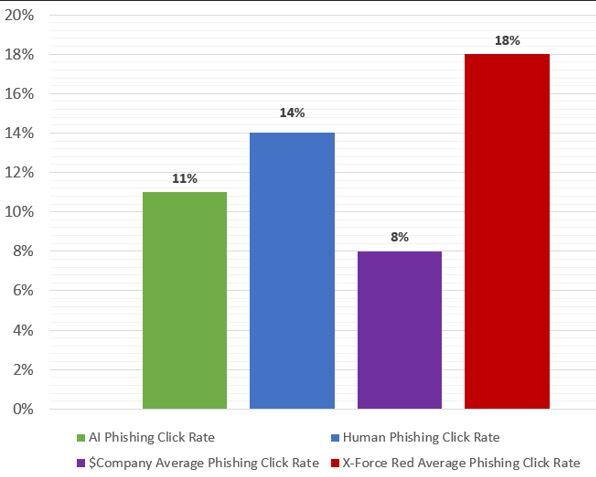

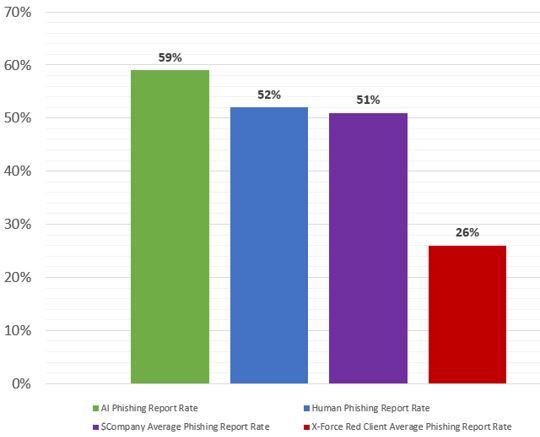

Firstly, the results were close. The human phish achieved a 14% click rate against 11% from the AI phish. Fifty-two percent of the human emails were reported as suspicious, against 59% of the AI emails.
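To put a rough number on "close", the click rates can be run through a standard two-proportion z-test. This is only a sketch under stated assumptions, not part of IBM's analysis: the click counts (112 and 88) are reconstructed from the rounded 14% and 11% figures, all 800 emails in each group are assumed to have been delivered, and the function name `two_proportion_z_test` is illustrative.

```python
import math

def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled click rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Assumed counts, reconstructed from the rounded percentages in the article.
human_clicks, human_sent = 112, 800  # 14% of 800
ai_clicks, ai_sent = 88, 800         # 11% of 800

z, p = two_proportion_z_test(human_clicks, human_sent, ai_clicks, ai_sent)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

Under these assumptions the test statistic comes out at roughly 1.8, with a two-sided p-value of about 0.07, which falls a little short of the conventional 0.05 threshold. That fits the description of the results as close, although the reconstructed counts mean the figure should be treated as indicative only.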

Secondly, AI is in its infancy while human social engineering has been honed over decades of experience. Two questions: could the AI have been used more efficiently (for example with different prompts; that is, better prompt engineering), and how much will AI improve over the next few years?

Carruthers is aware of these issues. “I spent hours creating the prompt engineering and figuring out which ones worked – and I can tell you the first ones I produced were garbage. A lot with AI is garbage in garbage out,” she told SecurityWeek. She is confident that these were the best prompts that could be achieved today. “I think I have very solid principles and techniques with what I was asking it to do… I am very happy with the results.”

One example explains her efforts. ChatGPT can be prompted to answer in different styles. Given the apparent lack of ‘emotional intelligence’, could the AI be instructed to respond with greater emotion? “The first responses I got were good, but felt just a bit robotic, a bit cold,” said Carruthers. She tried to inject warmth. “But the more I played with it the more like it just started to break – it just doubled down on the coldness, or it got really wacky. It was hard to find that balance.”

The second question is the big unknown – how much will AI improve over the next few years? This itself has two parts: how much will publicly available AI improve, and how much will criminal AI improve?

Gen-AI obtains its information from what it ingests. Public gen-AI must be careful here: it must avoid absorbing dangerous personal information that can then resurface in its responses. Criminal AI has no such concerns. So, while the primary source for public AI will be the surface web (with compliance guardrails), there are no such restrictions for criminal AI, which will most likely combine both the surface and the dark web as its data source, with no guardrails.

The potential for criminal AI to include and combine stolen personal data could lead to highly personalized spear-phishing. If this is combined with improved emotional intelligence, the result is likely to be very different to today’s IBM test.

This is subjective conjecture and is exactly what IBM was trying to avoid in its study. But given that ChatGPT can already achieve an 11% success rate in its phishing, it is not something we should completely ignore.

Carruthers’ own primary takeaway from her study admits as much. “If you had asked me before I started who I think would win, I would say humans, hands down. But the more I started prompt engineering, I started getting a little nervous and… these emails are getting better and better,” she told SecurityWeek.

“So, I think my biggest takeaway is to question what the future is going to look like. If we continue to improve gen-AI and make it sound more human, these phishing emails are going to be possibly devastating.”

https://www.securityweek.com/the-64k-question-how-does-ai-phishing-stack-up-against-human-social-engineers/