The human brain has evolved to instantly recognize images.

Visual identification is a natural ability made possible through a wonder of nerves, neurons, and synapses. We can look at a picture, and in 13 milliseconds or less, know exactly what we’re seeing.

But creating technology that can understand images as quickly and effectively as the human mind is a huge undertaking.

Visual search requires machine learning tools that can not only process images quickly, but also identify specific objects within an image and then generate visually similar results.

Yet thanks to the vast resources at the disposal of companies like Google, visual search is finally becoming viable. How, then, will SEO evolve as visual search develops?

Here’s a more interesting question: how soon until SEO companies have to master visual search optimization?

Visual search isn’t likely to replace text-based search engines altogether. For now, visual search is most useful in the world of sales and retail. However, the future of visual search could still disrupt the SEO industry as we know it.

What is visual search?

If you have more than partial vision, you’re able to look across a room and identify objects as you see them. For instance, at your desk you can identify your monitor, your keyboard, your pens, and the sandwich you forgot to put in the fridge.

Your mind is able to identify these objects based on visual cues alone. Visual search does the same thing, but with a given image on a computer. However, it’s important to note that visual search is not the same as image search.

Image search is when a user types a word into a search engine and the search engine returns related images. In this case, the search engine isn’t recognizing the images themselves, only the text and structured data associated with the image files.

Visual search uses an image as a query instead of text (reverse image search is a form of visual search). It identifies objects within the image and then searches for images related to those objects. For instance, based on an image of a desk, you’d be able to use visual search to shop for a desk identical or similar to the one in the image.
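To make the query-by-image idea more concrete, here is a minimal sketch of the final matching step only. It assumes an image model has already turned each catalog photo and the user’s photo into a numeric feature vector (an “embedding”). The vectors, product names, and the `find_similar` helper are all hypothetical; real systems use learned embeddings with hundreds of dimensions and approximate nearest-neighbor indexes rather than a linear scan.

```python
import math

# Hypothetical feature vectors; a real system would get these from an image model.
CATALOG = {
    "oak writing desk": [0.9, 0.1, 0.3],
    "pine writing desk": [0.8, 0.2, 0.35],
    "red sneaker": [0.1, 0.9, 0.2],
    "office chair": [0.5, 0.4, 0.7],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def find_similar(query_vector, catalog, top_k=2):
    """Rank catalog items by similarity to the query image's vector."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in catalog.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical embedding of a user's photo of a desk.
    photo_of_desk = [0.85, 0.15, 0.3]
    for name, score in find_similar(photo_of_desk, CATALOG):
        print(f"{name}: {score:.3f}")
```

With these made-up vectors, the two desks rank above the sneaker and the chair, which is the “shop for a desk similar to the one in the image” behavior described above.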

While this sounds incredible, the technology surrounding visual search is still limited at best. This is because machine learning must recreate the mind’s image processing before it can effectively produce a viable visual search application. It isn’t enough for the machine to identify an image. It must also be able to recognize a variety of colors, shapes, sizes, and patterns the way the human mind does.


However, it’s difficult to recreate image processing in a machine when we barely understand our own image processing system. It’s for this reason that visual search programming is progressing so slowly.

Visual search as it stands: Where we are

Engineers have been using machine learning, in particular neural networks, to improve the image processing behind visual search engines. One of the most recent examples of these developments is Google Lens.

Google Lens is an app that turns your smartphone into a visual search engine. Announced at Google’s 2017 I/O conference, it works by analyzing the pictures you take and giving you information about what’s in them.

For instance, if you take a photo of the Abbey Road album, your phone can tell you more about the Beatles and when the album came out. If you take a photo of an ice cream shop, your phone can tell you its name, show you reviews, and tell you whether your friends have been there.

All of this information comes from Google’s vast stores of data, algorithms, and knowledge graphs, which are then incorporated into the neural networks of the Lens product. However, the complexity of visual search involves more than just an understanding of neural networks.

The mind’s image processing involves more than identification; it also draws incredibly complex conclusions. Recreating that reasoning in a visual search engine is difficult, partly because of what’s known as the “black box problem”: engineers often can’t see how a neural network arrives at its conclusions.

Rather than waiting for scientists to fully understand the human mind, DeepMind, a Google-owned company, has been applying methods from cognitive psychology to study how the neural networks behind image recognition make their decisions.

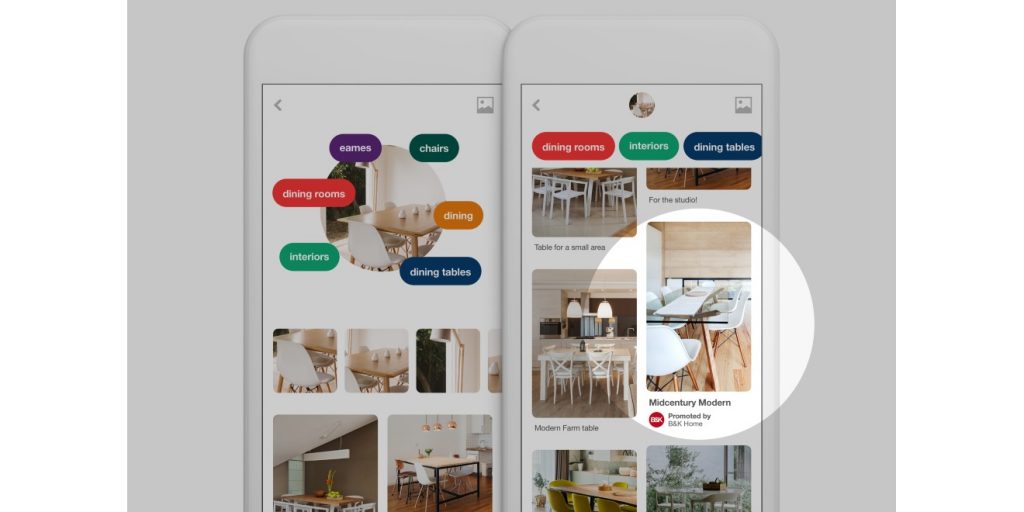

However, Google isn’t the only company developing visual search technology. Pinterest launched its own Lens product in March 2017, alongside features such as Shop the Look and Pincodes. Pinterest users can take a photo of a person or place in the app and have it analyzed for clothing or homeware to shop for.

What makes Pinterest Lens and Google Lens different is that Pinterest offers more versatile options for users. Google is a search engine for users to gather information. Pinterest is a website and app for shopping, recipes, design ideas, and recreational searching.

Unlike Google, which has to operate on multiple fronts, Pinterest is able to focus solely on the development of its visual search engine. As a result, Pinterest could very well become the leading contender in visual search technology.

Meanwhile, retailers are beginning to catch on and develop their own technology. Fashion retailer ASOS, for example, released a visual search tool in August 2017.

The use of visual search in retail helps reduce what’s been called the Discovery Problem. The Discovery Problem is when shoppers have so many options to choose from on a retailer’s website that they simply stop shopping. Visual search reduces the number of choices and helps shoppers find what they want more effectively.

The future of visual search: Where we’ll go from here

The near future of visual search is likely to be dominated by retail. For general information, it’s still easier to search with words.

Users don’t need to take a photo of the Abbey Road album to learn more about the Beatles when a few keystrokes typing ‘Abbey Road’ into a search engine will do. However, users do need to take a photo of a specific pair of sneakers to show a search engine exactly what they’re looking to buy.

Searching for a pair of red shoes using Pinterest Lens

As a result, visual search engines are convenient, but they aren’t essential to every industry’s success. Service businesses, for instance, are likely to keep relying on text-based search engines, whereas retailers may come to rely more on visual search engines.

That being said, with 69% of young consumers showing an interest in making purchases based on visual-oriented searches alone, the future of visual search engines is most likely to be a shopper’s paradise in the right retailer’s hands.

What visual search means for SEO

Search engines are already capable of indexing images and videos and ranking them accordingly. Video SEO and image SEO have been around for years, ever since video and image content became popular with websites like YouTube and Facebook.

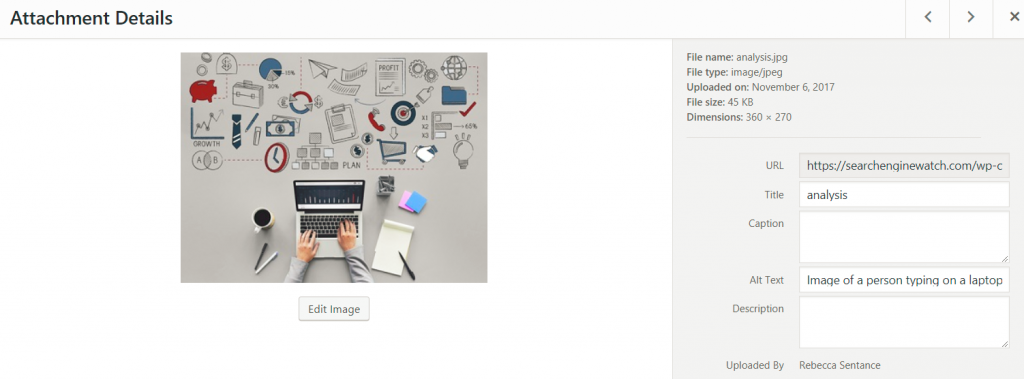

Yet despite this surge in video and image content, SEO still meets the needs of those looking to rank higher on search engines. Factors such as creating SEO-friendly alt text, image sitemaps, SEO-friendly image titles, and original image content can put your website’s images a step above the competition.

However, the see-snap-buy behavior of visual search can make image SEO more of a challenge. This is because the user no longer has to type, but can instead take a photo of a product and then search for the product on a retailer’s website.

Currently, SEO works alongside visual search through alt tags, image optimization, schema markup, and metadata. Schema markup and metadata are especially important for SEO in visual search: since visual search queries contain little or no text, this data may be one of the few sources of textual information for search engines to crawl.

Meticulously cataloging images with microdata may be tedious, but the enhanced description that microdata provides when paired with an optimized image should help that image rank higher in visual search.
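As an illustration of what cataloging an image with microdata can look like, the sketch below builds a schema.org `Product` snippet in which the product photo is tagged with `itemprop="image"`. The `itemscope`, `itemtype`, and `itemprop` attributes come from HTML microdata, and `name`, `image`, and `description` are schema.org `Product` properties; the `product_microdata` helper and the product details are hypothetical, and which properties a given search engine actually uses should be checked against its own documentation.

```python
from html import escape


def product_microdata(name, image_url, alt_text, description):
    """Return an HTML fragment marking up a product image with schema.org microdata.

    All values are HTML-escaped (including quotes) so they are safe inside
    element text and attribute values.
    """
    return (
        '<div itemscope itemtype="https://schema.org/Product">\n'
        f'  <span itemprop="name">{escape(name)}</span>\n'
        f'  <img itemprop="image" src="{escape(image_url)}" alt="{escape(alt_text)}">\n'
        f'  <p itemprop="description">{escape(description)}</p>\n'
        "</div>"
    )


if __name__ == "__main__":
    # Hypothetical product used only for illustration.
    print(product_microdata(
        name="Red canvas sneakers",
        image_url="https://example.com/images/red-canvas-sneakers.jpg",
        alt_text="Pair of red canvas low-top sneakers",
        description="Low-top canvas sneakers in bright red.",
    ))
```

Pairing descriptive alt text with the `image` property gives crawlers two textual descriptions of the same photo.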

Metadata is just as important. In both text-based and visual searches, metadata strengthens the marketer’s ability to drive online traffic to their website and products. Metadata sits in the HTML of web pages and in the image files themselves; visitors rarely see it, but it’s what search engines use to find relevant information.

Marking up your images with relevant metadata is essential for image SEO

For this reason, to optimize for image search, it’s essential to use metadata for your website’s images and not just the website itself.
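One practical way to confirm that a site’s images carry metadata, and not just its pages, is to audit the `<img>` tags in the HTML. The sketch below uses Python’s standard-library `html.parser` to list images whose `alt` attribute is missing or blank. The `ImageAltAuditor` class, the `images_missing_alt` helper, and the sample markup are hypothetical; a fuller audit would also look at file names, titles, captions, and structured data.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # HTMLParser reports a bare `alt` attribute as None. An empty alt is
        # valid for purely decorative images, but this audit flags it anyway
        # because it gives search engines no text to work with.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html_text):
    """Return the src values of images that lack useful alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(html_text)
    auditor.close()
    return auditor.missing_alt


if __name__ == "__main__":
    page = """
    <img src="/img/red-sneakers.jpg" alt="Red canvas sneakers">
    <img src="/img/IMG_0042.jpg">
    <img src="/img/banner.png" alt="">
    """
    print(images_missing_alt(page))
```

Self-closing tags such as `<img ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.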

Both microdata and metadata will continue to play an important role in the SEO industry even as visual search engines develop and revolutionize the online experience. However, other existing SEO techniques will need to evolve to keep pace with visual search.

The future of SEO and visual search

It would be short-sighted to assume that visual search engines won’t change the SEO industry. Yet it’s equally unlikely that text-based search will become obsolete and be replaced entirely by visual technology.

However, just because text-based search engines aren’t going anywhere doesn’t mean they won’t have to share the spotlight. As visual search engines develop and improve, they’ll likely become just as popular and widely used as text-based engines. For this reason, existing SEO techniques will need to be fine-tuned for the industry to remain up to date and relevant.

But how can SEO stay relevant as see-snap-buy behavior spreads beyond retail websites to most places online? As mentioned before, SEO companies can still use image-based SEO techniques to keep up with visual search engines.

Like text-based search engines, visual search relies on algorithms to match content for online users. The SEO industry can use this to its advantage and focus on structured data and optimization to make images easier to process for visual applications.

Additional techniques can help improve image indexing by visual search engines. Some of these techniques include:

- Adding the structured data behind image badges and validating it with structured data testing tools

- Writing alt attributes for images that include target keywords

- Submitting images to image sitemaps

- Optimizing images for mobile use
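Of the techniques above, submitting images to an image sitemap is the easiest to automate. The sketch below generates a sitemap that uses the standard sitemap namespace plus Google’s image extension (`image:image` containing `image:loc`). The `build_image_sitemap` helper and the URLs are hypothetical, and Google’s current image sitemap documentation should be checked before relying on any particular tag.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialize the sitemap namespace as the default and the image namespace as "image:".
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages):
    """Build an image sitemap.

    `pages` maps each page URL to the list of image URLs that appear on it.
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical product page with two photos.
    print(build_image_sitemap({
        "https://example.com/sneakers/red-canvas": [
            "https://example.com/images/red-canvas-front.jpg",
            "https://example.com/images/red-canvas-side.jpg",
        ],
    }))
```

Generating the sitemap from the same product data that drives the pages keeps image URLs from drifting out of sync with what is actually published.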

Visual search engines are bound to revolutionize the retail industry and the way we use technology. However, text-based search engines will continue to have an established place in industries that are better suited to them.

The future of SEO is undoubtedly set for rapid change. The only question is which existing strategies will be reinforced in the visual search revolution and which will become outdated.


https://searchenginewatch.com/2018/01/31/the-future-of-visual-search-and-what-it-means-for-seo-companies/