Machine vision has been one of the biggest success stories of the AI boom, enabling everything from automated medical scans to self-driving cars. But while the accuracy of all-seeing algorithms has improved massively, these systems can still be confused by images that humans have no problem deciphering.

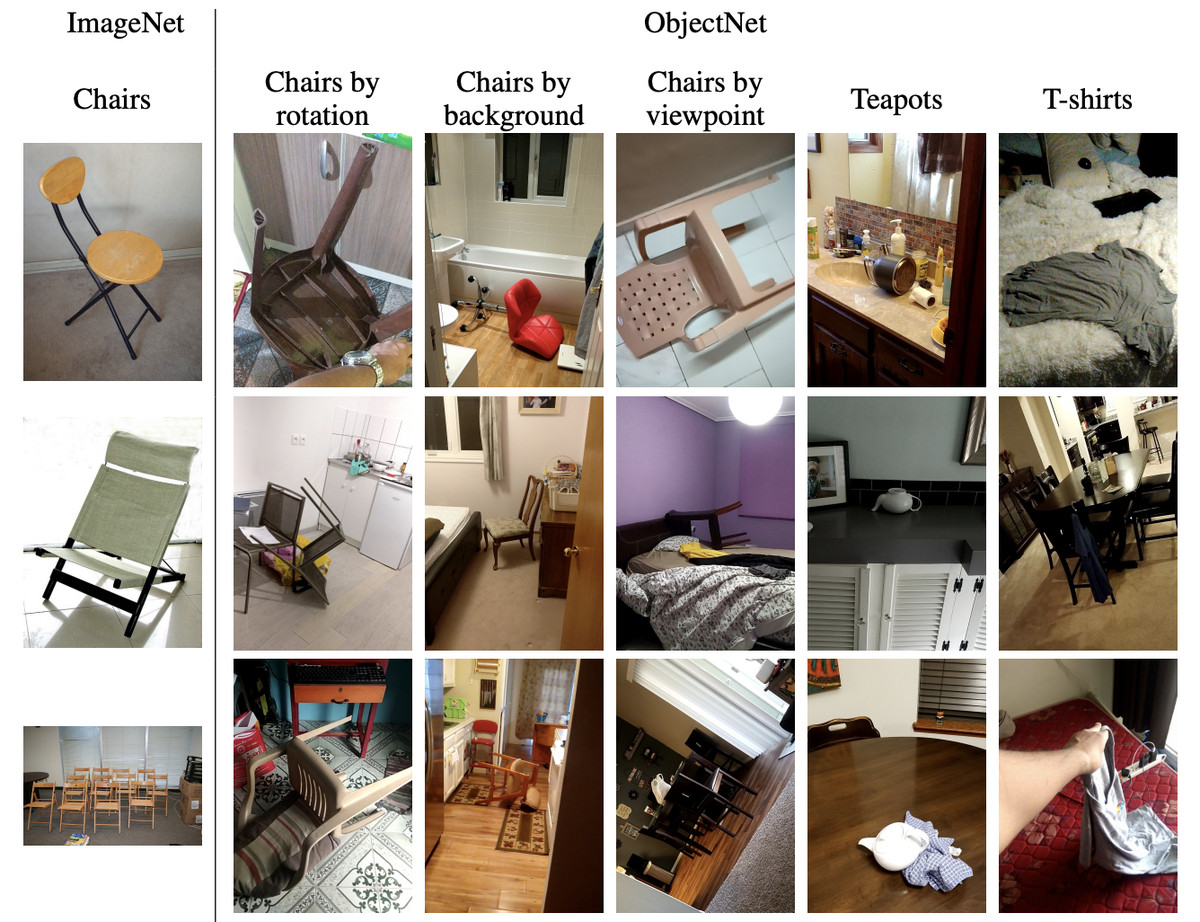

Just look at the collage at the top of this story. None of these pictures are particularly confusing, right? You can see hammers and oven gloves, and although the top-middle image is a bit tricky to decipher, it doesn't take long to work out that it's a chair viewed from above. A cutting-edge machine vision algorithm, meanwhile, would probably identify only one or two of these objects. That's a big shortfall for systems that are supposed to be driving our cars.

But that's exactly why these images were made. They're part of a dataset called ObjectNet, compiled by scientists at MIT to test the limits of AI vision. "We created this dataset to tell people the object-recognition problem continues to be a hard problem," research scientist Boris Katz told MIT News. "We need better, smarter algorithms."

Better data builds better algorithms, and ObjectNet will help here. It consists of 50,000 images of objects viewed from weird angles or in surprising contexts (things like a teapot upside-down on a sofa or a dining chair on its back in a bathroom). The idea is that ObjectNet can be used to test and assess the capabilities of different algorithms.

These sorts of images are hard for computers to process because they don’t appear in training data and because these systems have a limited understanding of how objects in the real world work. AI systems can’t easily extrapolate from items they’ve seen before to imagine how they might appear from different angles, in different lighting, and so on.

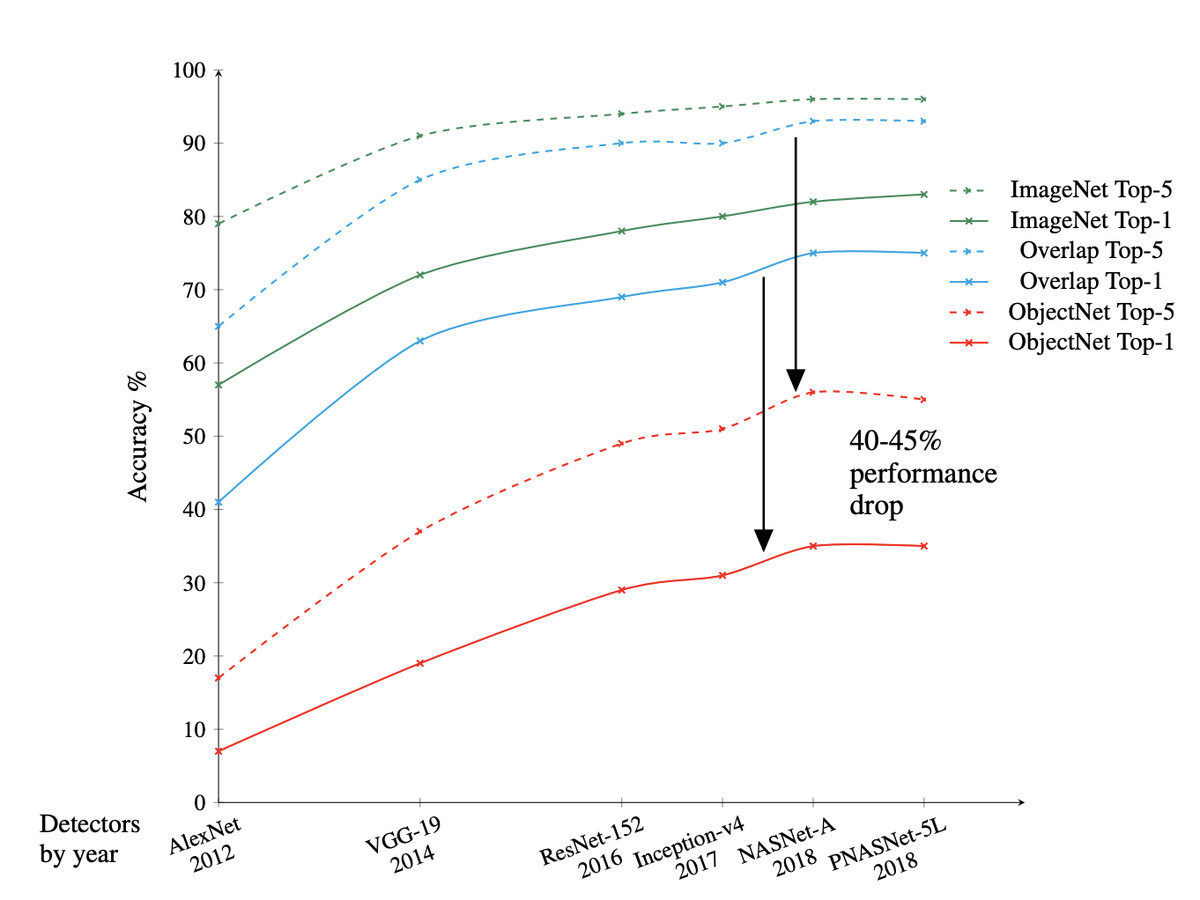

Indeed, when the creators of ObjectNet tested a number of popular machine vision systems on their pictures, they found that the systems' accuracy fell by 40 to 45 percent.

You could argue that because these pictures are designed to fool AI, it’s not exactly a fair fight. But it’s surely better to understand the weaknesses of these systems before we put our trust in them. “If we want to know how well algorithms will perform in the real world, we should test them on images that are unbiased and that they’ve never seen before,” one of the dataset’s co-creators, research scientist Andrei Barbu, told MIT News.

This fragility in computer vision systems is well known, though, and AI engineers have been working on the problem for years. They've even released similar datasets before, such as one made up of naturally occurring confusing images (unlike ObjectNet's pictures, which were deliberately posed). Improving the performance of AI vision systems is not impossible either; it just takes time, effort, and training. Just as with humans, sucking at something is the first step toward becoming sort of good at it.

https://www.theverge.com/2019/12/12/21012410/machine-vision-ai-adversarial-images-dataset-objectnet-mit-algorithms