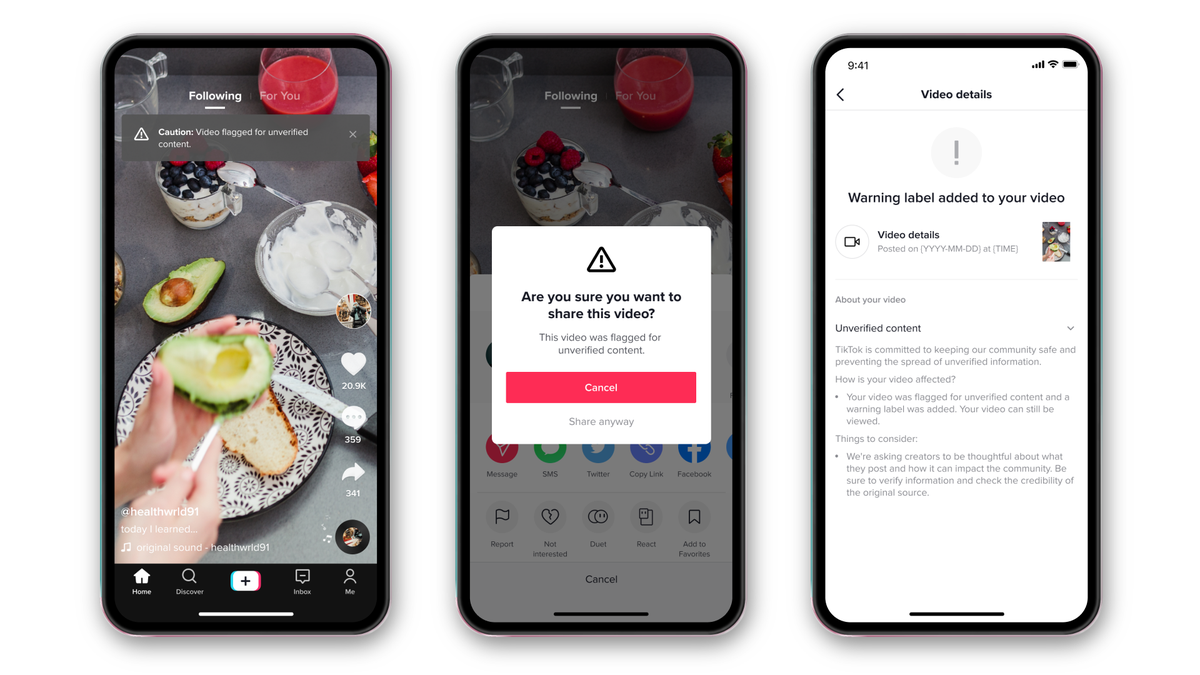

TikTok will start displaying warnings on videos that contain questionable information that fact-checkers couldn’t verify, and it’ll begin warning users who go to re-share those videos that the information hasn’t been confirmed.

The app will now display a warning label on these videos that reads, “Caution: Video flagged for unverified content.” This means a fact-checker looked at the content but wasn’t able to confirm whether it was true or false. TikTok has already been reducing the spread of some unverified videos, but they weren’t publicly flagged before today. Creators will now get a message when a warning label is added to their video, and all labeled videos will have their distribution reduced.

Viewers will also be warned when they go to re-share a video that has a warning label on it. After clicking the share button, which lets you post a video outside the app, a pop-up will ask if you’re “sure you want to share this video?” You can still share the video if you want to, but the prompt seems to be designed to dissuade some people from following through.

TikTok didn’t say how many videos it looks at each day for fact-checking or how it chooses which videos to review. A spokesperson said that fact-checking is often focused on topics like elections, vaccines, and climate change and that a video doesn’t have to reach a certain popularity to qualify for review. Videos that violate TikTok’s misinformation policy will be removed outright.

Other social apps have tried similar warnings over the past year to limit the spread of misinformation. Facebook warns you before you share stories about COVID-19 or articles that may be out of date. Twitter will warn you before retweeting potential misinformation, and on a much funnier note, will also suggest that you open and read through a news story before you repost it.

https://www.theverge.com/2021/2/3/22263100/tiktok-fact-check-warning-labels-unverified-content