A meme recently made the rounds. You might have heard about it. The “Ten Year Challenge.”

The challenge showed up on Facebook, Twitter, and Instagram under a variety of hashtags, such as #10YearChallenge, #TenYearChallenge, and even #HowHardDidAgingHitYou, along with a dozen or so lesser-used ones.

You might have even posted photos yourself. It was fun. You liked seeing your friends’ photos. “Wow! You haven’t aged a bit!” is a nice thing to hear any day.

That is, until someone told you they had read an article claiming the meme was potentially a nefarious attempt by Facebook to collect your photos to help train its facial recognition software, and you felt duped!

But was it? No. Were you? Probably not.

Meme Training?

The suggestion that this meme might be more than innocent social media fun originated in a Wired article by Kate O’Neill.

To be clear, the article does not say the meme is deceptive, but it does raise the possibility that it is being used to train Facebook’s facial recognition software.

From Wired.

“Imagine that you wanted to train a facial recognition algorithm on age-related characteristics and, more specifically, on age progression (e.g., how people are likely to look as they get older). Ideally, you’d want a broad and rigorous dataset with lots of people’s pictures. It would help if you knew they were taken a fixed number of years apart—say, 10 years.”

O’Neill was not saying it was, but she also wasn’t saying it wasn’t. That was enough to spawn dozens of articles and thousands of shares warning users that they were being duped.

But were they?

The Meme

While we can never be 100 percent sure unless we work at Facebook, I would lay good Vegas odds that this meme was nothing more than what it appeared to be – harmless fun.

O’Neill has stated that the purpose of her article was more about creating a dialogue around privacy, which I agree is a good thing.

“The broader message, removed from the specifics of any one meme or even any one social platform, is that humans are the richest data sources for most of the technology emerging in the world. We should know this and proceed with due diligence and sophistication.”

We do need to be more aware of, and more conversant in, the nature of digital privacy and our protections. However, is a conversation sparked by a meme that is almost surely harmless the right conversation?

Is making users fear what they shouldn’t, while not telling them how they are, right now, contributing to the very system they were warned about, the best way to approach this issue?

Maybe, but maybe not.

Chasing Ghosts

I believe the only way we become better netizens is by knowing what truly threatens our privacy and what does not.

So, in the spirit of better understanding, let’s break this “nefarious” meme down: what processes are actually at work, and why this meme (or any meme) would be unlikely to serve as a training set for Facebook’s (or any other company’s) facial recognition system.

Facebook Denies Involvement

Before we take the deep dive into Facebook’s facial recognition capabilities, it is important to mention that Facebook denies any involvement in the meme’s creation.

The 10 year challenge is a user-generated meme that started on its own, without our involvement. It’s evidence of the fun people have on Facebook, and that’s it.

— Facebook (@facebook) January 16, 2019

But can we trust Facebook?

Maybe they are doing something without our knowledge. After all, it would not be the first time, right?

Remember how we just found out they paid people to install an app on their phones that spied on them?

So how do we know that Facebook is not using this meme to better their software?

Well, maybe we need to start with a better understanding of how powerful their facial recognition software is, and the basics of how it, and the artificial intelligence behind it, work.

Facebook & Facial Recognition

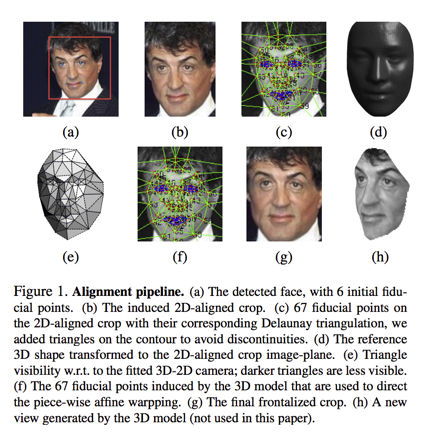

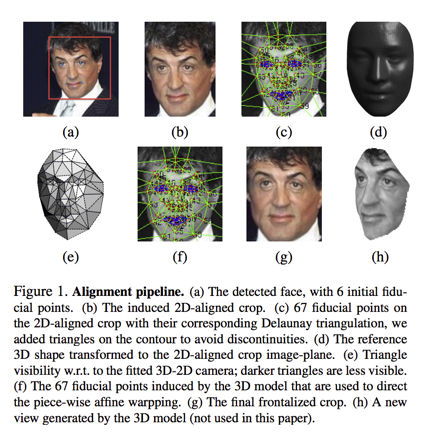

Back in 2014, Facebook presented a paper called “DeepFace: Closing the Gap to Human-Level Performance in Face Verification” at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

*Note: the PDF was published in 2016, but the paper was presented in 2014.

This paper outlined a breakthrough in facial recognition technology called “DeepFace.”

What Is DeepFace?

DeepFace was developed by Facebook’s internal research group, and in 2014 it was almost as good as a human at recognizing a person from an image.

Well, almost.

DeepFace “only” had “97.25 percent accuracy,” which was “.28 percent less than a human being.” So while not 100 percent the same as a human, it was nearly equivalent, or let’s just say it was good enough for government work.

For comparison, the FBI facial recognition system being developed at the same time was only 85 percent accurate, a far cry from Facebook’s new technology.

Why was Facebook so much better at this? What made the difference?

Facebook, DeepFace & AI

In the past, computers were just not powerful enough to run facial recognition at scale with great accuracy, no matter how well written the software behind it was.

However, in the past 5 to 10 years, computer systems have become much more capable and now have the processing power needed to handle the number of calculations a 97.25 percent accurate facial recognition system requires.

Processing Power = Game Changer!

Why? Because the increased computing capacity of these newer systems allowed researchers to apply artificial intelligence (AI) and machine learning to the problem of identifying people.

So why was the FBI so much less accurate than Facebook? After all, it had access to the same computer processing power.

Simply put, in layman’s terms: Facebook had data.

Not just any data, but good data, and lots of it: good data with which to train its AI system to identify users. The FBI did not. It had far less data, and its data was much less useful for training an AI because it was not “labeled.”

“Labeled” means a data set in which the identity of each person is known, giving the AI something to learn from.

But why?

DeepFace

Before we explore why Facebook was so much better at identifying users than the FBI was at identifying criminals, let’s take a look at how DeepFace solved issues of facial recognition.

From the paper presented at IEEE.

Facebook was using a neural network and deep learning to better identify users when the user was not labeled (i.e., unknown).

A neural network is a computer “brain,” so to speak.

Neural Networks

To put it simply, neural networks are meant to simulate how our minds work.

While computers do not have the processing power of the human mind (yet), neural networks allow the computer to better “think” rather than just process. There is a “fuzziness” to how it analyzes data.

Thinking Computers?

OK, computers do not really think, but they can process input data much faster than we can. These networks let them analyze patterns deeply and quickly, and represent data as vectors: lists of numbers. This is a form of categorization.

From these vectors, analyses can be made and the software can make determinations or “conclusions” from the data. The computer can then “act” on these determinations without human intervention. This is the computer version of “thinking.”

Note: when the word “act” is used, it does not mean the computer is capable of independent thought; it is just responding to the algorithms it is programmed with.
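To make the idea of “vectors of numbers” and “fuzziness” a little more concrete, here is a toy sketch. It is not DeepFace: the pixel values, weights, and two-unit layer are invented for illustration. A layer turns raw numbers into a feature vector, and a sigmoid turns a weighted sum into a graded score between 0 and 1 rather than a hard yes or no.

```python
import math

def relu(x: float) -> float:
    """Pass positive signals through; clip negative ones to zero."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1): a graded, 'fuzzy' score."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Each output is a weighted sum of all inputs plus a bias, passed through ReLU."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Four hypothetical pixel brightness values (0.0 = black, 1.0 = white).
pixels = [0.9, 0.1, 0.8, 0.2]

# Invented weights for a two-unit hidden layer.
hidden_weights = [[1.0, -1.0, 1.0, -1.0],
                  [-0.5, 0.5, -0.5, 0.5]]
hidden_biases = [0.0, 0.1]

features = dense_layer(pixels, hidden_weights, hidden_biases)  # the vector of numbers

# Output unit: a weighted sum of the features, squashed into a score.
score = sigmoid(2.0 * features[0] - 1.0 * features[1] - 1.0)
print(features, round(score, 3))
```

A real network stacks many such layers and learns its weights from labeled examples instead of having them typed in by hand.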

This is an oversimplified explanation, but this is the basis of the system Facebook created.

Here’s Skymind’s definition of neural network:

But how did Facebook become so good at labeling people if it did not know who they were?

Like anything humans do, with practice and training.

Tag Suggestions

In 2010, Facebook rolled out a default user tagging system called “Tag Suggestions.” It did not tell users the purpose behind it; it just made tagging photos of your friends and family seem like something fun to do.

This tagging allowed Facebook to create a “template” of your face to be used as a control when trying to identify you.
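Facebook has not published exactly how a “template” is stored, so the following is only a sketch under an assumption: that a template is the average of the numeric face vectors (embeddings) from every photo in which a person was tagged, rescaled to unit length. The three-number embeddings are invented; real systems use hundreds or thousands of dimensions.

```python
import math

def normalize(vec):
    """Rescale a vector to length 1 so only its direction matters."""
    length = math.sqrt(sum(v * v for v in vec))
    return [v / length for v in vec]

def build_template(tagged_embeddings):
    """Hypothetical template: element-wise mean of a person's tagged face vectors, normalized."""
    if not tagged_embeddings:
        raise ValueError("a template needs at least one tagged photo")
    n = len(tagged_embeddings)
    dims = len(tagged_embeddings[0])
    mean = [sum(e[i] for e in tagged_embeddings) / n for i in range(dims)]
    return normalize(mean)

# Invented embeddings from three photos tagged with the same name.
tagged = [
    [0.9, 0.1, 0.4],
    [0.8, 0.2, 0.5],
    [1.0, 0.0, 0.3],
]
template = build_template(tagged)
print([round(t, 3) for t in template])
```

Averaging smooths out differences in lighting or expression between individual photos, which is one plausible reason a template built from many tags would make a steadier “control” than any single picture.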

How Did They Get Your Permission?

As often happens, Facebook treated acceptance of its Terms of Service as a blanket opt-in for everyone on Facebook, except where a country’s laws forbade it. As The Daily Beast reported:

“First introduced in 2010, Tag Suggestions allows Facebook users to label friends and family members in photos with their name using facial recognition. When a user tags a friend in a photo or selects a profile picture, Tag Suggestions creates a personal data profile that it uses to identify that person in other photos on Facebook or in newly uploaded images.

Facebook started quietly enrolling users in Tag Suggestions in 2010 without informing them or obtaining users’ permission. By June 2011, Facebook announced it had enrolled all users, except for a few countries.”

Labeled Data

AI training sets require a known set of labeled variables. The machine cannot learn the way we humans do, by inferring relationships between unknown variables without reference points, so it needs a known, labeled set of people to start from.

This is where tag suggestions came in.

We can see in the paper that, to accomplish this, they used 4.4 million faces from 4,030 people on Facebook: people who were labeled, or what we today call “tagged.”

Note: we can also see that the original research accounted for age, since the original training data was timestamped.
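Here is a hedged sketch of why labels and timestamps matter. The records are invented: each is a photo ID, the name it was tagged with (or None if untagged), and the year it was taken. Grouping tagged photos by name gives a classifier one class per person, in the spirit of the per-identity classification the DeepFace paper describes, and the years show that tagged, timestamped photos already contain age spans without any meme.

```python
from collections import defaultdict

# Invented records: (photo_id, tagged_name or None, year_taken)
photos = [
    ("p1", "alice", 2009),
    ("p2", "alice", 2019),
    ("p3", "bob", 2012),
    ("p4", None, 2018),      # untagged: nobody said who this is
    ("p5", "bob", 2014),
    ("p6", "alice", 2014),
]

def build_labeled_set(records):
    """Group tagged photos by person and give each person a class index."""
    by_person = defaultdict(list)
    for photo_id, name, year in records:
        if name is None:
            continue  # no label means no supervised training signal
        by_person[name].append((photo_id, year))
    class_index = {name: i for i, name in enumerate(sorted(by_person))}
    return by_person, class_index

def age_span(by_person):
    """Years between each person's earliest and latest tagged photo."""
    return {
        name: max(y for _, y in shots) - min(y for _, y in shots)
        for name, shots in by_person.items()
    }

by_person, class_index = build_labeled_set(photos)
print(class_index)          # {'alice': 0, 'bob': 1}
print(age_span(by_person))  # {'alice': 10, 'bob': 2}
```

The untagged photo simply drops out: without a label, supervised training has nothing to check its answer against.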

So it raises the question: why would they need a meme now?

The answer is that they wouldn’t.

Why could the FBI only analyze people correctly 85 percent of the time? Because they lacked data. Facebook didn’t.

Labels

To be clear, facial recognition software like DeepFace does not “recognize you” the way a human would. It can only decide whether photos are similar enough to be from the same source.

It only knows that Image A and Image B are X% likely to match the template image. The software needs labels to learn that you are you.
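A minimal sketch of that “X% likely” comparison, assuming faces have already been turned into numeric vectors (embeddings). Cosine similarity is one common way to score how alike two vectors are; it is a similarity score rather than a true probability, and the 0.8 threshold is a hypothetical cutoff, not a value Facebook has published. All vectors are invented.

```python
import math

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; values near 0.0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def same_person(embedding, template, threshold=0.8):
    """Verification: is this face vector close enough to the template?"""
    return cosine_similarity(embedding, template) >= threshold

template = [0.9, 0.1, 0.4]      # invented template vector
image_a = [0.85, 0.15, 0.45]    # invented: close to the template
image_b = [0.1, 0.9, 0.2]       # invented: far from the template

print(round(cosine_similarity(image_a, template), 3), same_person(image_a, template))
print(round(cosine_similarity(image_b, template), 3), same_person(image_b, template))
```

Notice that nothing here produces a name. The function only answers “match” or “no match” against a template, which is exactly the gap labels fill.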

What facial recognition software generally lacked were labels tying people to photos.

Facebook, however, did not need to guess who a user was; it had tags to tell it. As we can see in the paper, a portion of these known users was then used as a training set for the AI, and that was then expanded across the platform.

As mentioned, this was done without users’ knowledge because, well, it was thought to be kind of “creepy.”

Billions of Images All Tagged by You

So Facebook’s accuracy is not just because it accounted for aging in its original data set, or because it used a deep neural network with more than 120 million parameters to analyze faces; it is also because its training data was tagged by you.

As we now know, facial recognition cannot identify an image as JOHN SMITH; it can only tell whether a set of images is likely the same as the template image. However, with Facebook users tagging billions of images over and over again, Facebook could say these two images = this person with a level of accuracy that was unparalleled.

That tagging enables the software to say that not only are these two images alike, but they are most likely JOHN SMITH.
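Attaching a name can be sketched as a small step on top of a similarity check. Under stated assumptions (invented vectors, a hypothetical 0.8 cutoff, and templates stored under the name they were tagged with), a new face is compared against every labeled template; the closest one wins if it clears the threshold, and otherwise the face stays unnamed. With no template for a person, there is nothing to match against, so that person’s name can never come back.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors' directions, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def identify(embedding, templates, threshold=0.8):
    """Return the best-matching tagged name, or None if nothing is close enough."""
    best_name, best_score = None, threshold
    for name, template in templates.items():
        score = cosine_similarity(embedding, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Hypothetical templates built from users' tags.
templates = {
    "JOHN SMITH": [0.9, 0.1, 0.4],
    "JANE DOE": [0.1, 0.9, 0.3],
}

new_photo = [0.88, 0.12, 0.42]  # invented embedding of an untagged photo
print(identify(new_photo, templates))                              # JOHN SMITH
print(identify(new_photo, {"JANE DOE": templates["JANE DOE"]}))    # None
```

The second call mimics a world where JOHN SMITH has no template: the same photo, the same math, but no name to return.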

You trained the AI with your tagging, but what does that mean?

AI Training & ‘The Ten Year Challenge’

So, we now know that AI is trained on good data sets of known, labeled variables (in this case, faces tied to users) so that it learns why a piece of data fits the model and why it doesn’t.

Now, this is a broad simplification that AI experts would have every right to take exception to, but it works as a general definition for simplicity’s sake.

O’Neill’s Wired article speculated that the meme could be training the AI, so let’s look at why this wouldn’t be a good idea from a scientific perspective.

“Imagine that you wanted to train a facial recognition algorithm on age-related characteristics and, more specifically, on age progression (e.g., how people are likely to look as they get older). Ideally, you’d want a broad and rigorous dataset with lots of people’s pictures. It would help if you knew they were taken a fixed number of years apart—say, 10 years.”

O’Neill names here the most important factor for an AI training set:

“…you’d want a broad and rigorous dataset with lots of people’s pictures.”

The meme data is definitely broad, but is it rigorous?

Flawed Data

While the meme’s virality might mean the data is broad, it isn’t rigorous.

Here is a sample of postings from the top 100 photos found in one of the hashtags on Facebook.

While some people did post their own photos (not included here for privacy reasons), roughly 70 percent of the images were not of people at all, but everything from drawings to photos of inanimate objects and animals, and even logos, as shown here.

Now, this is not a scientific test; I just grabbed screenshots of the top 100 photos showing in the hashtags. That said, it is fairly easy to see that the “data set” would be so rife with unlabeled noise that it would be virtually impossible to use it to train anything, let alone one of the most sophisticated facial recognition systems in the world.
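The arithmetic behind that point fits in a few lines. The posts below are invented stand-ins that mirror the rough split described above; they are not the author’s screenshots. The assumption that no post carries a verified label reflects the point that the meme itself never confirms who is pictured or when each photo was taken.

```python
# Invented sample mirroring the rough split described above:
# 30 posts show a human face; 70 show drawings, animals, objects, or logos.
# Assumption: the meme supplies no verified identity or date for any post.
posts = [{"shows_human_face": i < 30, "label_verified": False} for i in range(100)]

def usable_for_training(post):
    """A supervised training pair needs real faces AND a trustworthy identity/date label."""
    return post["shows_human_face"] and post["label_verified"]

face_posts = [p for p in posts if p["shows_human_face"]]
usable = [p for p in posts if usable_for_training(p)]

print(f"{len(face_posts)} of {len(posts)} posts show a face")
print(f"{len(usable)} of {len(posts)} posts carry a verified label")
```

Tagged photos, by contrast, arrive with both ingredients: a face and a name someone attached to it.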

This is why a social media meme would not be used to train the AI. It is inherently flawed data.

So now that we know how the software associates like images with you, how does the AI specifically decide what images are similar in the first place?

Facial Recognition at Work

Remember all that tagging Facebook had users do without telling them what it was for, and the template it created of you and everyone else on Facebook?

The template is used as a control to identify new images either as likely you or likely not you, whether or not you tag them.

This is how Facebook’s DeepFace sees you. Outlined is the step-by-step process by which it normalizes the data it finds in your image.

To the AI, you are not a face; you are just a series of pixels in varying shades. It uses those pixels to determine where common reference points lie, and it uses those reference points to determine whether this face matches your initial template: the one created when Facebook rolled out the Tag Suggestions feature.

For instance, the nose always casts a shadow in a certain way, so the AI can detect a nose even when the shadow falls in different places.

And so on and so on.

Pipeline Process

The site Techechelons offers an excellent summary of how DeepFace’s complex facial recognition system was developed and how it works:

The Input

Researchers scanned a wild form (low picture quality photos without any editing) of photos with large complex data like images of body parts, clothes, hairstyles etc. daily. It helped this intelligent tool obtain a higher degree of accuracy. The tool enables the facial detection on the basis of human facial features (eyebrows, nose, lips etc.).

The Process

In modern face recognition, the process completes in four raw steps:

- Detect

- Align

- Represent

- Classify
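The four steps can be read as a chain of functions, each feeding the next. This is a toy pipeline, not DeepFace’s code: every stage is a hypothetical stand-in (the “detector” crops a fixed box, the “aligner” rescales brightness, the “representation” flattens pixels into a vector, and the classifier is a cosine-similarity check against a stored template). The point is the shape of the pipeline, not the quality of any stage.

```python
import math

def detect(image):
    """Stand-in detector: assume the face fills the central 2x2 block of a 4x4 image."""
    return [row[1:3] for row in image[1:3]]

def align(face):
    """Stand-in alignment: rescale brightness to 0..1 so lighting differences matter less."""
    flat = [p for row in face for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1
    return [[(p - lo) / span for p in row] for row in face]

def represent(face):
    """Stand-in representation: flatten the aligned pixels into a feature vector."""
    return [p for row in face for p in row]

def classify(vector, template, threshold=0.9):
    """Verification against a stored template via cosine similarity."""
    dot = sum(a * b for a, b in zip(vector, template))
    norms = math.sqrt(sum(a * a for a in vector)) * math.sqrt(sum(b * b for b in template))
    return norms > 0 and dot / norms >= threshold

def pipeline(image, template):
    """Detect -> Align -> Represent -> Classify."""
    return classify(represent(align(detect(image))), template)

# Invented 4x4 grayscale images (0 = black, 255 = white).
bright = [[0, 0, 0, 0],
          [0, 200, 50, 0],
          [0, 60, 180, 0],
          [0, 0, 0, 0]]
dim = [[p // 2 for p in row] for row in bright]   # same "face", half the light
other = [[0, 0, 0, 0],
         [0, 50, 200, 0],
         [0, 180, 60, 0],
         [0, 0, 0, 0]]

template = represent(align(detect(bright)))       # stored when the photo was tagged
print(pipeline(dim, template), pipeline(other, template))  # True False
```

The alignment stage is what lets the dim photo match: once brightness is normalized, it produces the same vector as the bright original.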

As Facebook utilizes an advanced version of this approach, the steps are a bit more matured and elaborated than these. Adding the 3D transformation and piece-wise affine transformation in the procedure, the algorithm is empowered for delivering more accurate results.
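For readers curious what an affine transformation does during alignment, here is a simplified 2D sketch. It is not DeepFace’s 3D frontalization or its piece-wise affine warp: it solves for the single affine transform that moves three detected landmarks (hypothetically, the two eye centers and the mouth center) onto fixed “canonical” positions, so every face lands in roughly the same place and orientation before it is represented. The landmark coordinates are invented.

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(m, rhs):
    """Solve the 3x3 system m @ x = rhs with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("landmarks are collinear; affine transform is undefined")
    solution = []
    for col in range(3):
        # Replace column `col` with the right-hand side.
        replaced = [row[:col] + [rhs[i]] + row[col + 1:] for i, row in enumerate(m)]
        solution.append(det3(replaced) / d)
    return solution

def fit_affine(src_points, dst_points):
    """Find (a, b, c, d, e, f) with x' = a*x + b*y + c and y' = d*x + e*y + f."""
    m = [[x, y, 1.0] for x, y in src_points]
    a, b, c = solve3(m, [x for x, _ in dst_points])
    d, e, f = solve3(m, [y for _, y in dst_points])
    return a, b, c, d, e, f

def apply_affine(params, point):
    a, b, c, d, e, f = params
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)

# Invented landmarks found in a tilted, off-center photo: left eye, right eye, mouth.
detected = [(120.0, 80.0), (180.0, 100.0), (140.0, 160.0)]
# Canonical positions every face is warped to.
canonical = [(30.0, 40.0), (70.0, 40.0), (50.0, 80.0)]

params = fit_affine(detected, canonical)
print([tuple(round(v, 6) for v in apply_affine(params, p)) for p in detected])
```

Applying the same transform to every pixel of the photo would produce the “standardized” face; a piece-wise approach fits a separate transform like this for each small triangle of landmarks.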

The Output

The final result is a face representation, which is derived from a 9-layer deep neural net. This neural net has more than 120 million variables, which are mapped to different locally-connected layers. On contrary to the standard convolution layers, these layers do not have weight sharing deployed.
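The difference between a standard convolution layer and the locally connected layers described above comes down to weight sharing. In this simplified one-dimensional sketch (invented numbers; DeepFace’s real layers are two-dimensional and far larger), a convolution slides one shared kernel across every position, while a locally connected layer looks at the same small window but keeps a separate kernel for each position. That costs more parameters, but lets each region of an aligned face (say, around the eyes versus the mouth) learn its own features.

```python
def conv1d(signal, kernel):
    """Standard convolution (cross-correlation, as in most deep learning code): one shared kernel."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def locally_connected1d(signal, kernels):
    """Locally connected layer: same sliding window, but a different kernel at each position."""
    k = len(kernels[0])
    positions = len(signal) - k + 1
    if len(kernels) != positions:
        raise ValueError(f"need one kernel per position ({positions})")
    return [sum(kernels[i][j] * signal[i + j] for j in range(k))
            for i in range(positions)]

signal = [1.0, 2.0, 3.0, 4.0, 5.0]
shared_kernel = [1.0, 0.0, -1.0]               # 3 weights in total
per_position = [[1.0, 0.0, -1.0],
                [0.5, 0.5, 0.5],
                [0.0, 1.0, 0.0]]               # 3 positions x 3 weights = 9 weights

print(conv1d(signal, shared_kernel))               # [-2.0, -2.0, -2.0]
print(locally_connected1d(signal, per_position))   # [-2.0, 4.5, 4.0]
```

The convolution detects the same pattern everywhere; the locally connected layer can respond differently at each position, at the price of multiplying the weight count by the number of positions.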

Training Data

Any AI or deep learning system needs enough training data so that it can ‘learn’. With a vast user base, Facebook has enough images to experiment. The team used more than 4 million facial images of more than 4000 people for this purpose. This algorithm performs a lot of operations for recognizing faces with human accuracy level.

The Result

Facebook can detect whether the two images represent the same person or not. The website can do it, irrespective of surroundings light, camera angle, and colors wearing on face i.e. facial make-up. To your surprise, this algorithm works with 97.47 percent accuracy, which is almost equal to human eyes accuracy 97.65 percent.

I know that for some this might all seem above their pay grade, but the question is really quite simple.

Since Facebook was nearly as accurate as a human five years ago, why would it need a meme now? Again, it wouldn’t.

It is the same reason the FBI could only identify people correctly 85 percent of the time: it lacked data. Facebook didn’t.

Who gave Facebook that data? You did. When you tagged people.

Don’t be too hard on yourself, though. As you now know, when Facebook rolled out the initial labeling system, it didn’t tell you why. By the time you might have known, the system was already set.

Now, what about the claim that the meme is needed to help the AI better identify aging?

Facial Recognition & Aging

Although we humans might hesitate if we had to identify someone 30 or 40 years after we last saw them, we rarely do at 10 years.

When you looked at all your friends’ posts did you have trouble recognizing most or any of them? I know I didn’t and DeepFace doesn’t either.

All those billions of photos with all those billions of tags have made Facebook’s facial recognition system incredibly accurate and “knowledgeable.” It would not be thrown off by the lines of an aging face because, remember, it is not looking at your face the way a human does. It is looking at data points, and those data points are not thrown off by a few wrinkles.

Even the parts of the face that change with age can be calculated relatively easily since the AI was trained to recognize aging in the original data sets.

There will always be some outliers, but age progression, while difficult for less sophisticated software, was built into Facebook’s algorithms over five years ago through the original training data.

Now think of how many photos have been uploaded and tagged since then. Every tag trains the AI to be more accurate. Every person has a template starting point that serves as their control. Matching your face now to that template is not difficult.

How Powerful is Facebook’s Recognition AI Today?

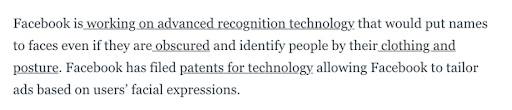

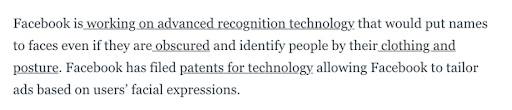

Facebook’s recognition AI is so powerful that it does not even need your face to recognize you anymore. The advanced version of DeepFace can use the way your clothing hangs, your posture, and your gait to determine who you are with a relatively high degree of accuracy, even if it never “sees” your face.

Need more proof?

This is Facebook’s notification about its facial recognition technology.

Notice it can find you when you are not tagged. That means it has to determine who you are without a current label, but never fear – all those labels you used before created a template.

And that template can be used to transform almost any image of you into a standardized, front-facing, scannable piece of data that can be tied to you, because your template was built from Tag Suggestions and from all the general photo tagging done over the years.

How Can You Tell If They Can Identify You?

Upload a photo. Did it suggest your name? Did it tag you at an event with someone even though your photo is not tagged itself?

This is because it can determine who you are without human intervention. The neural network does not need you to tell it who you are anymore; it knows.

In fact, this AI is so powerful that I was able to upload an image of my cat and tag it with an existing facial tag for another animal.

I tagged it four times, went away for a few days, and came back. I uploaded a new picture of my cat and, lo and behold, Facebook tagged it without any action on my part.

It tagged it with the tag of the friend’s pet.

This also shows how easy it would be to retrain the algorithm to recognize something or someone other than you under your name, should you ever want to change your template.
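The cat experiment can be illustrated with a hypothetical model of a template: the running average of every face vector tagged with one name. Whether Facebook does anything like this is not public. The vectors are invented: the friend’s pet and the author’s cat start far apart, and after four cat photos are tagged with the pet’s name, the template sits much closer to the cat than it did.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors' directions, from -1.0 to 1.0."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

class Template:
    """Hypothetical template: running mean of every embedding tagged with one name."""

    def __init__(self):
        self.mean = None
        self.count = 0

    def add_tag(self, embedding):
        """Incremental mean update: new_mean = old_mean + (x - old_mean) / n."""
        self.count += 1
        if self.mean is None:
            self.mean = list(embedding)
        else:
            self.mean = [m + (x - m) / self.count for m, x in zip(self.mean, embedding)]

friends_pet = [0.2, 0.9, 0.1]   # invented embedding of the friend's pet
my_cat = [0.8, 0.1, 0.6]        # invented embedding of the author's cat

pet_tag = Template()
pet_tag.add_tag(friends_pet)                     # the friend's original tag
before = cosine_similarity(pet_tag.mean, my_cat)

for _ in range(4):                               # "I tagged it four times"
    pet_tag.add_tag(my_cat)
after = cosine_similarity(pet_tag.mean, my_cat)

print(round(before, 3), round(after, 3))
```

The incremental-mean formula is a standard way to update an average without storing every past photo; here it shows how a handful of deliberate tags can pull a template toward a new subject.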

The Good News!

You can turn off this feature.

When you turn it off, the template the AI uses to match new, unknown images to you is turned off. Without that template, the AI cannot recognize you. Remember, the template is the control it needs to know whether a new image it “sees” is you or someone else.

So now that we know that what trains the AI is not a random meme of variable data, we can come back to the discussion around privacy.

Facial Recognition Is Everywhere

Before everyone deletes their Facebook accounts, it is important to realize that facial recognition systems of varying levels of accuracy are everywhere in our daily lives.

For example:

- The ACLU has sharply criticized Amazon’s “Rekognition” facial recognition system after an ACLU test in which it falsely matched 28 members of Congress with mug shots of people who had been arrested, noting that the system was biased against people with darker skin tones. Amazon has had two pilot programs with police departments in the U.S. The one in Orlando has dropped the technology, but the one in Washington County, Oregon, is likely still using it, though the department has stated it would not use it for mass surveillance, as that is against state law.

- The Daily Beast reports that the Trump administration staffed the DHS transition team with executives tied to these systems: “Government is relying on it as well. President Donald Trump staffed the U.S. Homeland Security Department transition team with at least four executives tied to facial recognition firms. Law enforcement agencies run facial recognition programs using mug shots and driver’s license photos to identify suspects. About half of adult Americans are included in a facial recognition database maintained by law enforcement, estimates the Center on Privacy & Technology at Georgetown University Law School.”

These are just a couple of examples. MIT Technology Review reported:

“…the toothpaste is already out of the tube. Facial recognition is being adopted and deployed incredibly quickly. It’s used to unlock Apple’s latest iPhones and enable payments, while Facebook scans millions of photos every day to identify specific users. And just this week, Delta Airlines announced a new face-scanning check-in system at Atlanta’s airport. The US Secret Service is also developing a facial-recognition security system for the White House, according to a document highlighted by the ACLU. ‘The role of AI in widespread surveillance has expanded immensely in the U.S., China, and many other countries worldwide,’ the report says.

In fact, the technology has been adopted on an even grander scale in China. This often involves collaborations between private AI companies and government agencies. Police forces have used AI to identify criminals, and numerous reports suggest it is being used to track dissidents.

Even if it is not being used in ethically dubious ways, the technology also comes with some in-built issues. For example, some facial-recognition systems have been shown to encode bias. The ACLU researchers demonstrated that a tool offered through Amazon’s cloud program is more likely to misidentify minorities as criminals.”

Privacy Is a Dwindling Commodity

There is a need for humans to be able to live a life untracked by technology. We need spaces to be ourselves without the thought of being monitored and to make mistakes without fear of repercussions, but with technology, those spaces are getting smaller and smaller.

So, while O’Neill’s Wired article was wrong about how likely the meme was to be used to train the AI, she was right that we all need to be more aware of how much of our privacy we are giving up for the sake of a $5-off coupon to Sizzler.

What we need are citizens who are more informed about how technology works and how that technology is encroaching little by little into our private lives.

Then we need those citizens to demand better laws to protect them from companies that would create the largest and most powerful facial recognition system in the world by simply convincing them that tagging photos would be fun.

Some places already have such protections. The European Union (EU) has some of the strictest privacy laws and doesn’t allow Facebook’s facial recognition feature. The U.S. needs people to demand better data protections, as we know just where this type of system can go if left to its own devices.

If you’re unsure, just look to China. It has developed a social rating system for its people that affects everything from whether they can get a house to whether they can go to college or work at all.

This is an extreme example. But remember the words of one of the originators of facial recognition technology.

“When we invented face recognition, there was no database,” Atick said. Facebook has “a system that could recognize the entire population of the Earth.”

Memes are the last thing we need to worry about. Enjoy them!

There are far bigger issues to contemplate.

Oh, but O’Neill is right that you should stay away from those quizzes where you use your Facebook login to find out which “Game of Thrones” character you are. They are stealing your data, too.

Image Credits

All screenshots taken by author, January 2019
