A long time in the making

Some of the models that make up Project Firefly have been in the works for up to three years, according to Costin. In that time, generative AI has progressed from garbled abstractions and meandering strings of text to passably realistic images and copy. But investment in the project picked up around a year ago, when research group OpenAI’s Dall-E 2 supercharged the field of AI image generation.

To avoid lawsuits like the one Getty Images filed against image generation startup Stability AI last month, Adobe has trained its image model only on Adobe Stock images and media in the public domain. The company also gives creators the option to exclude their work from training datasets and plans to eventually draw up a compensation model for artists whose work is used.

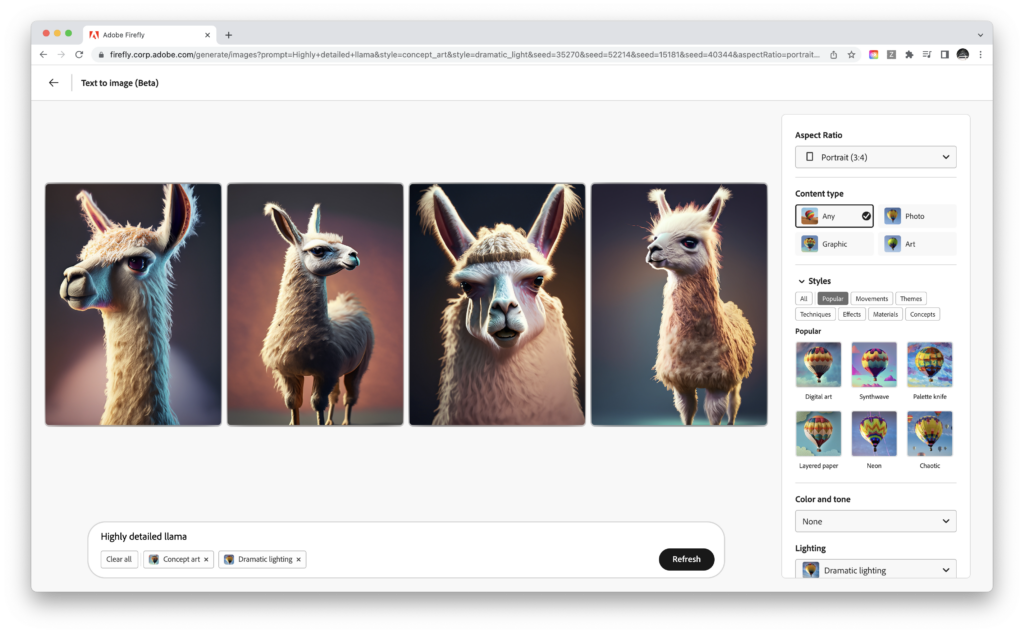

When research began, it was unclear whether that training set of around 330 million images would be enough to produce a tool on par with models trained on billions of assets from across the web, Costin said.

“This is a question that we had to prove by doing, and our research organization took this challenge to heart,” Costin said. “We actually feel that we have achieved pretty good output quality, even if it’s not the whole internet.”

While the training set may be smaller, the approach could ease the concerns of brands and agencies that, for legal reasons, have been hesitant to use image generation for anything beyond internal ideation.

The release comes as other big tech companies are scrambling to integrate the latest advances in AI into their products. Last week, Microsoft announced an initiative called Copilot that will embed AI into its enterprise tools like Word, PowerPoint, Outlook and Teams. Google is similarly bringing its own competing AI tools to Gmail, Docs and Slides.